the_dunk_tank

It's the dunk tank.

This is where you come to post big-brained hot takes by chuds, libs, or even fellow leftists, and tear them to itty-bitty pieces with precision dunkstrikes.

Rule 1: All posts must include links to the subject matter, and no identifying information should be redacted.

Rule 2: If your source is a reactionary website, please use archive.is instead of linking directly.

Rule 3: No sectarianism.

Rule 4: TERF/SWERFs Not Welcome

Rule 5: No ableism of any kind (that includes stuff like libt*rd)

Rule 6: Do not post fellow hexbears.

Rule 7: Do not individually target other instances' admins or moderators.

Rule 8: The subject of a post cannot be low-hanging fruit, that is, comments/posts made by a private person that have a low number of upvotes/likes/views. Comments/posts made on other instances that are accessible from hexbear are an exception to this. Posts that do not meet this requirement can be posted to [email protected]

Rule 9: if you post ironic rage bait im going to make a personal visit to your house to make sure you never make this mistake again


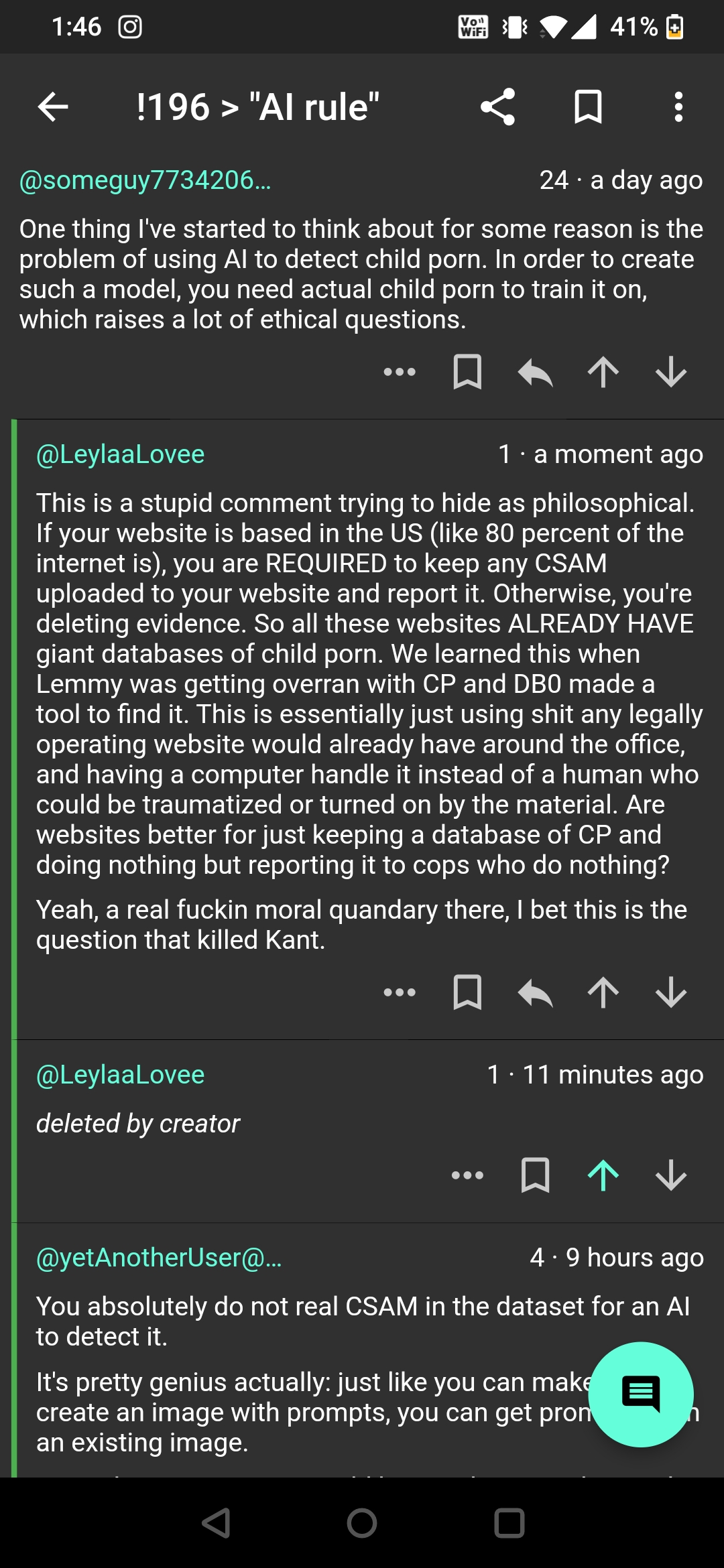

Google already has an ML model that detects this stuff, and they use it to scan everyone's private Google Photos.

https://www.eff.org/deeplinks/2022/08/googles-scans-private-photos-led-false-accusations-child-abuse

They must have classified and used a bunch of child porn to train the model, and I have no problem with that; it's not generating new CP or abusing anyone. I'm more uncomfortable with them running all our photos through an AI model, sending the results to the US government, and not telling the public.

They just run it on photos stored on their servers. Microsoft, Apple, Amazon, and Dropbox also do the same. There are also employees in their security departments with the fkd up job of having to verify anything flagged and then alert law enforcement.

Everyone always forgets that "cloud storage" means files are stored on someone else's machine. I don't think anyone, even soulless companies like Google or Microsoft, wants to be hosting CSAM. So it is understandable that they scan the contents of Google Photos or Microsoft OneDrive; even if they didn't have a legal obligation, there would be a moral one.