Try launching Steam from a terminal and then starting a game. The terminal output should show what goes wrong when the game launches; it might be permissions.
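
If the raw terminal output is too noisy to read, a small filter can help. Below is a minimal Python sketch, assuming `steam` is on your PATH. The keyword list and both function names are my own illustrative guesses, not anything Steam or Proton defines.

```python
import shutil
import subprocess

# Illustrative guesses only; not an official list of Steam/Proton error strings.
SUSPECT_KEYWORDS = ("error", "permission denied", "failed", "cannot open")


def find_suspect_lines(lines):
    """Return the lines that contain any suspect keyword (case-insensitive)."""
    return [
        line for line in lines
        if any(keyword in line.lower() for keyword in SUSPECT_KEYWORDS)
    ]


def run_steam_and_watch(command="steam"):
    """Start Steam as a child process and echo only the suspicious log lines."""
    if shutil.which(command) is None:
        raise FileNotFoundError(f"{command!r} not found on PATH")
    proc = subprocess.Popen(
        [command],
        stdout=subprocess.PIPE,
        stderr=subprocess.STDOUT,  # merge stderr into stdout so nothing is missed
        text=True,
    )
    for line in proc.stdout:
        if find_suspect_lines([line]):
            print(line.rstrip())
```

Call `run_steam_and_watch()`, start the game from the Steam window, and watch what gets printed. If nothing shows up, the keywords are probably too narrow, so fall back to reading the full output.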

Jenkins CI/CD

Hey, unused memory is wasted memory

People who know their shit are usually quiet and humble. People who don't know their shit act like they know their shit

I don't use one, though my phone is second hand and I charge it wirelessly. A case would make it charge worse.

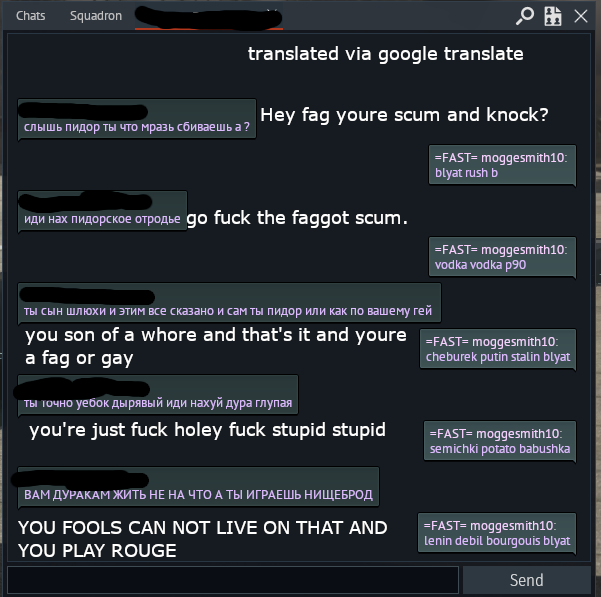

Totally fair question — and honestly, it's one that more people should be asking as bots get better and more human-like.

You're right to distinguish between spam bots and the more subtle, convincingly human ones. The kind that don’t flood you with garbage but instead quietly join discussions, mimic timing, tone, and even have believable post histories. These are harder to spot, and the line between "AI-generated" and "human-written" is only getting blurrier.

So, how do you know who you're talking to?

- Right now? You don’t.

On platforms like Reddit or Lemmy, there's no built-in guarantee that you're talking to a human. Even if someone says, “I'm real,” a bot could say the same. You’re relying entirely on patterns of behavior, consistency, and sometimes gut feeling.

- Federation makes it messier.

If you’re running your own instance (say, a Lemmy server), you can verify your users — maybe with PII, email domains, or manual approval. But that trust doesn’t automatically extend to other instances. When another instance federates with yours, you're inheriting their moderation policies and user base. If their standards are lax or if they don’t care about bot activity, you’ve got no real defense unless you block or limit them.

- Detecting “smart” bots is hard.

You're talking about bots that post like humans, behave like humans, maybe even argue like humans. They're tuned on human behavior patterns and timing. At that level, it's more about intent than detection. Some possible (but imperfect) signs:

- Slightly off-topic replies.
- Shallow engagement, like they're echoing back points without nuance.
- Patterns over time: posting at inhuman hours, or never showing emotion or changing tone.

But honestly? A determined bot can dodge most of these tells. Especially if it’s only posting occasionally and not engaging deeply.
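
The "posting at inhuman hours" tell can be sketched roughly as below. This is only an illustration: the function name, the 01:00 to 05:00 window, and the assumption that timestamps are already in the account's local time are all invented for the example, and as said above a determined bot can dodge it.

```python
from datetime import datetime


def night_post_share(timestamps, night_start=1, night_end=5):
    """Fraction of posts whose hour falls in [night_start, night_end).

    Assumes the timestamps are in the poster's local time, which in
    practice you usually cannot know for sure.
    """
    if not timestamps:
        return 0.0
    night_posts = sum(1 for t in timestamps if night_start <= t.hour < night_end)
    return night_posts / len(timestamps)


# Hypothetical post history: two posts at night, two during the day.
posts = [
    datetime(2025, 1, 1, 2, 15),
    datetime(2025, 1, 1, 3, 40),
    datetime(2025, 1, 1, 14, 5),
    datetime(2025, 1, 1, 20, 30),
]
print(night_post_share(posts))
```

A high share is at most a reason to look closer. Shift workers and people in other time zones would trip it too.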

- Long-term trust is earned, not proven.

If you’re a server admin, what you can do is:

- Limit federation to instances with transparent moderation policies.
- Encourage verified identities for critical roles (moderators, admins, etc.).
- Develop community norms that reward consistent, meaningful participation, which is hard for bots to fake over time.
- Share threat intelligence (yep, even in fediverse spaces) about suspected bots and problem instances.

- The uncomfortable truth?

We're already past the point where you can always tell. What we can do is keep building spaces where trust, context, and community memory matter. Where being human is more than just typing like one.

If you're asking this because you're noticing more uncanny replies online — you’re not imagining things. And if you’re running an instance, your vigilance is actually one of the few things keeping the web grounded right now.

/s obviously

I like popping a bag of popcorn and lurking in the Steam forums. KCD 2 was fun just for this reason.

Orange juice, about tripled in price

In the sense that the enemies in the first Doom had "AI".

Most of the Nordic sex work criminalization revolves around stopping pimps, and it's hard to argue that a service like OnlyFans, which handles and pays girls for sex work, has the same power over its users as a pimp does over his girls.

Using AI in the old sense, not the LLM sense.

That specific game does seem to have problems with Proton (https://www.protondb.com/app/9420). However, it doesn't seem to be permissions, so it's either a problem with the game itself or with the specific version of Proton you're using.