Audalin

What I've ultimately converged on, without any rigorous testing, is:

- using Q6 if it fits in VRAM+RAM (anything higher is a waste of memory and compute for barely any gain); otherwise either a small quant (rarely) or skipping the model altogether;

- not really using IQ quants - as far as I remember, they depend on a dataset, and I don't want the model's behaviour to be affected by some additional dataset;

- other than the Q6 thing, in any trade-off between speed and quality I choose quality - my usage volumes are low and I'd rather wait for a good result;

- I load as much as I can into VRAM, leaving 1-3GB for the system and context.
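As a rough illustration of the "fits in VRAM+RAM" check and the 1-3GB reserve, here is a minimal Python sketch. It assumes llama.cpp-style GGUF quants with approximate bits-per-weight figures, layers of equal size, and a hypothetical `plan_offload` helper; real files differ somewhat (metadata, some tensors kept at higher precision), and context memory grows with context length, which the fixed reserve only approximates.

```python
# Approximate bits per weight for some llama.cpp GGUF quant types (assumption:
# ballpark figures; actual file sizes vary by model architecture).
APPROX_BPW = {"Q4_K_M": 4.85, "Q5_K_M": 5.69, "Q6_K": 6.56, "Q8_0": 8.5}


def model_size_gb(n_params_billion: float, quant: str) -> float:
    """Estimated weight size in GB (1 GB = 1e9 bytes)."""
    return n_params_billion * APPROX_BPW[quant] / 8


def plan_offload(n_params_billion: float, n_layers: int, vram_gb: float,
                 ram_gb: float, reserve_gb: float = 2.0, quant: str = "Q6_K"):
    """Return how many layers to put on the GPU, or None if the model doesn't fit.

    Hypothetical helper: treats all layers as equal-sized and keeps
    `reserve_gb` of VRAM free for the system and the context.
    """
    size = model_size_gb(n_params_billion, quant)
    usable_vram = max(vram_gb - reserve_gb, 0.0)
    if size > usable_vram + ram_gb:
        return None  # doesn't fit in VRAM+RAM: pick a smaller quant or skip the model
    per_layer = size / n_layers
    # Fill VRAM with as many whole layers as fit; the rest stay in RAM.
    return min(n_layers, int(usable_vram // per_layer))


print(plan_offload(70, 80, vram_gb=24, ram_gb=64))  # → 30 (70B at Q6_K is ~57 GB)
```

In llama.cpp the resulting number is roughly what you'd pass as `--n-gpu-layers` (`-ngl`), though in practice it's worth checking actual memory use and adjusting by a layer or two.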

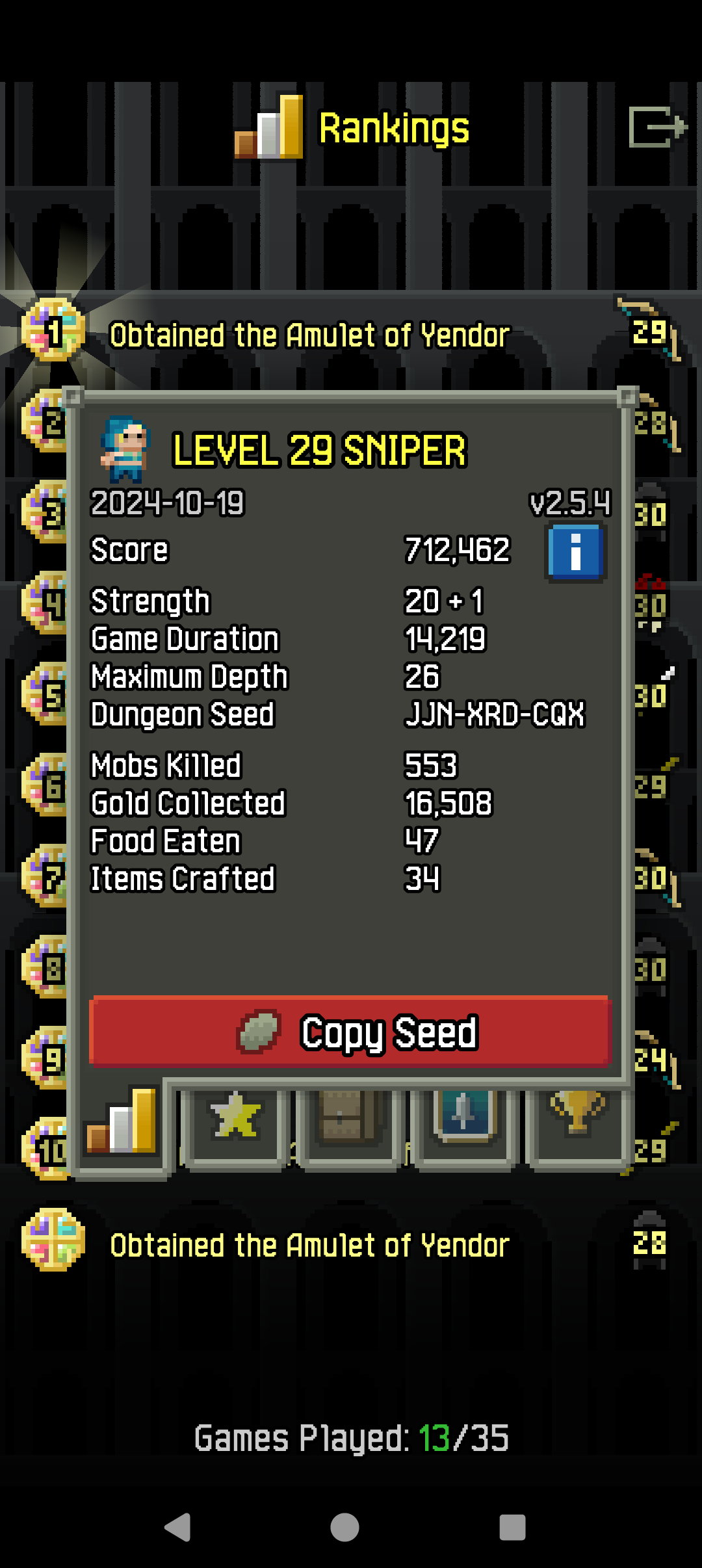

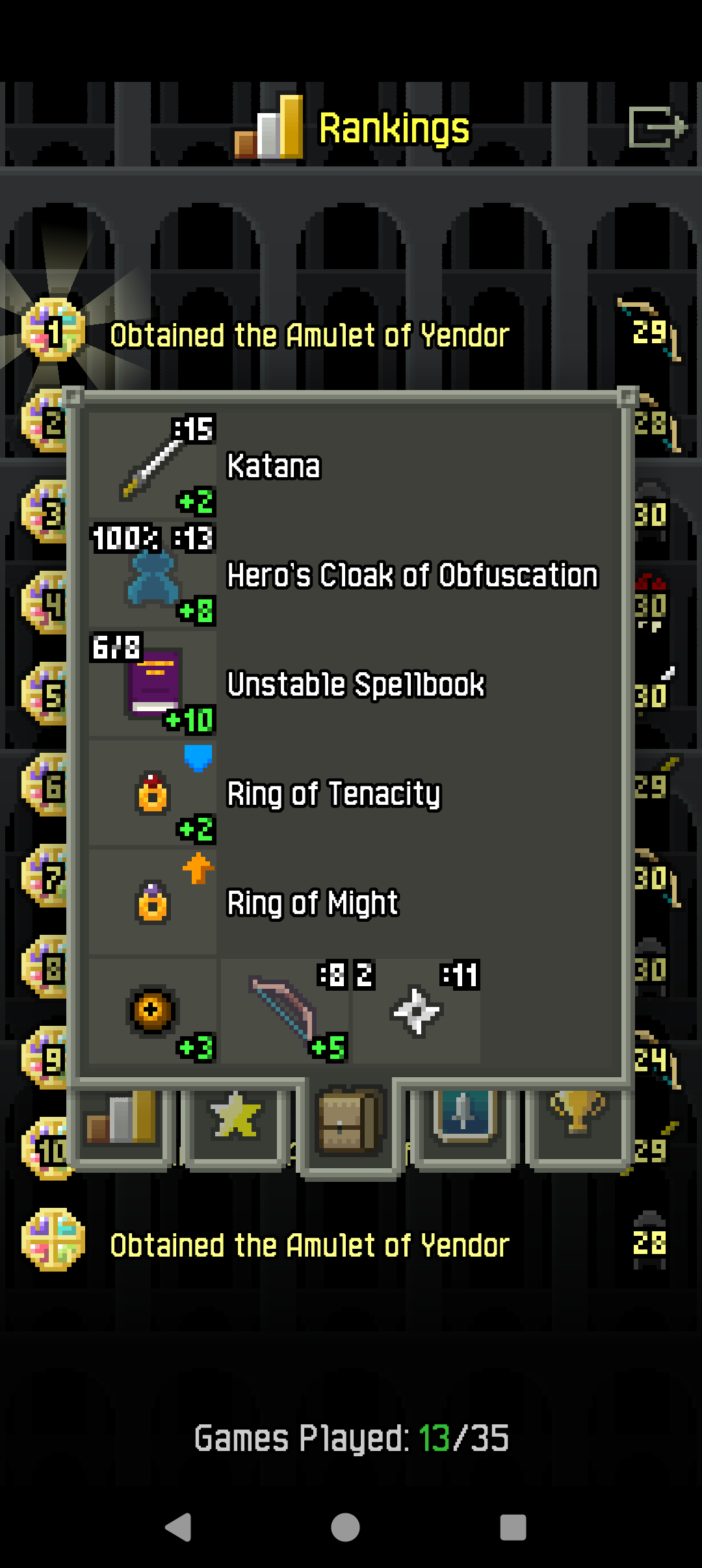

Oh, I forgot about healing wells, thanks for the reminder. You should probably be able to throw the ankh in directly too? But I don't encounter them every run (e.g. I didn't have any this one), so they aren't reliable.

I know ascending is easy (I did it many times, though only with 0-1 challenges, none of them Swarm Intelligence) and adds a 1.25 multiplier, and I'll do it when I go for that badge. But I didn't plan for it this run (I thought 6 challenges would be 2-3x harder than they turned out to be), so I wasn't prepared to ascend. I'd probably have died in the 21-24 zone.

So you think it should be On Diet? Hmm, maybe. But exploration with both On Diet and Into Darkness will be challenging.

My intuition:

- There are "genuine" instances of hapax legomena which probably carry some meaning, e.g. a rare concept, wordplay, an artistic invention, an ancient inside joke.

- There's also all kinds of noise: somebody let their cat on the keyboard, OCR software failed in one small spot, somebody copied data over a noisy channel without error correction, somebody had a headache and couldn't be bothered, whatever.

- Once a dataset is too big to be manually reviewed by experts, the amount of general noise is far, far, far larger than what you're looking for. At the same time, you can't differentiate between the two using statistics alone. And if it was manually reviewed, the experts have probably published their findings, or at least told a few colleagues.

- Transformers are VERY data-hungry. They need enormous datasets.

So I don't think this approach will help you much, even for finding words and phrases. And everything I've said extends to semantic noise too, so your extended question also seems hopeless when approached specifically with LLMs or big data analysis of text.
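To make the "can't differentiate using statistics alone" point concrete, a minimal Python sketch of the naive frequency-count approach. The tokenisation regex and the toy corpus are assumptions for illustration: a typo and a genuinely rare word come out of the count looking exactly the same.

```python
import re
from collections import Counter


def hapax_legomena(text: str) -> list[str]:
    """Words that occur exactly once in `text` (naive lowercase tokenisation)."""
    words = re.findall(r"[a-z']+", text.lower())
    counts = Counter(words)
    return sorted(w for w, c in counts.items() if c == 1)


# Toy corpus (hypothetical): "quokka" is a genuine rare word, "mta" is a typo for "mat".
corpus = ("The cat sat on the mat. The cat sat on the mta. "
          "The quokka sat on the mat.")
print(hapax_legomena(corpus))  # ['mta', 'quokka']
```

Both words have a count of 1; nothing in the frequency data says which one is noise. On a real corpus the typo-like entries vastly outnumber the interesting ones.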

Of course:

The rest of the instructions are all valid n-controlled Toffolis and Hadamards, but of course mostly Toffolis since it's replicating a classical algorithm. There is no quantum advantage, it's just a classical algorithm written in a format compatible with a quantum computer.

Add small errors to the quantum simulator (quantum computers always have those) and everything will break entirely - apparently (1) no error correction was used and (2) it's just Doom's logic gates rewritten as quantum gates. No wonder the author got bored; I'd be bored too.
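To illustrate why a circuit made mostly of Toffolis gives no quantum advantage, a minimal Python sketch: on computational-basis states an n-controlled NOT just flips the target bit when all controls are 1, so it can be simulated as ordinary reversible classical logic. The `mcx` and `half_adder` names are hypothetical, the half adder is a toy example rather than anything from the Doom port, and Hadamards (which create superpositions) are deliberately not modelled.

```python
def mcx(bits: list[int], controls: list[int], target: int) -> list[int]:
    """n-controlled NOT on a basis state: flip `target` iff every control bit is 1."""
    if all(bits[c] for c in controls):
        bits[target] ^= 1
    return bits


def half_adder(a: int, b: int) -> tuple[int, int]:
    """Reversible half adder built from a Toffoli and a CNOT; returns (sum, carry)."""
    bits = [a, b, 0]          # qubit 0 = a, qubit 1 = b, qubit 2 = carry (starts at 0)
    mcx(bits, [0, 1], 2)      # Toffoli (2 controls): carry = a AND b
    mcx(bits, [0], 1)         # CNOT (1 control): qubit 1 becomes a XOR b = sum
    return bits[1], bits[2]


for a in (0, 1):
    for b in (0, 1):
        print(a, b, half_adder(a, b))
```

Every state stays a single classical bit string throughout, which is exactly why such a circuit is just a classical algorithm in quantum notation.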

Thanks! I now see that Tai Chi, unlike yoga, is mentioned frequently online in the context of the film, so that should be right; it narrows things down.