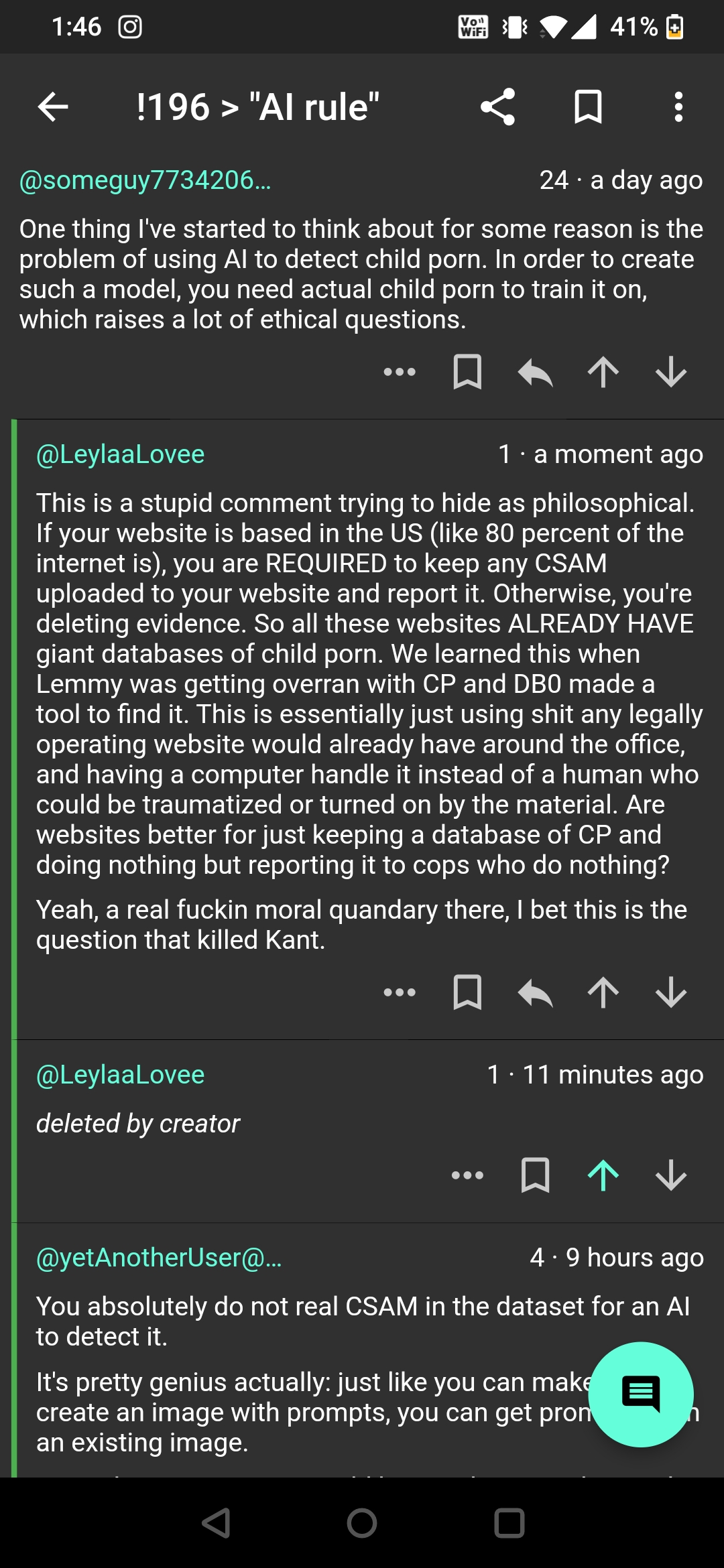

The bottom comment is technically correct: you can bypass any dataset-related ethical concerns. You could very easily make a classifier model that only tries to estimate age and combine it with one of the many existing NSFW classifiers, flagging any image that scores high on both.
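As a rough sketch of that "score high on both" rule: the snippet below assumes two separate models have already produced a score between 0 and 1 for each image. The function name, the score names, and the 0.9 thresholds are all made up for illustration, and the result is only a "queue for human review" decision, not a verdict.

```python
def needs_review(age_score: float, nsfw_score: float,
                 age_threshold: float = 0.9,
                 nsfw_threshold: float = 0.9) -> bool:
    """Return True only when BOTH hypothetical classifiers score high.

    age_score:  output of an assumed age-estimation model (0..1).
    nsfw_score: output of an assumed off-the-shelf NSFW model (0..1).
    Thresholds are illustrative placeholders, not tuned values.
    """
    return age_score >= age_threshold and nsfw_score >= nsfw_threshold


# Only the image that scores high on both models gets queued.
scores = {"a.png": (0.95, 0.97), "b.png": (0.95, 0.10), "c.png": (0.20, 0.99)}
queued = [name for name, (age, nsfw) in scores.items() if needs_review(age, nsfw)]
```

Requiring both scores to clear a threshold keeps each model's training data separate, which is the whole point of the workaround, but the combined flag inherits the errors of both models.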

But there are already organizations with large databases of CSAM that are suitable for this purpose. Using that data to train a model would not create any additional demand or cause any more harm, and it would likely result in a better model. Keep in mind that these same organizations already use these images in the form of a perceptual hash database, which social media and adult content sites can check uploaded images against to see if they are known images, without the images themselves ever being shared. This is just a different use of the data for a similar purpose.
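For anyone unfamiliar with how a perceptual hash lookup works, here is a minimal sketch. It assumes hashes are 64-bit integers compared by Hamming distance with a small tolerance; the hash values, the function names, and the `max_distance` of 4 are invented for illustration, and real services may use different hash formats and matching rules.

```python
def hamming_distance(a: int, b: int) -> int:
    """Count the bits that differ between two integer hashes."""
    return bin(a ^ b).count("1")


def matches_known(upload_hash: int, known_hashes: set[int],
                  max_distance: int = 4) -> bool:
    """True if the upload is within max_distance bits of any known hash.

    max_distance is an illustrative tolerance so that resized or
    re-encoded copies of a known image still match.
    """
    return any(hamming_distance(upload_hash, k) <= max_distance
               for k in known_hashes)


# Made-up hash values standing in for a shared database.
known = {0xF0F0F0F0F0F0F0F0, 0x123456789ABCDEF0}
near_copy = 0xF0F0F0F0F0F0F0F1   # differs from the first entry by one bit
unrelated = 0x0F0F0F0F0F0F0F0F   # differs from the first entry in every bit
```

The site doing the check only ever handles hashes, which is why the database can be shared without sharing the images.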

The only actual problem I can think of would be people trusting its outputs blindly instead of manually reviewing images, and reporting everything that scores high directly to the police. But that is more a problem with inappropriate use of the model than with the training or existence of the model. It's very safe to respond like this to images flagged by NCMEC's phash database, because those are known images, and if any false positives happen they have the originals to compare against so they can be cleared up. But even if you get a 99% accurate classifier model, you will still have something that is orders of magnitude more prone to false positives than the phash database, and it can be very difficult to find out why it generates false positives and correct the problem, because... well, it involves extensive auditing of the dataset. I don't think this is enough reason not to make such a model, but it is a reason to tread carefully when looking at how to apply it.
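To put rough numbers on the false positive problem, here is a back-of-the-envelope sketch. Every input is an assumption picked for illustration: 1,000,000 uploads, 1 in 100,000 of them actually illegal, and "99% accurate" read as 99% sensitivity and 99% specificity. None of these figures come from a real deployment.

```python
def flag_breakdown(total: int, prevalence: float,
                   sensitivity: float, specificity: float):
    """Return (true flags, false flags, share of flags that are correct)."""
    positives = total * prevalence          # images that really are illegal
    negatives = total - positives           # everything else
    true_flags = positives * sensitivity    # correctly flagged
    false_flags = negatives * (1 - specificity)  # innocent images flagged
    precision = true_flags / (true_flags + false_flags)
    return true_flags, false_flags, precision


# Hypothetical inputs, chosen only to show the base-rate effect.
true_flags, false_flags, precision = flag_breakdown(
    total=1_000_000, prevalence=1e-5, sensitivity=0.99, specificity=0.99
)
print(f"true flags: {true_flags:.0f}, false flags: {false_flags:.0f}, "
      f"share correct: {precision:.2%}")
```

Under those assumptions you get roughly 10 correct flags buried in roughly 10,000 false ones, so about one flag in a thousand is real. That is why sending every high score straight to the police would be a disaster, and why manual review has to sit between the model and any report.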