3TOFU: Verifying Unsigned Releases

By Michael Altfield

License: CC BY-SA 4.0

https://tech.michaelaltfield.net/

This article introduces the concept of "3TOFU" -- a harm-reduction process when downloading software that cannot be verified cryptographically.

|

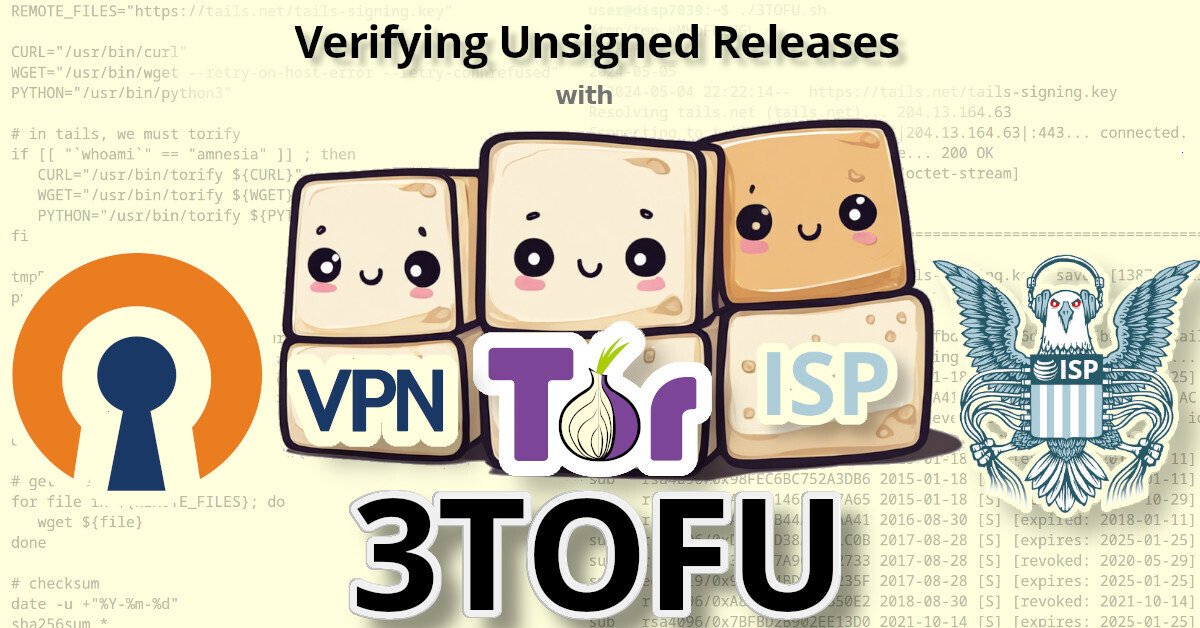

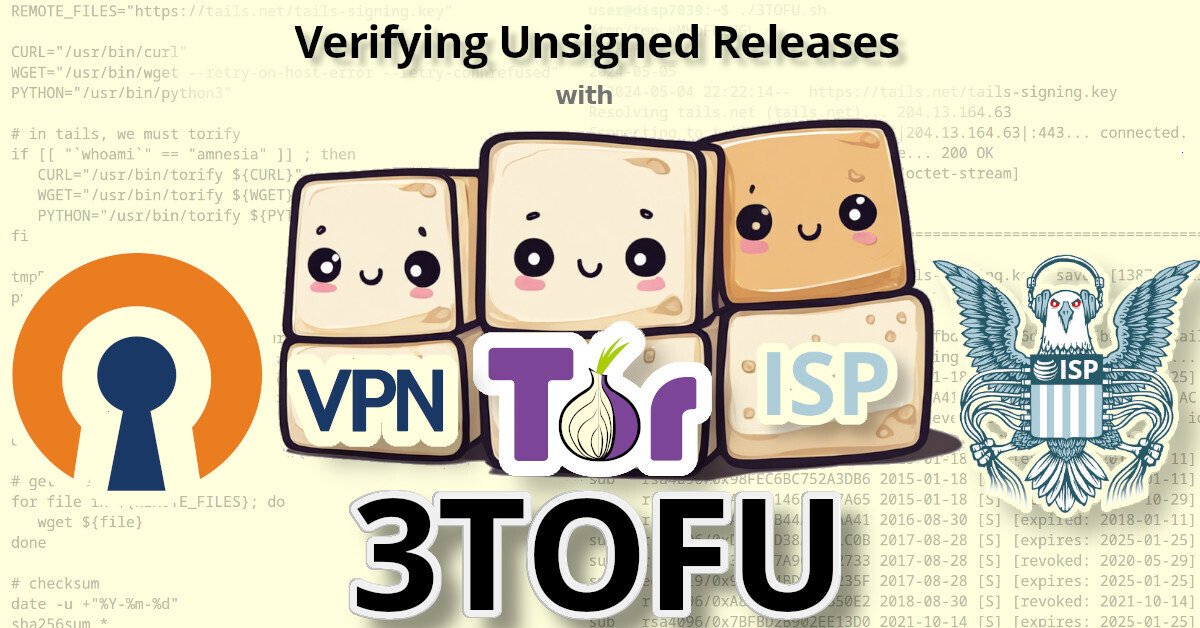

| Verifying Unsigned Releases with 3TOFU |

⚠ NOTE: This article is about harm reduction.

It is dangerous to download and run binaries (or code) whose authenticity you cannot verify (using a cryptographic signature from a key stored offline). However, sometimes we cannot avoid it. If you're going to proceed with running untrusted code, then following the steps outlined in this guide may reduce your risk.

TOFU

TOFU stands for Trust On First Use. It's an (often abused) concept of downloading a person or org's signing key and just blindly trusting it (instead of verifying it).

3TOFU

3TOFU is a process where you download something three times from three different locations. If and only if all three downloads are identical, then you trust it.

Why 3TOFU?

During the Crypto Wars of the 1990s, it was illegal to export cryptography from the United States. In 1996, after intense public pressure and legal challenges, the government officially permitted export with the 56-bit DES cipher -- which was a known-vulnerable cipher.

The EFF's Deep Crack proved DES to be insecure and pushed a switch to 3DES.

But there was a simple way to use insecure DES to make secure messages: just use it three times.

3DES (aka "Triple DES") is the process of encrypting a message using the insecure symmetric block cipher (DES) three times on each block, to produce an actually secure message (from known attacks at the time).

3TOFU (aka "Triple TOFU") is the process of downloading a payload using the insecure method (TOFU) three times, to obtain a payload that's orders of magnitude less likely to be maliciously altered.

3TOFU Process

To best mitigate targeted attacks, 3TOFU should be done:

- On three distinct days

- On three distinct machines (or VMs)

- Exiting from three distinct countries

- Exiting using three distinct networks

For example, I'll usually execute

- TOFU #1/3 in TAILS (via Tor)

- TOFU #2/3 in a Debian VM (via VPN)

- TOFU #3/3 on my daily laptop (via ISP)

The likelihood of an attacker maliciously modifying something you download over your ISP's network is quite high, depending on which country you live in.

The likelihood of an attacker maliciously modifying something you download onto a VM with a freshly installed OS over an encrypted VPN connection (routed internationally and exiting from another country) is much lower, but it is still possible -- especially for a well-funded adversary.

The likelihood of an attacker maliciously modifying something you download onto a VM running a hardened OS (like Whonix or TAILS) using a hardened browser (like Tor Browser) over an anonymizing network (like Tor) is quite low.

The likelihood of someone executing a network attack on all three downloads is very near zero -- especially if the downloads were spread out over days or weeks.

3TOFU bash Script

I provide the following bash script as an example snippet that I run for each of the 3TOFUs.

REMOTE_FILES="https://tails.net/tails-signing.key"

CURL="/usr/bin/curl"

WGET="/usr/bin/wget --retry-on-host-error --retry-connrefused"

PYTHON="/usr/bin/python3"

# in tails, we must torify

if [[ "`whoami`" == "amnesia" ]] ; then

CURL="/usr/bin/torify ${CURL}"

WGET="/usr/bin/torify ${WGET}"

PYTHON="/usr/bin/torify ${PYTHON}"

fi

tmpDir=`mktemp -d`

pushd "${tmpDir}"

# first get some info about our internet connection

${CURL} -s https://ifconfig.co/country | head -n1

${CURL} -s https://check.torproject.org/ | grep Congratulations | head -n1

# and today's date

date -u +"%Y-%m-%d"

# get the file

for file in ${REMOTE_FILES}; do

${WGET} "${file}"

done

# checksum

date -u +"%Y-%m-%d"

sha256sum *

# gpg fingerprint

gpg --with-fingerprint --with-subkey-fingerprint --keyid-format 0xlong *

Here's one example execution of the above script (on a Debian DispVM, executed with a VPN).

/tmp/tmp.xT9HCeTY0y ~

Canada

2024-05-04

--2024-05-04 14:58:54-- https://tails.net/tails-signing.key

Resolving tails.net (tails.net)... 204.13.164.63

Connecting to tails.net (tails.net)|204.13.164.63|:443... connected.

HTTP request sent, awaiting response... 200 OK

Length: 1387192 (1.3M) [application/octet-stream]

Saving to: ‘tails-signing.key’

tails-signing.key 100%[===================>] 1.32M 1.26MB/s in 1.1s

2024-05-04 14:58:56 (1.26 MB/s) - ‘tails-signing.key’ saved [1387192/1387192]

2024-05-04

8c641252767dc8815d3453e540142ea143498f8fbd76850066dc134445b3e532 tails-signing.key

gpg: WARNING: no command supplied. Trying to guess what you mean ...

pub rsa4096/0xDBB802B258ACD84F 2015-01-18 [C] [expires: 2025-01-25]

Key fingerprint = A490 D0F4 D311 A415 3E2B B7CA DBB8 02B2 58AC D84F

uid Tails developers (offline long-term identity key) <[email protected]>

uid Tails developers <[email protected]>

sub rsa4096/0x3C83DCB52F699C56 2015-01-18 [S] [expired: 2018-01-11]

sub rsa4096/0x98FEC6BC752A3DB6 2015-01-18 [S] [expired: 2018-01-11]

sub rsa4096/0xAA9E014656987A65 2015-01-18 [S] [revoked: 2015-10-29]

sub rsa4096/0xAF292B44A0EDAA41 2016-08-30 [S] [expired: 2018-01-11]

sub rsa4096/0xD21DAD38AF281C0B 2017-08-28 [S] [expires: 2025-01-25]

sub rsa4096/0x3020A7A9C2B72733 2017-08-28 [S] [revoked: 2020-05-29]

sub ed25519/0x90B2B4BD7AED235F 2017-08-28 [S] [expires: 2025-01-25]

sub rsa4096/0xA8B0F4E45B1B50E2 2018-08-30 [S] [revoked: 2021-10-14]

sub rsa4096/0x7BFBD2B902EE13D0 2021-10-14 [S] [expires: 2025-01-25]

sub rsa4096/0xE5DBA2E186D5BAFC 2023-10-03 [S] [expires: 2025-01-25]

The TOFU output above shows that the release signing key from the TAILS project is a 4096-bit RSA key with a full fingerprint of "A490 D0F4 D311 A415 3E2B B7CA DBB8 02B2 58AC D84F". The key file itself has a sha256 hash of "8c641252767dc8815d3453e540142ea143498f8fbd76850066dc134445b3e532".

When doing a 3TOFU, save the output of each execution. After collecting output from all 3 executions (intentionally spread-out over 3 days or more), diff the output.

If the checksums and fingerprints in the output of all three TOFUs match, then confidence in the file's authenticity is very high.
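A plain diff of the saved outputs will also flag lines that are expected to differ between runs (the temporary directory, the exit country, the dates and the wget timestamps). The following is a minimal sketch of one way to compare only the lines that must be identical: the sha256sum lines and the gpg fingerprint lines. The function names and the log file names are hypothetical and not part of the original script.

```bash
#!/usr/bin/env bash
# Sketch: compare the parts of three saved 3TOFU outputs that must match.
# Assumption: each run's output was saved to its own file, for example
# tofu1.log, tofu2.log and tofu3.log (hypothetical names).

# Print only the lines that should be identical in every run:
# sha256sum lines (64 hex characters, then spaces) and gpg fingerprint lines.
stable_lines() {
  grep -E '^[0-9a-f]{64} +|Key fingerprint = ' "$1" \
    | sed -E 's/^[[:space:]]+//' \
    | sort
}

# Exit status 0 only if all three logs contain such lines and they agree.
# The body runs in a subshell, so its variables do not leak to the caller.
compare_tofu_logs() (
  first="$(stable_lines "$1")"
  if [ -z "${first}" ]; then
    echo "no checksum or fingerprint lines found in $1" >&2
    exit 2
  fi
  for log in "$2" "$3"; do
    if [ "$(stable_lines "${log}")" != "${first}" ]; then
      echo "MISMATCH: ${log} differs from $1" >&2
      exit 1
    fi
  done
  echo "MATCH: checksums and fingerprints agree in all three logs"
)

# Usage (hypothetical file names):
#   compare_tofu_logs tofu1.log tofu2.log tofu3.log
```

A mismatch is a reason to stop and investigate, not to retry until the outputs agree.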

Why do 3TOFU?

Unfortunately, many developers think that hosting their releases on a server with https is sufficient to protect their users from obtaining a maliciously-modified release. But https won't protect you if:

- Your DNS or publishing infrastructure is compromised (it happens), or

- An attacker controls just one (subordinate) CA in the user's PKI root store (it happens)

Generally speaking, publishing infrastructure compromises are detected and resolved within days and MITM attacks using compromised CAs are targeted attacks (to avoid detection). Therefore, a 3TOFU verification should thwart these types of attacks.

⚠ Note on hashes: Unfortunately, many well-meaning developers erroneously think that cryptographic hashes provide authenticity. They do not -- they provide integrity.

Integrity checks are useful to detect data corrupted on download; they do not protect you from maliciously altered data unless those hashes are cryptographically signed with a key whose private key isn't stored on the publishing infrastructure.
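To make the distinction concrete, here is a minimal sketch of the two-step check for a project that does sign its hashes. It assumes the project publishes a checksum list and a detached signature (the names SHA256SUMS and SHA256SUMS.asc are hypothetical), and that the signing key is already in your gpg keyring. The function name is also hypothetical.

```bash
#!/usr/bin/env bash
# Sketch: a hash only helps after the hash list itself has been authenticated.
# Assumptions: SHA256SUMS and SHA256SUMS.asc are hypothetical file names, and
# the project's signing key has already been imported into the gpg keyring.

# Run from the directory that contains the downloaded files.
# The body runs in a subshell, so its variables do not leak to the caller.
verify_signed_checksums() (
  sums="$1"   # checksum list, e.g. SHA256SUMS
  sig="$2"    # detached signature over that list, e.g. SHA256SUMS.asc

  # Step 1, authenticity: is the checksum list signed by a key we hold?
  gpg --verify "${sig}" "${sums}" || exit 1

  # Step 2, integrity: do the downloaded files match the authenticated list?
  # --ignore-missing skips listed files that were not downloaded.
  sha256sum --check --ignore-missing "${sums}"
)

# Usage (hypothetical file names):
#   verify_signed_checksums SHA256SUMS SHA256SUMS.asc
```

Note that `gpg --verify` succeeds for a valid signature from any key in the keyring, so compare the fingerprint it prints against the one you obtained earlier (for example, via 3TOFU).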

Improvements

There are some things you can do to further improve your confidence in the authenticity of a file you download from the internet.

Distinct Domains

If possible, download your payload from as many distinct domains as possible.

An adversary may successfully compromise the publishing infrastructure of a software project, but it's far less likely for them to compromise the project website (eg 'tails.net') and their forge (eg 'github.com') and their mastodon instance (eg 'mastodon.social').
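As a rough sketch of this idea, the helpers below download the same payload from several URLs and then check that every copy has the same SHA-256 digest. The function names, the `copy-N` file names and the example.* URLs are all hypothetical; substitute the locations where the project actually publishes its release. In TAILS, curl would need to be run through torify, as in the script above.

```bash
#!/usr/bin/env bash
# Sketch: fetch one payload from several distinct domains and compare copies.
# Assumptions: function names, copy-N file names and URLs are hypothetical.

# Download each URL into its own numbered file in the current directory.
# The body runs in a subshell, so its variables do not leak to the caller.
fetch_copies() (
  i=0
  for url in "$@"; do
    i=$((i + 1))
    curl --fail --silent --show-error --location \
      --output "copy-${i}" "${url}" || exit 1
  done
)

# Succeed only if every given file has the same SHA-256 digest.
all_identical() (
  if [ "$#" -lt 2 ]; then
    echo "need at least two files to compare" >&2
    exit 2
  fi
  distinct="$(sha256sum -- "$@" | cut -d' ' -f1 | sort -u | wc -l)"
  [ "${distinct}" -eq 1 ]
)

# Usage (hypothetical URLs):
#   fetch_copies https://example.org/release.key \
#                https://example.com/project/release.key \
#                https://example.net/@project/release.key
#   all_identical copy-1 copy-2 copy-3 && echo "all copies match"
```

This complements 3TOFU rather than replacing it: all of these downloads still leave from one machine over one network.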

Use TAILS

TAILS is by far the best OS to use for security-critical situations.

If you are a high-risk target (investigative journalist, activist, or political dissident) then you should definitely use TAILS for one of your TOFUs.

Signature Verification

It's always better to verify the authenticity of a file using cryptographic signatures than with 3TOFU.

Unfortunately, some companies like Microsoft don't sign their releases, so the only option to verify the authenticity of something like a Windows .iso is with 3TOFU.

Still, whenever you encounter some software that is not signed using an offline key, please do us all a favor and create a bug report asking the developer to sign their releases with PGP (or minisign or signify or something).

4TOFU

3TOFU is easy because Tor is free and most people have access to a VPN (corporate or commercial or an ssh socks proxy).

But, if you'd like, you could also add i2p or some other proxy network into the mix (and do 4TOFU).