
If you had asked DeepSeek’s R1 open-source large language model just four months ago to list out China’s territorial disputes in the South China Sea, a highly sensitive issue for the country’s Communist Party leadership, it would have responded in detail, even if its responses subtly tugged you towards a sanitized official view.

Ask the same question today of the latest update, DeepSeek-R1-0528, and you’ll find the model is more tight-lipped, and far more emphatic in its defense of China’s official position. “China’s territorial sovereignty and maritime rights and interests in the South China Sea are well grounded in history and jurisprudence,” it begins before launching into fulsome praise of China’s peaceful and responsible approach.

[...]

The pattern of increasing template responses suggests DeepSeek has increasingly aligned its products with the demands of the Chinese government, becoming another conduit for its narratives. That much is clear.

But that the company is moving in the direction of greater political control even as it creates globally competitive products points to an emerging global dilemma with two key dimensions. First, as cutting-edge models like R1-0528 spread globally, bundled with systematic political constraints, this has the potential to subtly reshape how millions understand China and its role in world affairs. Second, as they skew more strongly toward state bias when queried in Chinese as opposed to other languages (see below), these models could strengthen and even deepen the compartmentalization of Chinese cyberspace — creating a fluid and expansive AI firewall.

[...]

In a recent comparative study (data here), SpeechMap.ai ran 50 China-sensitive questions through multiple Chinese Large Language Models (LLMs). It did this in three languages: English, Chinese and Finnish, this last being a third-party language designated as a control [...]

- First, there seems to be a complete lack of subtlety in how the new model responds to sensitive queries. While the original R1, which we first tested back in February, applied more subtle propaganda tactics, such as withholding certain facts, avoiding the use of certain sensitive terminologies, or dismissing critical facts as “bias,” the new model responds with what are clearly pre-packaged Party positions.

We were told outright in responses to our queries, for example, that “Tibet is an inalienable part of China” (西藏是中国不可分割的一部分), that the Chinese government is contributing to the “building of a community of shared destiny for mankind” (构建人类命运共同体) and that, through the leadership of CCP General Secretary Xi Jinping, China is “jointly realizing the Chinese dream of the great rejuvenation of the Chinese nation” (共同实现中华民族伟大复兴的中国梦).

Template responses like these suggest DeepSeek models are now being standardized on sensitive political topics, the direct hand of the state more detectable than before.

[...]

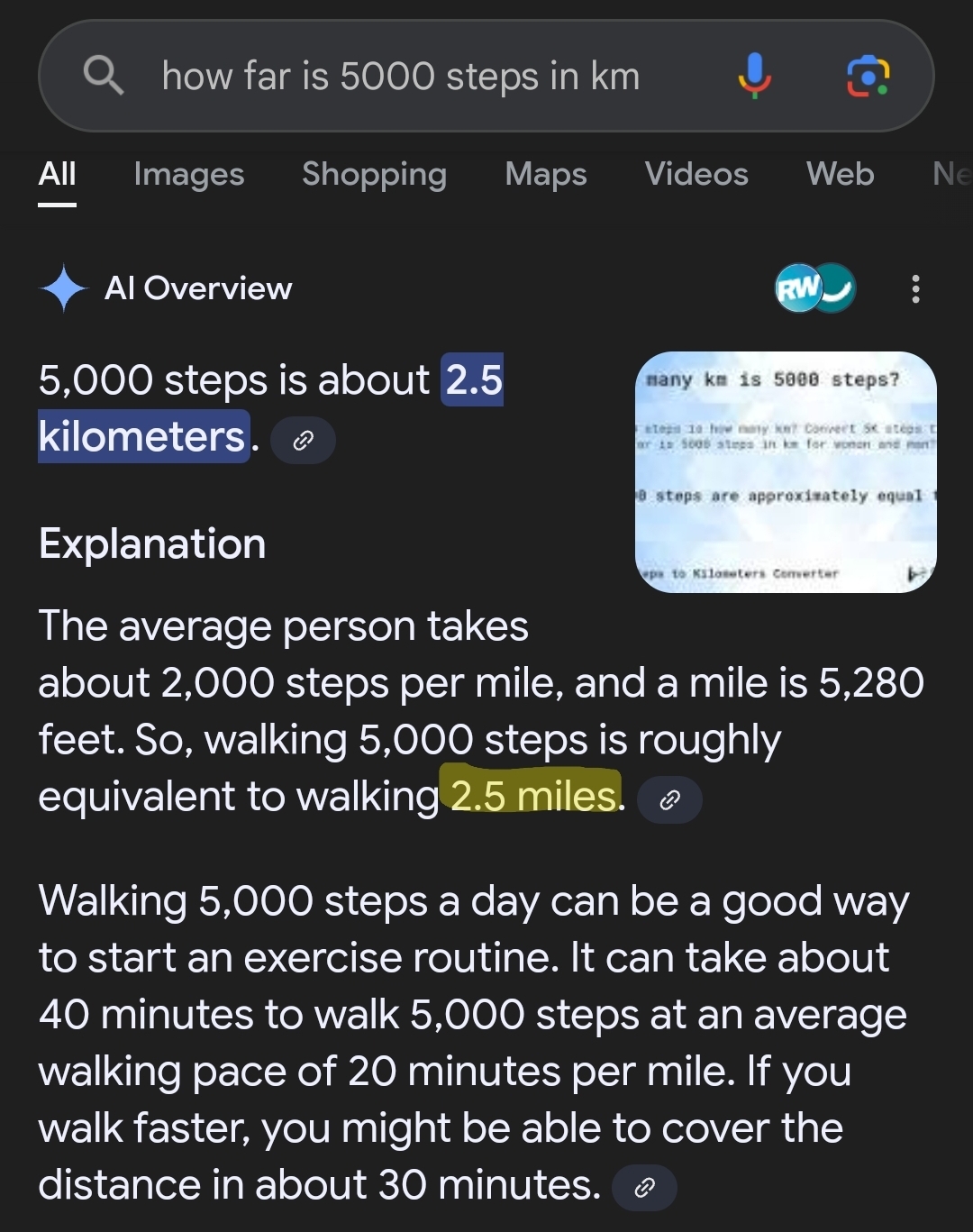

- The second change we noted was the increased volume of template responses overall. Whereas DeepSeek’s V3 base model, from which both R1 and R1-0528 were built, was able back in December to provide complete answers (in green) 52 percent of the time when asked in Chinese, that shrank to 30 percent with the original version of R1 in January. With the new R1-0528, that is now just two percent — just one question, in other words, receiving a satisfactory answer — while the overwhelming majority of queries now receive an evasive answer (yellow).
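The percentages above can be read back as question counts. The following is a minimal sketch, assuming each share refers to the same set of 50 Chinese-language questions described in the study; only the one-question figure for R1-0528 is stated in the text, and the other counts are derived under that assumption. The names `TOTAL_QUESTIONS`, `complete_share_pct` and `share_to_count` are illustrative and do not come from SpeechMap.ai.

```python
# Assumption: every percentage refers to the same 50 Chinese-language questions.
TOTAL_QUESTIONS = 50

# Share of complete answers when asked in Chinese, as reported in the text.
complete_share_pct = {
    "V3 (December)": 52,
    "R1 (January)": 30,
    "R1-0528": 2,
}


def share_to_count(percent: int, total: int = TOTAL_QUESTIONS) -> int:
    """Convert a percentage share into a whole number of questions."""
    return round(percent * total / 100)


# Derived counts of complete answers per model (hypothetical helper output).
complete_counts = {
    model: share_to_count(pct) for model, pct in complete_share_pct.items()
}

for model, count in complete_counts.items():
    print(f"{model}: {count} of {TOTAL_QUESTIONS} complete answers")
```

Under that assumption, the two percent reported for R1-0528 corresponds to the single question mentioned in the text.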

That trust [of China’s political leaders that the company and its CEO, Liang Wenfeng (梁文锋), have gained], as has ever been the case for Chinese tech companies, is won through compliance with the leadership’s social and political security concerns.

[...]

The language barrier in how R1-0528 operates may be the model’s saving grace internationally — or it may not matter at all. SpeechMap.ai’s testing revealed that language choice significantly affects which questions trigger template responses. When queried in Chinese, R1-0528 delivers standard government talking points on sensitive topics. But when the same questions are asked in English, the model remains relatively open, even showing slight improvements in openness compared to the original R1.

This linguistic divide extends beyond China-specific topics. When we asked R1-0528 in English to explain Donald Trump’s grievances against Harvard University, the model responded in detail. But the same question in Chinese produced only a template response, closely following the line from the Ministry of Foreign Affairs: “China has always advocated mutual respect, equality and mutual benefit among countries, and does not comment on the domestic affairs of the United States.” Similar patterns emerged for questions.

[...]

Yet this language-based filtering has limits. Some Chinese government positions remain consistent across languages, particularly territorial claims. Both R1 versions give template responses in English about Arunachal Pradesh, claiming the Indian-administered territory “has been an integral part of China since ancient times.”

[...]

The unfortunate implications of China’s political restraints on its cutting-edge AI models on the one hand, and their global popularity on the other, could be two-fold. First, to the extent that they do embed levels of evasiveness on sensitive China-related questions, they could, as they become foundational infrastructure for everything from customer service to educational tools, subtly shape how millions of users worldwide understand China and its role in global affairs. Second, even if China’s models perform strongly, or decently, in languages other than Chinese, we may be witnessing the creation of a linguistically stratified information environment where Chinese-language users worldwide encounter systematically filtered narratives while users of other languages access more open responses.

[...]

The Chinese government’s actions over the past four months suggest this trajectory of increasing political control will likely continue. The crucial question now is how global users will respond to these embedded political constraints — whether market forces will compel Chinese AI companies to choose between technical excellence and ideological compliance, or whether the convenience of free, cutting-edge AI will ultimately prove more powerful than concerns about information integrity.