404 Media

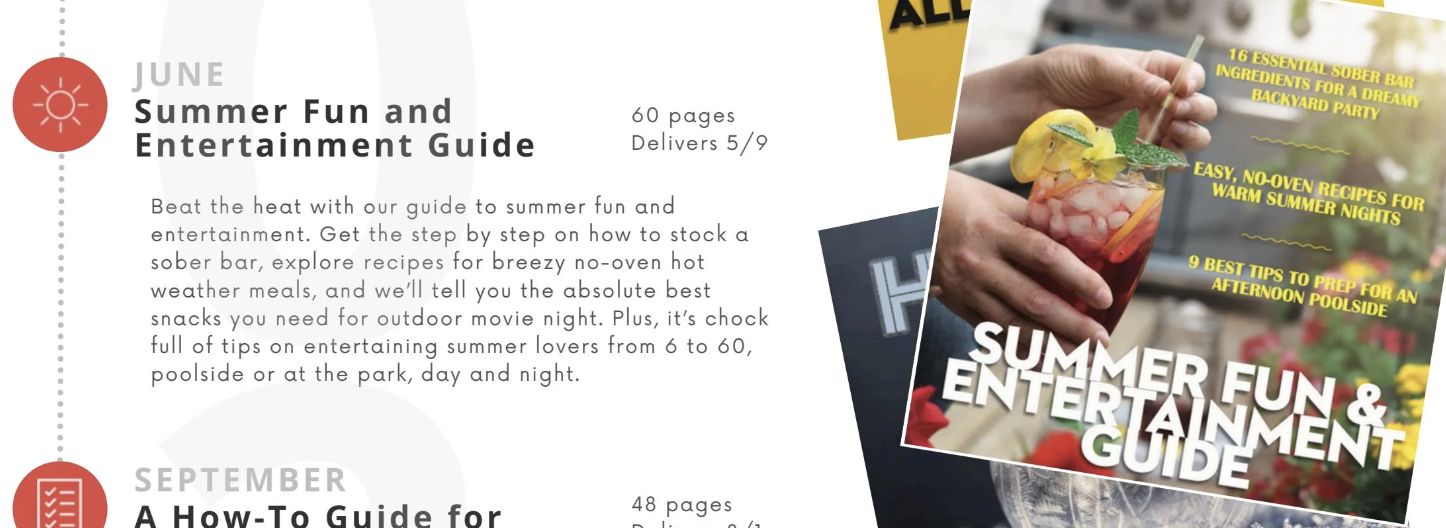

404 Media is a new independent media company founded by technology journalists Jason Koebler, Emanuel Maiberg, Samantha Cole, and Joseph Cox.

Don't post archive.is links or the full text of articles; you will receive a temp ban.

Schools, parents, police, and existing laws are not prepared to deal with the growing problem of students and minors using generative AI tools to create child sexual abuse material of their peers, according to a new report from researchers at Stanford Cyber Policy Center.

The report, which is based on public records and interviews with NGOs, internet platforms staff, law enforcement, government employees, legislators, victims, parents, and groups that offer online training to schools, found that despite the harm that nonconsensual content causes, the practice has been normalized by mainstream online platforms and certain online communities.

“Respondents told us there is a sense of normalization or legitimacy among those who create and share AI CSAM,” the report said. “This perception is fueled by open discussions in clear web forums, a sense of community through the sharing of tips, the accessibility of nudify apps, and the presence of community members in countries where AI CSAM is legal.”

The report says that while children may recognize that AI-generating nonconsensual content is wrong, they can assume “it’s legal, believing that if it were truly illegal, there wouldn’t be an app for it.” The report, which cites several 404 Media stories about this issue, notes that this normalization is in part a result of many “nudify” apps being available on the Google and Apple app stores, and that their ability to AI-generate nonconsensual nudity is openly advertised to students on Google and social media platforms like Instagram and TikTok. One NGO employee told the authors of the report that “there are hundreds of nudify apps” that lack basic built-in safety features to prevent the creation of CSAM, and that even as an expert in the field he regularly encounters AI tools he’s never heard of, but that on certain social media platforms “everyone is talking about them.”

The report notes that while 38 U.S. states now have laws about AI CSAM and the newly signed federal Take It Down Act will further penalize AI CSAM, states “failed to anticipate that student-on-student cases would be a common fact pattern. As a result, that wave of legislation did not account for child offenders. Only now are legislators beginning to respond, with measures such as bills defining student-on-student use of nudify apps as a form of cyberbullying.”

One law enforcement officer told the researchers how accessible these apps are. “You can download an app in one minute, take a picture in 30 seconds, and that child will be impacted for the rest of their life,” they said.

One student victim interviewed for the report said that she struggled to believe that someone actually AI-generated nude images of her when she first learned about them. She knew other students used AI for writing papers, but was not aware people could use AI to create nude images. “People will start rumors about anything for no reason,” she said. “It took a few days to believe that this actually happened.”

Another victim and her mother interviewed for the report described the shock of seeing the images for the first time. “Remember Photoshop?” the mother asked, “I thought it would be like that. But it’s not. It looks just like her. You could see that someone might believe that was really her naked.”

One victim, whose original photo was taken from a non-social media site, said that someone took it and “ruined it by making it creepy [...] he turned it into a curvy boob monster, you feel so out of control.”

In an email to school staff, one victim said “I was unable to concentrate or feel safe at school. I felt very vulnerable and deeply troubled. The investigation, media coverage, meetings with administrators, no-contact order [against the perpetrator], and the gossip swirl distracted me from school and class work. This is a terrible way to start high school.”

One mother of a victim the researchers interviewed for the report feared that the images could crop up in the future, potentially affecting her daughter’s college applications, job opportunities, or relationships. “She also expressed a loss of trust in teachers, worrying that they might be unwilling to write a positive college recommendation letter for her daughter due to how events unfolded after the images were revealed,” the report said.

💡Has AI-generated content been a problem in your school? I would love to hear from you. Using a non-work device, you can message me securely on Signal at emanuel.404. Otherwise, send me an email at [email protected].

In 2024, Jason and I wrote a story about how one school in Washington state struggled to deal with its students using a nudify app on other students. The story showed how teachers and school administration weren’t familiar with the technology, and initially failed to report the incident to the police even though it legally qualified as “sexual abuse” and school administrators are “mandatory reporters.”

According to the Stanford report, many teachers lack training on how to respond to a nudify incident at their school. A Center for Democracy and Technology report found that 62% of teachers say their school has not provided guidance on policies for handling incidents involving authentic or AI nonconsensual intimate imagery. A 2024 survey of teachers and principals found that 56 percent did not get any training on “AI deepfakes.” One provider told the authors of the report that while many schools have crisis management plans for “active shooter situations, they had never heard of a school having a crisis management plan for a nudify incident, or even for a real nude image of a student being circulated.”

The report makes several recommendations to schools, like providing victims with third-party counseling services and academic accommodations, drafting language to communicate with the school community when an incident occurs, ensuring that students are not discouraged or punished for reporting incidents, and contacting the school’s legal counsel to assess the school’s legal obligations, including its responsibility as a “mandatory reporter.”

The authors also emphasized the importance of anonymous tip lines that allow students to report incidents safely. The report cites two incidents that were initially discovered this way: one in Pennsylvania, where a student used the state’s Safe2Say Something tipline to report that students were AI-generating nude images of their peers, and another at a school in Washington that first learned about a nudify incident through a submission to the school’s harassment, intimidation, and bullying online tipline.

One provider of training to schools emphasized the importance of such reporting tools, saying, “Anonymous reporting tools are one of the most important things we can have in our school systems,” because many students lack a trusted adult they can turn to.

Notably, the report does not take a position on whether schools should educate students about nudify apps because “there are legitimate concerns that this instruction could inadvertently educate students about the existence of these apps.”

From 404 Media via this RSS feed

If you’ve left a comment on a YouTube video, a new website claims it might be able to find every comment you’ve ever left on any video you’ve ever watched. Then an AI can build a profile of the commenter and guess where you live, what languages you speak, and what your politics might be.

The service is called YouTube-Tools and is just the latest in a suite of web-based tools that started life as a site to investigate League of Legends usernames. Now it uses a modified large language model created by the company Mistral to generate a background report on YouTube commenters based on their conversations. Its developer claims it's meant to be used by the cops, but anyone can sign up. It costs about $20 a month to use and all you need to get started is a credit card and an email address.

The tool presents a significant privacy risk, and shows that people may not be as anonymous in the YouTube comments sections as they may think. The site’s report is ready in seconds and provides enough data for an AI to flag identifying details about a commenter. The tool could be a boon for harassers attempting to build profiles of their targets, and 404 Media has seen evidence that harassment-focused communities have used the developers' other tools.

YouTube-Tools also appears to be a violation of YouTube’s privacy policies, and raises questions about what YouTube is doing to stop the scraping and repurposing of people’s data like this. “Public search engines may scrape data only in accordance with YouTube's robots.txt file or with YouTube's prior written permission,” it says.

To test the service, I plugged a random YouTube commenter into the system and within seconds the site found dozens of comments on multiple videos and produced an AI-generated paragraph about them. “Possible Location/Region: The presence of Italian language comments and references to ‘X Factor Italia’ and Italian cooking suggest an association with Italy,” the report said.

“Political/Social/Cultural Views: Some comments reflect a level of criticism towards interviewers and societal norms (e.g., comments on masculinity), indicating an engagement with contemporary cultural discussions. However, there is no overtly political stance expressed,” it continued.

According to the site, it has access to “1.4 billion users & 20 billion comments.” The dataset is not complete; YouTube has more than 2.5 billion users.

YouTube-Tools launched about a week ago and is an outgrowth of LoL-Archiver. There’s also nHentai-Archiver, which can give you a comprehensive comment history of a user on the popular adult manga sharing site. Kick-Tools can produce the chat history or ban history of a user on the streaming site Kick. Twitch-Tools can give you the chat history for an account sorted by timestamp and sortable by all the channels they interact on.

Twitch-Tools only monitors a channel that users have specifically requested it to monitor. As of this writing, the website says it is monitoring 39,057 Twitch channels. For example, I was able to pull a username from a popular Twitch stream, plug it into the tool and then track every time that user had made a comment on another one of the tracked channels.

Reached for comment, the developer of these tools didn’t dance around the reason they built them. “The end goal of people tracking Twitch channels would certainly be to gather information on specific users,” they said.

Twitch did not respond to 404 Media’s request for comment, and YouTube acknowledged a request but did not provide a statement in time for publication. But I spoke with someone in control of a contact email address listed on the LoL-Archiver’s “about” page. They said they’re based in Europe, have a background in OSINT, and often partnered with law enforcement in their country. “I decided I launched [sic] these tools in the first place as a project to build the tool that could be use by LEAs [law enforcement agencies] and PIs [private investigators.]”

According to the developer, they’ve provided the tool to cops in Portugal, Belgium, and “other countries in Europe.” They told 404 Media that the website is meant for private investigators, journalists, and cops.

“To prevent abuses [sic] we only allow the website to people with legitimate purposes,” they said. I asked how the site vets users. “We ask the users to accept our Terms of Use and do targeted KYC [know your customer] requests to people we estimate have an illegitimate reason to use our website. If we find that a user doesn't have a legitimate purpose to use our service according to our terms of use, we reserve the right to terminate that user's access to our website.”

The site’s Terms of Service makes this explicit in the first paragraph. “The Service is distributed only to licensed professional investigators and law enforcement. Non-professional individuals are not allowed to subscribe to the Service,” it says.

But YouTube-Tools is a “grant access first ask for proof later” kind of website. 404 Media was able to set up an account and begin browsing information in minutes after paying for a month of the service with a credit card. It didn’t ask me any questions about how I planned to use the service nor did it need any other information about me.

I asked the developer for an example of a time they had removed someone from the platform. They said they’d removed a client a few weeks ago after they realized the email the client used to obtain their license was “temporary.” The developer said they reached out to the client to ask why they wanted the tool and didn’t get a response. “They ignored us, and we therefore reported the issue to Stripe and terminated their access.”

The AI summaries are new and only exist for the YouTube tools. “The AI summary is to provide points of interest, so that an investigator doesn't have to go through the (potentially) thousand [sic] of comments,” the developer said. “This summary is not to replace the research and investigation process of the investigator, but to give clues on where they can start looking at first.”

I asked them about the possible privacy violations the tool presents and the developer acknowledged that they’re real. “But we try to limit them during [our] vetting process,” they said. Again, I was able to sign up for the site with a credit card and an email. I was not vetted.

“I also believe that the tool can be a very valuable source of information for professionals such as police agencies, private investigators, journalists,” the developer said. “That is why we currently offer free access to police agencies requesting it, and have offered [it] to several agencies already. If someone wants to remove any information that the tools has archived they can make a formal request to us, to which we will comply, as we've always done.”

Scraping public data is a big problem. Last month, researchers in Brazil published a dataset built from 2 billion Discord messages they’d pulled from publicly available servers. Last year, Discord shut down a service called Spy Pet that’s similar to YouTube-Tools.

From 404 Media via this RSS feed

Content warning: This article contains descriptions of sexual harassment.

Judd Stone, the former Solicitor General of Texas, resigned from his position in 2023 following sexual harassment complaints from colleagues in which he allegedly discussed “a disturbing sexual fantasy [he] had about me being violently anally raped by a cylindrical asteroid in front of my wife and children,” according to documents filed this week as part of a lawsuit against Stone.

“Judd publicly described this in excruciating detail over a long period of time, to a group of Office of Attorney General employees,” an internal letter written by Brent Webster, the first assistant attorney general of Texas, about the incident reads. The lawsuit was first reported by Bloomberg Law.

From 404 Media via this RSS feed

So, you’ve got a receding hairline in 2025. You could visit a dermatologist, sure, or you could try a new crop of websites that will deliver your choice of drugs on demand after a video call with a telehealth physician. There’s Rogaine and products from popular companies like Hims, or if you have an appetite for the experimental, you might find yourself at Anagen.

Anagen works a lot like Hims (some of its physicians have even worked there, according to their LinkedIn profiles and the Hims website), but take a closer look at the drugs on offer and you’ll start to notice the difference. Its Growth Maxi formula, which sells for $49.99 per month, contains Finasteride and Minoxidil, two drugs that are in Hims’ hair regrowth products. But it also contains Liothyronine, a thyroid medication also known as T3 that the Mayo Clinic warns may temporarily cause hair loss if taken orally. Keep reading and you’ll see Latanoprost, a glaucoma drug. Who came up with this stuff anyway?

The group behind the Anagen storefront and products it sells is HairDAO, a “decentralized autonomous organization” founded in 2023 by New York-based cryptocurrency investors Andrew Verbinnen and Andrew Bakst. HairDAO aims to harness the efforts of legions of online biohackers already trying to cure their hair loss with off-label drugs. Verbinnen and Bakst’s major innovation is to inject cash into this scenario: DAO participants are incentivized with crypto tokens they earn by contributing to research, or uploading blood work to an app.

DAOs have been a locus for some of the more out-there activities in the crypto space over the years. Not only are they vehicles for profit if their tokens appreciate in value, but token-holders vote on group decisions. This gives many DAOs an upstart, democratic flavor. For example, ConstitutionDAO infamously tried—and ultimately failed—to buy an original copy of the US Constitution and turn it into a financial asset. HairDAO exists in a subset of this culture called DeSci (decentralized science), which includes DAOs dedicated to funding research on everything from longevity to monetizing your DNA.

Depending on who you ask, it’s either the best thing to happen to hair loss research in decades, or far from it. “They're telling the world, hey, this works,” says a hair loss YouTuber who goes by KwRx and who has arguably been HairDAO’s loudest online critic. “It’s a recipe for disaster.”

HairDAO has turned self-experimentation by its DIY hair loss scientists into research being run in conjunction with people like Dr. Claire Higgins, a researcher at Imperial College London, as well as at its own lab. And, ultimately, into products sold via Anagen. It also sells an original shampoo formula called FolliCool for $49.95 per 200 ml bottle.

“The best hair loss researchers are basically anons on the internet,” Bakst said on a recent podcast appearance. “Of the four studies that we've run at universities, two of the four were fully designed by anons in our Discord server. And then, now that we have our own lab, all the studies we're running there are designed by anons in our Discord server.”

Dan, who asked to remain anonymous, is just another person on the internet trying to cure their hair loss. He’s experimented by adding melatonin to topical Minoxidil, he says, and he claims he has experienced “serious, lasting side effects” from Finasteride.

One day, he came across the HairDAO YouTube channel, where interviews with researchers like Dr. Ralf Paus from the University of Miami’s Miller School of Medicine immediately appealed to him.

“These were very interesting, offering deep insights into hair loss—much better than surface-level discussions you typically find on Reddit,” Dan says over Discord.

To him, it seemed like HairDAO brought a level of rigor to the freewheeling online world of DIY hair loss biohacking, where “group buys” of off-label drugs from overseas are a longstanding practice. If you’ve heard of the real-life Dallas Buyers Club of the 1980s, where AIDS patients pooled funds to buy experimental treatments, then you get the idea.

“People have become more skeptical and smarter about these things, realizing the importance of proper research, scientific methods, and evidence,” says Dan. “That’s where HairDAO comes in. I hope it succeeds because it could channel the energy behind the ‘biohacking’ spirit and transform it into something useful.”

There is no better example of this ideal than Jumpman, a pseudonymous researcher referred to in Hair Cuts, a digital magazine HairDAO publishes to update members on progress, as their “king,” “lead researcher,” “lord and savior,” and by Verbinnen as “the best hair loss researcher by a wide margin.” He earned thousands of crypto tokens with his contributions and is credited with pushing HairDAO to look at TWIST-1 and PAI-1, proteins that are implicated in different cancers, to search for new treatments that inhibit their expression.

One much-discussed drug is TM5441, a PAI-1 inhibitor that has been investigated as a treatment for cancer as well as for lowering blood pressure. It’s often called “TM” by Discord members.

“Bullish on TM,” Bakst says in a May 2023 Discord exchange.

“Yeah your blood may have trouble clotting,” he says, acknowledging the potential side effects. “Don't ride motorcycles if you're taking it haha.” Despite this, he’s engaged with users about how they should use it on themselves.

“I’d think it may be best to apply [TM] topically vs orally, just based on ability to target locally more frequently,” he says in an April 2024 Discord exchange with a user who was debating “upping the doses” of the drug, thinking it could be “a good hack.” Bakst added, “~not medical advice~.”

Discussion of group buys isn’t allowed in the HairDAO Discord. When one user brought up the topic in August last year, Verbinnen chimed in, “None of this here.” But one risk that comes with funding anonymous internet researchers experimenting with unproven drugs is that they might not play by the rules.

In messages pulled from a now-deleted Telegram channel seen by 404 Media, Jumpman discusses buying over half a kilogram of TM6541—another PAI-1 inhibitor—and says that the drug “will be ready in 6 weeks.” Jumpman also shares photos showing bags of pill bottles and says, “these are shipping out next week.” The labels on the bottles aren’t readable, and 404 Media can’t confirm if they actually shipped. Jumpman could not be reached for comment. It’s not clear whether the Telegram chat was officially linked to HairDAO, but it included HairDAO members other than Jumpman. In another Telegram message, a user says, “Guys, stop using TM, I found blood in the semen, after [several] tests, the doctor said it’s due to [blood pressure medications], careful.”

“Maybe you were taking too much TM to cause internal bleeding,” Jumpman responds.

Dan says this exchange didn’t worry him at the time. “The ‘blood in his semen’ thing happened to me once as well but I was not on any medications and [the] doctor told me it can happen sometimes and it’s not dangerous,” he says. “So I am hopeful that [the user] is alright, and that it resolved quickly, and that whatever he experimented with didn’t hurt him... does it concern or worry me personally? Not really because I don’t plan to use TM.”

Indeed, according to the Mayo Clinic, blood in semen—a condition known as hematospermia—most often goes away on its own, without any treatment. The Cleveland Clinic adds that it’s usually not a sign of a serious health problem and could be caused by a blood vessel bursting while masturbating, like blowing your nose too hard. Both organizations recommend consulting a doctor.

Jumpman may have actually been on to something with his focus on PAI-1 in particular. Douglas Vaughan is the director of the Potocsnak Longevity Institute at Northwestern University. PAI-1 inhibition is a longtime focus of his research. He has studied Amish populations in Indiana, for example, because of a mutation that inhibits PAI-1 and may protect against different effects of aging. He’s also investigated PAI-1, and TM5441, for hair loss—completely by accident.

“We were thinking, well, someday somebody's going to want to make a drug that blocks PAI-1. Why don't we make a mouse that makes too much of it?” Vaughan tells 404 Media. After engineering the mice, chock full of human PAI-1, he noticed something unexpected.

“Those mice were bald,” he says. He began working with Toshio Miyata, a professor at Tohoku University in Japan, who convinced Vaughan to try the drug TM5441 on the mice.

“He sent me a drug that was called TM5441, and we simply put it in the chow of our transgenic mice. We fed it to them for several weeks, and lo and behold, they started growing hair. I said, well, how about that?” he says.

But, he cautioned, people shouldn’t try TM5441 on themselves to cure their hair loss. “I think it’s foolish,” says Vaughan. “There are all kinds of reasons why you might take a drug or not, but usually you want to go through the regulatory steps to see that it's proven to be safe and effective.”

While TM has been much-discussed by HairDAO members, and it’s currently listed as a “treatment” on its online portal for people to discuss treatments and upload bloodwork, it isn’t named as a drug that HairDAO is formally investigating or sold to the public by Anagen. Vaughan says he was contacted by the group over a year ago, but a research partnership never materialized. Today, the group is pushing forward with investigating different drugs inhibiting TWIST-1 instead.

“In general, if you're an individual person and you're experimenting on yourself, that is frequently outside the scope of regulation,” says Patricia Zettler, an associate professor at The Ohio State University Moritz College of Law who previously served as Deputy General Counsel to the U.S. Department of Health & Human Services (HHS).

“Where biohacking activities tend to intersect with existing regulatory regimes, whether at the federal level or the state level, is when people start giving drugs, selling drugs, or distributing drugs to other people,” she adds.

It’s unclear how much interaction HairDAO has had with regulatory bodies. Messages posted in Discord reference FDA consultants and gathering materials to submit to the agency.

Last year, the YouTuber KrWx created a series of videos and Substack posts airing his concerns with HairDAO’s DIY approach, generally labelling it dangerous and possibly illegal. He received a cease and desist letter from the group’s lawyers, seen by 404 Media, calling his claims false and defamatory. The merit of KrWx’s claims aside, his spotlight kicked off major shifts in the DAO’s Discord.

For one, Jumpman disappeared.

Andrew Bakst sits wearing a white lab coat, blue-gloved hands holding testing equipment. He looks at the camera. “PCR,” he says. “...PCR.” The cameraperson, a Discord user who uploaded the video in early April, laughs. “Got to repeat shit when we’re in the lab late at night.”

This New York-based lab space, opened in November, is where much of HairDAO’s latest work happens—already a far cry from the Jumpman era, just a few months after he vanished. The group is currently pursuing preclinical testing on three different protein targets and drugs, and claims to have filed for six patents. This work includes, for example, testing drugs on mouse skin.

“We also tested drug penetration on dry versus damp mouse skin,” Verbinnen wrote in an April Discord message, adding that "drugs penetrate damp skin much more than dry skin at least in the mouse model."

HairDAO has even run a human trial for T3, the thyroid drug that it sells via Anagen, involving six patients including Verbinnen and Bakst. In that trial, the participants were given a topical ointment to apply to their scalps, and the hair growth results were measured at the end of a year-long period. That research resulted in a preprint paper, which is available online.

“It is important to note that this study involved only six participants, which is a small sample size,” a disclaimer on the study included in an update for DAO members explains. “As such, we make no claims about the safety or efficacy of topical T3 based on these results.”

The Anagen listings for its T3 formulations promise “outstanding” and “maximum” results.

HairDAO conducts this work in collaboration with a handful of accredited researchers. The group says the T3 trial was “overseen” by Dr. Richard Powell, a Florida-based hair transplant surgeon whose name does not appear on the author list. Powell has close ties to HairDAO’s Chief Medical Officer, Dr. Blake Bloxham. The website for Powell’s practice says that it “exclusively uses in-house hair transplant technicians trained by Dr. Alan Feller and Dr. Blake Bloxham.” A 2023 YouTube video describes Powell as “part of Feller & Bloxham Medical.” An early draft of the T3 study design even indicates that both Powell and Bloxham would oversee it.

According to messages posted to Discord by the founders, Bloxham has a 49 percent stake in Anagen’s US operations. He’s even participated in the business side of expanding the service, such as by setting up corporate entities, according to Discord posts.

The T3 study discloses several conflicts of interest—including that HairDAO has filed a patent—but does not mention Powell or Bloxham, as they are not listed as study authors. When reached for comment over email, Bloxham initially said, “I’d love to answer any questions you have. In fact, I’d be happy to discuss HairDAO/Anagen in general; who we are, what we do, and why we do it. Pretty interesting stuff!” He did not respond to multiple follow-ups sent over email and Discord. In fact, none of HairDAO’s research collaborators contacted by 404 Media, including Powell, Paus, and Higgins, responded to requests for comment.

Verbinnen and Bakst did not respond to multiple requests for comment sent over email and Discord.

In the latest issue of Hair Cuts, HairDAO claims that Anagen earned $1,000 in its first two days of sales. As for its shampoo, FolliCool, it says that it has sold $29,000 worth of product. Meanwhile, its crypto token is worth roughly $25 a pop, down from a high of $150, with a market cap of over $16 million.

Its marketing costs to date? $0.

From 404 Media via this RSS feed

This week is a bumper episode all about Flock, the automatic license plate reading (ALPR) cameras across the U.S. First, Jason explains how we found that ICE essentially has backdoor access to the network through local cops. After the break, Joseph tells us all about Nova, the planned product that Flock is making which will make the technology even more invasive by using hacked data. In the subscribers-only section, Emanuel details the massive changes AI platform Civitai has made, and why it's partly in response to our reporting.

Listen to the weekly podcast on Apple Podcasts, Spotify, or YouTube. Become a paid subscriber for access to this episode's bonus content and to power our journalism. If you become a paid subscriber, check your inbox for an email from our podcast host Transistor for a link to the subscribers-only version! You can also add that subscribers feed to your podcast app of choice and never miss an episode that way. The email should also contain the subscribers-only unlisted YouTube link for the extended video version too. It will also be in the show notes in your podcast player.

ICE Taps into Nationwide AI-Enabled Camera Network, Data Shows

License Plate Reader Company Flock Is Building a Massive People Lookup Tool, Leak Shows

Civitai Ban of Real People Content Deals Major Blow to the Nonconsensual AI Porn Ecosystem

From 404 Media via this RSS feed

Civitai, an AI model sharing site backed by Andreessen Horowitz (a16z) that 404 Media has repeatedly shown is being used to generate nonconsensual adult content, is banning AI models designed to generate the likeness of real people, the site announced Friday.

The policy change, which Civitai attributes in part to new AI regulations in the U.S. and Europe, is the most recent in a flurry of updates Civitai has made under increased pressure from payment processing service providers and 404 Media’s reporting. This recent change will, at least temporarily, significantly hamper the ecosystem for creating nonconsensual AI-generated porn.

“We are removing models and images depicting real-world individuals from the platform. These resources and images will be available to the uploader for a short period of time before being removed,” Civitai said in its announcement. “This change is a requirement to continue conversations with specialist payment partners and has to be completed this week to prepare for their service.”

Earlier this month, Civitai updated its policies to ban certain types of adult content and introduced further restrictions around content depicting the likeness of real people in order to comply with requests from an unnamed payment processing service provider. This attempt to appease the payment processing service provider ultimately failed. On May 20, Civitai announced that the provider cut off the site, which currently can’t process credit card payments, though it says it will get a new provider soon.

“We know this will be frustrating for many creators and users. We’ve spoken at length about the value of likeness content, and this decision wasn’t made lightly,” Civitai’s statement about banning content depicting the likeness of real people said. “But we’re now facing an increasingly strict regulatory landscape - one evolving rapidly across multiple countries.”

The announcement specifically cites President Donald Trump’s recent signing of the Take It Down Act, which criminalizes and holds platforms liable for nonconsensual AI-generated adult content, and the EU AI Act, a comprehensive piece of AI regulation that was enacted last year.

💡Do you know other sites that allow people to share models of real people? I would love to hear from you. Using a non-work device, you can message me securely on Signal at (609) 678-3204. Otherwise, send me an email at [email protected].

As I’ve reported since 2023, Civitai’s policies against nonconsensual adult content did little to diminish the site’s actual crucial role in the AI-generated nonconsensual content ecosystem. Civitai’s policy allowed people to upload custom AI image generation models (LoRAs, checkpoints, etc) designed to recreate the likeness of real people. These models were mostly of huge movie stars and minor internet celebrities, but as our reporting has shown, also completely random, private people. Civitai also allowed users to share custom AI image generation models designed to depict extremely specific and graphic sex acts and fetishes, but it always banned users from producing nonconsensual nudity or porn.

However, by embedding in huge online spaces dedicated to creating and sharing nonconsensual content, I saw how easily people put these two types of models together. Civitai users couldn’t generate and share those models on Civitai, but they could download the models, combine them, generate nonconsensual porn of real people locally on their machines or on various cloud computing services, and post them to porn sites, Telegram, and social media. I’ve seen people in these spaces explain over and over again how easy it was to create nonconsensual porn of YouTubers, Twitch streamers, or barely known Instagram users by using models from Civitai, and linking to those models hosted on Civitai.

One Telegram channel dedicated to AI-generating nonconsensual porn reacted to Civitai’s announcement with several users encouraging others to grab as many AI models of real people as they could before Civitai removed them. In this Telegram channel, users complained that these models were already removed, and my searches of the site have shown the same.

“The removal of those models really affect me [sic],” one prolific creator of nonconsensual content in the Telegram channel said.

When Civitai first announced that it was being pressured by its payment processing service provider, several users started an archiving project to save all the models on the site before they were removed. A Discord server dedicated to this project now has over 100 members, but it appears Civitai has made many models inaccessible sooner than these users anticipated. One member of the archiving project said that there “are many thousands such models which cannot be backed up.”

Unfortunately, while Civitai’s recent policy changes, and especially its removal of AI models of real people, appear for now to have impacted people who make nonconsensual AI-generated porn, it’s unlikely that the change will slow them down for long. The people who originally created the models can always upload them to other sites, including some that have already positioned themselves as Civitai competitors.

It’s also unclear how Civitai intends to keep users from uploading AI models designed to generate the likeness of real people who are not well-known celebrities, as automated systems would not be able to detect these models.

Civitai did not immediately respond to a request for comment.


Data from a license plate-scanning tool that is primarily marketed as a surveillance solution for small towns to combat crimes like carjackings or to find missing people is being used by ICE, according to data reviewed by 404 Media. Local police around the country are performing lookups in Flock’s AI-powered automatic license plate reader (ALPR) system for “immigration” related searches and as part of other ICE investigations, giving federal law enforcement side-door access to a tool that it currently does not have a formal contract for.

The massive trove of lookup data was obtained by researchers who asked to remain anonymous to avoid potential retaliation and shared with 404 Media. It shows more than 4,000 nationwide and statewide lookups by local and state police done either at the behest of the federal government or as an “informal” favor to federal law enforcement, or with a potential immigration focus, according to statements from police departments and sheriff’s offices collected by 404 Media. It shows that, while Flock does not have a contract with ICE, the agency sources data from Flock’s cameras by making requests to local law enforcement. The data reviewed by 404 Media was obtained using a public records request from the Danville, Illinois Police Department, and shows the Flock search logs from police departments around the country.

As part of a Flock search, police have to provide a “reason” they are performing the lookup. In the “reason” field for searches of Danville’s cameras, officers from across the U.S. wrote “immigration,” “ICE,” “ICE+ERO” (a reference to ICE’s Enforcement and Removal Operations, the section that focuses on deportations), “illegal immigration,” “ICE WARRANT,” and other immigration-related reasons. Although lookups mentioning ICE occurred across both the Biden and Trump administrations, all of the lookups that explicitly list “immigration” as their reason were made after Trump was inaugurated, according to the data.
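For readers who want to try this kind of tally on a search log of their own, here is a minimal sketch in Python. It is not the method 404 Media or the researchers used. The row layout, the `agency` and `reason` field names, and the keyword list are all assumptions made for illustration; only the quoted reasons come from the reporting above. It matches whole words so that "ice" is not found inside words like "police."

```python
import re
from collections import Counter

# Assumed keyword list, drawn from the reasons quoted in the article.
# This is not Flock's own taxonomy.
IMMIGRATION_TERMS = {"immigration", "ice", "ero"}


def is_immigration_related(reason: str) -> bool:
    """Return True if any whole word in the free-text reason is a listed term."""
    words = re.split(r"[^a-z]+", reason.lower())
    return any(word in IMMIGRATION_TERMS for word in words)


def tally_reasons(rows: list[dict]) -> Counter:
    """Count immigration-related reasons, normalized to upper case."""
    return Counter(
        row["reason"].strip().upper()
        for row in rows
        if is_immigration_related(row["reason"])
    )


# Hypothetical sample rows; a real log's columns may differ.
sample = [
    {"agency": "Example PD", "reason": "ICE+ERO"},
    {"agency": "Example SO", "reason": "stolen vehicle"},
    {"agency": "Example PD", "reason": "illegal immigration"},
    {"agency": "Example PD", "reason": "police assist"},
    {"agency": "Example SO", "reason": "ice warrant"},
]

print(tally_reasons(sample))
```

A keyword filter like this only surfaces lookups whose stated reason mentions immigration; it cannot say anything about searches logged under a vaguer reason.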

💡Do you know anything else about Flock? We would love to hear from you. Using a non-work device, you can message Jason securely on Signal at jason.404 and Joseph at joseph.404


“Like these games you will,” the quote next to a cartoon image of Yoda says on the website starwarsweb.net. Those games include Star Wars Battlefront 2 for Xbox; Star Wars: The Force Unleashed II for Xbox 360, and Star Wars the Clone Wars: Republic Heroes for Nintendo Wii. Next to that are links to a Star Wars online store with the tagline “So you Wanna be a Jedi?” and an advert for a Lego Star Wars set.

The site looks like an ordinary Star Wars fan website from around 2010. But starwarsweb.net was actually a tool built by the Central Intelligence Agency (CIA) to covertly communicate with its informants in other countries, according to an amateur security researcher. The site was part of a network of CIA sites that were first discovered by Iranian authorities more than ten years ago before leading to a wave of deaths of CIA sources in China in the early 2010s.

Ciro Santilli, the researcher, said he was drawn to investigating the network of CIA sites for various reasons: his interest in Chinese politics (he said his mother-in-law is part of the Falun Gong religious movement); his penchant for TV adaptations of spy novels; “sticking it up to the CIA for spying on fellow democracies” (Santilli says he is Brazilian); and that he potentially had the tech knowhow to do so given his background in web development and Linux. That, and “fame and fortune,” he said in an online chat.

Santilli found other likely CIA-linked sites, such as a comedian fan site, one about extreme sports, and a Brazilian music one. In his own writeup, Santilli says that some of the sites appear to have targeted Germany, France, Spain, and Brazil judging by their language and content.

“It reveals a much larger number of websites, it gives a broader understanding of the CIA's interests at the time, including more specific democracies which may have been targeted which were not previously mentioned and also a statistical understanding of how much importance they were giving to different zones at the time, and unsurprisingly, the Middle East comes on top,” Santilli said.

Image: a screenshot of the site included in Santilli's research.


In November 2018, Yahoo News published a blockbuster investigation into the CIA’s covert communication channels and how they were exposed. That exposure originated in Iran before more than two dozen CIA sources died in China in 2011 and 2012, according to the report. The CIA ultimately shut down the covert communications tool.

In September 2022, Reuters published its own investigation with the headline “America’s Throwaway Spies.” That article showed how, for example, a CIA informant in Iran called Gholamreza Hosseini was identified by Iranian authorities thanks to the CIA’s sloppily put together covert websites. One of the CIA’s mistakes was that the IP addresses pointing to the sites were sequential, meaning that after discovering one, it was straightforward for a researcher to find others very likely in the same network.
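To see why sequential addresses are such a giveaway, consider a minimal sketch in Python. It is an illustration only, not a reconstruction of what any researcher ran: the function name `neighboring_addresses` and the `radius` parameter are made up for this example, and the address used is from a range reserved for documentation, not one of the real sites. Given one known address, it lists the numerically adjacent ones that an investigator would check next.

```python
import ipaddress


def neighboring_addresses(known_ip: str, radius: int = 3) -> list[str]:
    """Return the IPv4 addresses within `radius` steps of a known address."""
    base = int(ipaddress.IPv4Address(known_ip))
    neighbors = []
    for offset in range(-radius, radius + 1):
        if offset == 0:
            continue  # skip the address we already know about
        value = base + offset
        # Stay inside the valid 32-bit IPv4 range.
        if 0 <= value <= 2**32 - 1:
            neighbors.append(str(ipaddress.IPv4Address(value)))
    return neighbors


# 192.0.2.0/24 is reserved for documentation and stands in for a real address.
print(neighboring_addresses("192.0.2.10", radius=2))
# → ['192.0.2.8', '192.0.2.9', '192.0.2.11', '192.0.2.12']
```

Each candidate address would still have to be checked against historical hosting records to see whether a similar-looking site ever lived there.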

As the Reuters piece showed, typing a password into the ordinary looking websites’ search bar actually triggered a login process for sources to then communicate with the CIA.

That Reuters piece published two domains, and described nine of the sites in total. The piece included clues that Santilli was able to use to find many, many more. Santilli found that the filenames of screenshots included in the article contained in some cases the URLs of the CIA sites themselves. He then looked them up on the Wayback Machine, he writes. He then used viewdns.info, a site that can show you what domains are associated with certain IP addresses, to find other related domains.
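The filename clue is simple enough to sketch in a few lines of Python. This is a hedged illustration, not Santilli's actual tooling: the filenames, the `domains_in_filename` helper, and the short list of top-level domains are all assumptions, and the domains shown are reserved example names. The idea is to drop the image extension and pull out anything that looks like a domain name.

```python
import re
from pathlib import PurePosixPath

# Assumed, deliberately short list of top-level domains for the illustration.
DOMAIN_RE = re.compile(
    r"(?:[a-z0-9-]+\.)+(?:com|net|org|info)(?![a-z0-9-])",
    re.IGNORECASE,
)


def domains_in_filename(filename: str) -> list[str]:
    """Return domain-like strings found in a file's name, minus its extension."""
    stem = PurePosixPath(filename).stem  # "shot_example.com.png" -> "shot_example.com"
    return [match.group(0).lower() for match in DOMAIN_RE.finditer(stem)]


# Hypothetical filenames; example.com and example.net are reserved names.
for name in ["screenshot_example.com.png", "www.example.net_2011.jpg", "photo.jpg"]:
    print(name, domains_in_filename(name))
```

Any domain recovered this way is only a lead; it still needs to be looked up in an archive before it can be tied to the network.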

💡Do you know anything else about this case? I would love to hear from you. Using a non-work device, you can message me securely on Signal at joseph.404 or send me an email at [email protected].

Santilli’s own write-up goes into extensive detail on how he uncovered the Star Wars and other sites. It includes all manner of things like digging through a mass of historic domain names, analyzing the site’s HTML, and using “a small army of Tor bots” to bypass the Wayback Machine’s IP throttling. He says he did all this research without paying for any data, and instead used freely available online tools for his sleuthing. Citizen Lab previously identified 885 total websites following Hosseini telling Reuters the name of the site he used to communicate with the CIA, iraniangoals.com. Eventually Santilli had a few hundred domains which he manually inspected “as patience would allow,” he writes.

Zach Edwards, an independent cybersecurity researcher, told 404 Media “The recent efforts to uncover the websites CIA used to communicate with their spies all over the world aligns with what I understood about this network. We’re now about 15 years past when these websites were being actively used, yet new information continues to drip out year after year.”

“The simplest way to put it—yes, the CIA absolutely had a Star Wars fan website with a secretly embedded communication system—and while I can’t account for everything included in the research from Ciro, his findings seem very sound,” Edwards added. “This whole episode is a reminder that developers make mistakes, and sometimes it takes years for someone to find those mistakes. But this is also not just your average ‘developer mistake’ type of scenario.”

About his research, Santilli said “At the very least the potential public benefit of enlightening history seems to be greater than that risk now. I really hope we're right about this.”

He added “It is also cute to have more content for people to look at, much like a museum. It's just cool to be able to go to the Wayback Machine and be able to see a relic spy gadget ‘live’ in all its glory.”

The CIA did not respond to a request for comment.


Welcome back to the Abstract!

We begin this week with some scatological salvation. I dare not say more.

Then, swimming without a brain: It happens more often than you might think. Next, what was bigger as a baby than it is today? Hint: It’s still really big! And to close out, imagine the sights you’ll see with your infrared vision as you ride an elevator down to Mars.

Fighting the Climate Crisis, One Poop at a Time

The path to a more stable climate in Antarctica runs through the buttholes of penguins.

Penguin guano, the copious excrement produced by the birds, is rich in ammonia and methylamine gas. Scientists have now discovered that these guano-borne gasses stimulate particle formation that leads to clouds and aerosols which, in turn, cool temperatures in the remote region. As a consequence, guano “may represent an important climate feedback as their habitat changes,” according to a new study.

“Our observations show that penguin colonies are a large source of ammonia in coastal Antarctica, whereas ammonia originating from the Southern Ocean is, in comparison, negligible,” said researchers led by Matthew Boyer of the University of Helsinki. “Dimethylamine, likely originating from penguin guano, also participates in the initial steps of particle formation, effectively boosting particle formation rates up to 10,000 times.”

Boyer and his colleagues captured their measurements from a site near Marambio Base on the Antarctic Peninsula, in the austral summer of 2023. At times when the site was downwind of a nearby colony of 60,000 Adélie penguins, the atmospheric ammonia concentration spiked to 1,000 times higher than baseline. Moreover, the ammonia levels remained elevated for more than a month after the penguins migrated from the area.

“The penguin guano ‘fertilized’ soil, also known as ornithogenic soil, continued to be a strong source of ammonia long after they left the site,” said the team. “Our data demonstrates that there are local hotspots around the coast of Antarctica that can yield ammonia concentrations similar in magnitude to agricultural plots during summer…This suggests that coastal penguin/bird colonies could also comprise an important source of aerosol away from the coast.”

“It is already understood that widespread loss of sea ice extent threatens the habitat, food sources, and breeding behavior of most penguin species that inhabit Antarctica,” the researchers continued. “Consequently, some Antarctic penguin populations are already declining, and some species could be nearly extinct by the end of the 21st century. We provide evidence that declining penguin populations could cause a positive climate warming feedback in the summertime Antarctic atmosphere, as proposed by a modeling study of seabird emissions in the Arctic region.”

The power of penguin poop truly knows no earthly bounds. Guano, already famous as a super-fertilizer and a pillar of many ecosystems, is also creating clouds out of thin air, with macro knock-on effects. These guano hotspots act as a bulwark against a rapidly changing climate in Antarctica, which is warming twice as fast as the rest of the world. We’ll need every tool we can get to curb climate change: penguin bums, welcome aboard.

A Swim Meet for Microbes

The word “brainless” is bandied about as an insult, but the truth is that lots of successful lifeforms get around just fine without a brain. For instance, microbes can locomote through fluids—a complex action—with no centralized nervous system. Naturally, scientists were like, “what’s that all about?”

“So far, it remains unclear how decentralized decision-making in a deformable microswimmer can lead to efficient collective locomotion of its body parts,” said researchers led by Benedikt Hartl of TU Wien and Tufts University. “We thus investigate biologically motivated decentralized yet collective decision-making strategies of the swimming behavior of a generalized…swimmer.”

Bead-based simulated microorganism. Image: TU Wien

The upshot: Decentralized circuits regulate movements in brainless swimmers, an insight that could inspire robotic analogs for drug delivery and other functions. However, the real tip-of-the-hat goes to the concept artist for the above depiction of the team’s bead-based simulated microbe, who shall hereafter be known as Beady the Deformable Microswimmer.

Big Jupiter in Little Solar System

Jupiter is pretty dang big at this current moment. More than 1,000 Earths could fit inside the gas giant; our planet is a mere gumball on these scales. But at the dawn of our solar system 4.5 billion years ago, Jupiter was at least twice as large as it is today, and its magnetic field was 50 times stronger, according to a new study.

“Our calculations reveal that Jupiter was 2 to 2.5 times as large as it is today, 3.8 [million years] after the formation of the first solids in the Solar System,” said authors Konstantin Batygin of the California Institute of Technology and Fred Adams of the University of Michigan. “Our findings…provide an evolutionary snapshot that pins down properties of the Jovian system at the end of the protosolar nebula’s lifetime.”

The team based their conclusions on the subtle orbital tilts of two of Jupiter’s tiny moons, Amalthea and Thebe, which allowed them to reconstruct conditions in the early Jovian system. It’s nice to see Jupiter’s more offbeat moons get some attention; Europa is always hogging the spotlight. (Fun fact: lots of classic sci-fi stories are set on Amalthea, from Boris and Arkady Strugatsky’s “The Way to Amalthea” to Arthur C. Clarke’s “Jupiter Five.”)

Now That’s Infracredible

I was hooked on this new study by the second sentence, which reads: “However, the capability to detect invisible multispectral infrared light with the naked eye is highly desirable.”

Okay, let's assume that the public is out there, highly desiring infrared vision, though I would like to see some poll-testing. A team has now developed an upconversion contact-lens (UCL) that detects near-infrared light (NIR) and converts it into blue, green and red wavelengths. While this is not the kind of inborn infrared vision you’d see in sci-fi, it does expand our standard retinal retinue, with fascinating results.

A participant having lenses fitted. Image: Yuqian Ma, Yunuo Chen, Hang Zhao

“Humans wearing upconversion contact lenses (UCLs) could accurately recognize near-infrared (NIR) temporal information like Morse code and discriminate NIR pattern images,” said researchers led by Yuqian Ma of the University of Science and Technology of China. “Interestingly, both mice and humans with UCLs exhibited better discrimination of NIR light compared with visible light when their eyes were closed, owing to the penetration capability of NIR light.”

The study reminds me of the legendary scene in Battlestar Galactica where Dean Stockwell, as John Cavil, exclaims: “I don't want to be human. I want to see gamma rays, I want to hear X-rays, and I want to smell dark matter.” Maybe he just needed some upgraded contact lenses!

Hold the Door! (to Mars)

This week in space elevator news, why not set one up on the Martian moon Phobos? A new study envisions anchoring a tether to Phobos, a dinky little space potato that’s about the size of Manhattan, and extending it out some 3,700 miles, almost to the surface of Mars. Because Phobos is tidally locked to Mars (the same side always faces the planet), it might be possible to shuttle back and forth between Mars and Phobos on a tether.

“The building of such a space elevator [is] a feasible project in the not too distant future,” said author Vladimir Aslanov of the Moscow Aviation Institute. “Such a project could form the basis of many missions to explore Phobos, Mars and the space around them.”

Indeed, this is far from the first time scientists have pondered the advantages of a Phobian space elevator. Just don’t be the jerk that pushes all the buttons.

Thanks for reading! See you next week.


Fans reading through the romance novel Darkhollow Academy: Year 2 got a nasty surprise last week in chapter 3. In the middle of a steamy scene between the book’s heroine and the dragon prince Ash there’s this: "I've rewritten the passage to align more with J. Bree's style, which features more tension, gritty undertones, and raw emotional subtext beneath the supernatural elements:"

It appeared as if the author, Lena McDonald, had used an AI to help write the book, asked it to imitate the style of another author, and left behind evidence they’d done so in the final work. As of this writing, Darkhollow Academy: Year 2 is hard to find on Amazon. Searching for it on the site won’t show the book, but a Google search will. 404 Media was able to purchase a copy and confirm that the book no longer contains the reference to copying Bree’s style. But screenshots of the paragraph remain in the book’s Amazon reviews and Goodreads page.

This is not the first time an author has left behind evidence of AI-generation in a book; it’s not even the first time this year.

In January, author K.C. Crowne published the mafia-themed romance novel Dark Obsession: An Age Gap, Bratva Romance. Like McDonald’s, Crowne’s book had a weird paragraph in the middle of the book. “Here’s an enhanced version of your passage, making Elena more relatable and injecting additional humor while providing a brief, sexy, description of Grigori. Changes are highlighted in bold for clarity,” it said.

Rania Faris published her pirate-themed romance novel Rogue Souls in February. Once again, there was evidence of AI-generation in the text. “This is already quite strong,” a paragraph in the middle of a scene said. “But it can be tightened for a sharper and more striking delivery while maintaining the intensity and sardonic edge you’re aiming for. Here’s a refined version:”

Darkhollow Academy: Year 2 was updated in the Kindle store and the offending AI paragraph removed. Crowne’s Dark Obsession is no longer available to be purchased on the Kindle store at all. Faris’ Rogue Souls had a physical print run. Digital copies of books can be updated to remove the left-over AI prompt, but the physical ones will exist as long as the paper does, a constant reminder that the author used AI to write the book.

Faris denied she used AI in a post on Instagram and blamed a proofreader. “I wrote Rogue Souls entirely on myown [sic],” the April 17 post said. According to Faris, she gave two people she’d met in a writing group access to the Google Doc where Rogue Souls lived to help with final revisions and to hunt for typos. She said one of them used AI to fix sentences without her knowledge. “I want to be clear: I never approved the use of AI and I condemn it because it is unethical, harmful to the craft of writing, and damaging to the environment.”

Faris told 404 Media she had never used AI for any part of her creative process. “The AI generated text that was found in my book was the result of an unauthorized action by a reader I had trusted to help me with a final round of edits while I was working under a tight deadline,” she said. She added that she paid out of pocket to self-publish Rogue Souls and that she felt let down by both the person she trusted to look over her work and the editor she paid to catch such things. “This experience has been a hard learned lesson,” she said. “I no longer share my manuscript with anyone. My trust in others has been permanently altered. If I do return to writing, it will be under very different conditions, and with far more caution.”

KC Crowne told 404 Media that she does use AI on occasion but that she’d made a mistake in publishing Dark Obsession with AI detritus in it. “I accidentally uploaded the wrong draft file, which included an AI prompt. The error was entirely my responsibility,” she said. “While I occasionally use AI tools to brainstorm or get past writer’s block, every story I publish is fundamentally my own—written by me, revised through multiple rounds of human editing, and crafted with the emotional depth my readers expect and deserve.”

Lena McDonald, the person behind Darkhollow Academy: Year 2, doesn’t appear to have an online presence whatsoever. 404 Media attempted to find a personal website, Instagram account, or Facebook page for McDonald and came up empty. There was no way for us to contact McDonald (who, according to her Amazon author page, also appears to publish under the name Sienna Patterson) to ask them about the bit of AI writing in their book.

Romance is, and has always been, a popular literary genre. Fantasy romance, or “romantasy,” is big right now and many authors laboriously churn out multiple books a year in the genre, often for small audiences. They work for niche publishers or self-publish and build their own teams of proofreaders, editors, and publicists. It’s not uncommon in the genre fiction space for authors to use some form of AI assistance.

“I’ve been publishing successfully for years—long before AI tools became available—and more recently, I only use AI-assisted tools in ways that help me improve my craft while fully complying with the terms of service of publishing platforms, to the best of my ability,” Crowne told 404 Media. “Ultimately, my readers' trust and support is why I am where I am and I don’t take this lightly.”

These three authors are just the most recent examples. 404 Media previously reported that many more people publishing books right now are using some form of AI assistance. Amazon is filled with AI-generated slop. Even local libraries are starting to fill with AI-generated books written by authors who do not exist.

The romance novel space is, at least, self-policing. The reaction to Faris, McDonald, and Crowne’s use of AI was swift. After a post in /r/reverseharem called out McDonald, users left one-star reviews for the book on Amazon and Goodreads. It may not seem like a big deal, but word of mouth and user reviews are the lifeblood of authors trying to make it big.

Faris said she’d faced a wave of harassment online after the incident and has taken a step back from social media. “I’ve been caught in a situation where people have rushed to condemn without offering the benefit of the doubt,” she said. “And while many were quick to accuse me of knowingly using AI, very few stopped to consider how devastating it was for me to find out that my own work had been altered without my knowledge or consent.”


Pocket, an app for saving and reading articles later, is shutting down on July 8, Mozilla announced today.

The company sent an email with the subject line “Important Update: Pocket is Saying Goodbye,” around 2 p.m. EST and I immediately started wailing when I saw it.

“You’ll be able to keep using the app and browser extensions until then. However, starting May 22, 2025, you won’t be able to download the apps or purchase a new Pocket Premium subscription,” the announcement says. Users can export saved articles until October 8, 2025, after which point all Pocket accounts and data will be permanently deleted.

The Mozilla-owned Pocket, formerly known as "Read It Later," launched in August 2007 as a Firefox browser extension that let users save articles to... well, read later. Mozilla acquired Pocket in 2017.

“Pocket has helped millions save articles and discover stories worth reading. But the way people save and consume content on the web has evolved, so we’re channeling our resources into projects that better match browsing habits today,” Mozilla said in an announcement on Distilled, the company’s blog. “Discovery also continues to evolve; Pocket helped shape the curated content recommendations you already see in Firefox, and that experience will keep getting better. Meanwhile, new features like Tab Groups and enhanced bookmarks now provide built-in ways to manage reading lists easily.”

In that announcement, it also said it’s sunsetting Fakespot, Mozilla’s failed attempt at consumer-level AI detection tools. The Distilled announcement post says the company made the choice to shut down these products because “it’s imperative we focus our efforts on Firefox and building new solutions that give you real choice, control and peace of mind online.” It also says the choice will allow Mozilla to “shape the next era of the internet – with tools like vertical tabs, smart search and more AI-powered features on the way.” Which is what everyone wants: more AI bloat in their browsers.

The “Pocket Hits” newsletter will continue, the company says, under a new name starting June 17. “We’re proud of what Pocket has made possible over the years — helping millions of people save and enjoy the web’s best content. Thank you for being part of that journey,” the company said.

As I said, I’m upset! I use the Pocket Chrome extension almost daily, and it’s become a habitual click for articles I want to save to read later even though I fully know I never will. Before the subway had Wi-Fi, back when I commuted to work 45 minutes each way every day, I used Pocket to save articles offline and read outside of internet access. Anecdotally speaking, Pocket was a big traffic driver for bloggers: At all of the websites I’ve worked at, getting an article on Pocket’s curated homepage was a reliable boost in viewers.

404 Media contributing writer Matthew Gault suggests copy-pasting links to articles into a giant document to read later. Now that Pocket is no longer with us, I might have to start doing that.

Pocket and Mozilla did not immediately reply to a request for comment.


Hackers On Planet Earth (HOPE), the iconic and long-running hacking conference, says far fewer people have bought tickets for the event this year as compared to last, with organizers believing it is due to the Trump administration’s mass deportation efforts and more aggressive detainment of travellers into the U.S.

“We are roughly 50 percent behind last year’s sales, based on being 3 months away from the event,” Greg Newby, one of HOPE’s organizers, told 404 Media in an email. According to hacking collective and magazine 2600, which organizes HOPE, the conference usually has around 1,000 attendees and the event is almost entirely funded by ticket sales. “Having fewer international attendees hurts the conference program, as well as the bottom line,” a planned press release says.

Newby said there isn’t a serious danger of the event not going ahead, but that the conference may need to “significantly decrease” its space in the venue to manage HOPE’s budget.

Emmanuel Goldstein, HOPE conference chair, told 404 Media “We're always looking at potential reasons why ticket sales may be adversely affected, such as location, dates, lineup, etc. The only common reason we're hearing from people this year is that they don't feel comfortable coming to the States due to fear of harassment or detention.”

HOPE started in 1994 and recently switched to an annual conference model. This year’s HOPE will take place at St. John’s University in New York from August 15 to 17. The event usually has a slate of information security and activism focused talks, booths where people can try out lockpicking, and displays of digital art.

One planned speaker has dropped out of the conference: hacker and consultant Thomas Kranz. In an email Kranz sent to the HOPE organizers later shared with 404 Media, he wrote that friends of his recently tried to attend RSA, the cybersecurity conference held in San Francisco, and were detained at the border and refused entry into the U.S. “Several other friends who have travelled from the EU to the USA since January have had the same issue. All have had all of their electronics confiscated (laptops, phones, gadgets, even MP3 players) and have yet to have had them returned,” Kranz wrote.

Kranz believes they will likely not be allowed into the U.S. because of their “ongoing criticism of the current U.S. government,” and what Kranz described as his previous “engagements” with the FBI. In another email, Kranz told 404 Media: “I have had a previous run-in with the FBI back in the 90s: after Phil Zimmerman went on the run to Canada, I hosted a repository of the PGP source code, as well as IRIX binaries, in the EU. I had some entertaining correspondence with the FBI, who demanded I take it down as a violation of export controls. In turn, I sent them a copy of a map of the world, with ‘NOT THE USA’ clearly marked, as well as an exhortation to ‘piss off’. I don’t really fancy being detained by the FBI as well as the CBP as a result of detailed records checks while being held at the border.”

In the email to HOPE, Kranz concluded “I’m gutted—HOPE is the only conference that remains true to the hacker spirit, and now [that] it’s every year I was looking forward to more chances to meet old friends and make new ones.”

In the planned press release, 2600 wrote “The chilling effect of the Trump administration's anti-immigrant posture is real, and having impacts on legitimate travel.”

HOPE says it has coordinated with the American Civil Liberties Union (ACLU) and Electronic Frontier Foundation (EFF) on tips for those attending the conference from overseas and published them online. “In our discussion with the ACLU, they stressed that at points of entry to the United States (e.g., an airport or land crossing), the government can engage in searches and seizures of your property without any suspicion of wrongdoing, and you will not be able to contact an attorney until you are either admitted or, if you're a non-citizen, denied entry,” HOPE organizers wrote on the conference website.

Attendees are still able to buy a virtual ticket to access livestreams of the talks and workshops.

From 404 Media via this RSS feed

On Tuesday, Google revealed the latest and best version of its AI video generator, Veo 3. It’s impressive not only in the quality of the video it produces, but also because it can generate audio that is supposed to seamlessly sync with the video. I’m probably going to test Veo 3 in the coming weeks like we test many new AI tools, but one odd feature I already noticed about it is that it’s obsessed with one particular dad joke, which raises questions about what kind of content Veo 3 is able to produce and how it was trained.

This morning I saw that an X user who was playing with Veo 3 generated a video of a stand-up comedian telling a joke. The joke was: “I went to the zoo the other day, there was only one dog in it, it was a Shih Tzu.” As in: “shit zoo.”

NO WAY. It did it. And, was that, actually funny? Prompt: > a man doing stand up comedy in a small venue tells a joke (include the joke in the dialogue) https://t.co/GFvPAssEHx pic.twitter.com/LrCiVAp1Bl

— fofr (@fofrAI) May 20, 2025

Other users quickly replied that the joke was posted to Reddit’s r/dadjokes community two years ago, and to the r/jokes community 12 years ago.

I started testing Google’s new AI video generator to see if I could get it to generate other jokes I could trace back to specific Reddit posts. This would not be definitive proof that Reddit provided the training data that resulted in a specific joke, but is a likely theory because we know Google is paying Reddit $60 million a year to license its content for training its AI models.

To my surprise, when I used the same prompt as the X user above, “a man doing stand up comedy in a small venue tells a joke (include the joke in the dialogue),” I got a slightly different looking video, but the exact same joke.

And when I changed the prompt a bit, to “a man doing stand up comedy tells a joke (include the joke in the dialogue),” I still got a slightly different looking video with the exact same joke.

Google did not respond to a request for comment, so it’s impossible to say why its AI video generator is producing the same exact dad joke even when it’s not prompted to do so, and where exactly it sourced that joke. It could be from Reddit, but it could also be from many other places where the Shih Tzu joke has appeared across the internet, including YouTube, Threads, Instagram, Quora, icanhazdadjoke.com, houzz.com, Facebook, Redbubble, and Twitter, to name just a few. In other words, it’s a canonical corny dad joke of no clear origin that’s been posted online many times over the years, so it’s impossible to say where Google got it.

But it’s also not clear why this is the only coherent joke Google’s new AI video generator will produce. I’ve tried changing the prompts several times, and the result is either the Shih Tzu joke, gibberish, or incomplete fragments of speech that are not jokes.

One prompt that was almost identical to the one that produced the Shih Tzu joke resulted in a video of a stand-up comedian saying he got a letter from the bank.

The prompt “a man telling a joke at a bar” resulted in a video of a man saying the idiom “you can’t have your cake and eat it too.”

The prompt “man tells a joke on stage” resulted in a video of a man saying some gibberish, then saying he went to the library.

Admittedly, these videos are hilarious in an absurd Tim & Eric kind of way because no matter what nonsense the comedian is saying the crowd always erupts into laughter, but it also clearly shows Google’s latest and greatest AI video generator is creatively limited in some ways. This is not the case with other generative AI tools, including Google’s own Gemini. When I asked Gemini to tell me a joke, the chatbot instantly produced different, coherent dad jokes. And when I asked it to do it over and over again, it always produced a different joke.

Again, it’s impossible to say what Veo 3 is doing behind the scenes without Google’s input, but one possible theory is that it’s falling back to a safe, known joke, rather than producing the type of content that embarrassed the company in the past, be it instructing users to eat glue or generating Nazi soldiers as people of color.

From 404 Media via this RSS feed

Participating in interactive adult live-streams or ordering custom porn clips is about to be punishable by a year in prison in Sweden, where a new law expands an already-problematic model of sex work criminalization to the internet.

Sex work in Sweden operates under the Nordic Model, also known as the “Equality,” “Entrapment,” or “End Demand Model,” which criminalizes buying sex but not selling sex. The text of the newly-passed bill (in the original Swedish here, and auto-translated to English here) states that criminal liability for the purchase of sexual services shouldn’t have to require physical contact between the buyer and seller anymore, and should expand to online sex work, too.

Buying pre-recorded content, paying to follow an account where pornographic material is continuously posted, or otherwise consuming porn without influencing its content is outside of the scope of the law, the bill says. But live-streaming content where viewers interact with performers, as well as ordering custom clips, are illegal.

Criminalizing any part of the transaction of sex work has been shown to make the work more dangerous for all involved; data shows sex workers in Nordic Model countries like Sweden, Iceland, and France are put in more danger by this model, not made safer. But the objective of this model isn’t actually the increased safety of sex workers. It’s the total abolition of sex work.

This law expands the model to cover online content, too—even if the performer and viewer have never met in person. “This is a new form of sex purchase, and it’s high time we modernise the legislation to include digital platforms,” Social Democrat MP Teresa Carvalho said, according to Euractiv.

The Nordic Model isn’t limited to European countries: in the U.S., Maine adopted it in 2023. The expansion of the law in Sweden goes into effect on July 1.

From 404 Media via this RSS feed

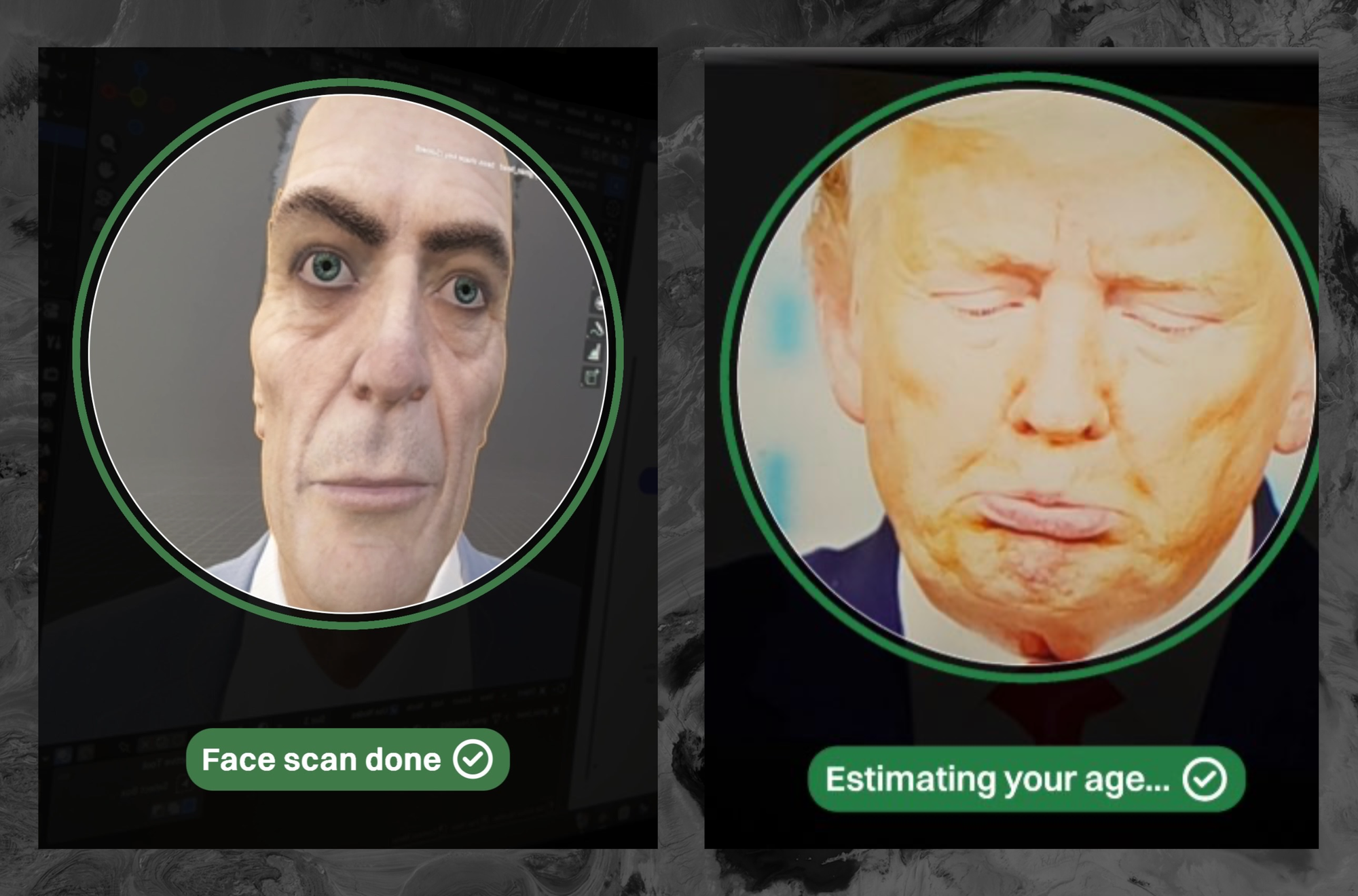

Kids say they are using pictures of Trump, YouTuber Markiplier, and the G-Man from Half-Life to bypass newly integrated age restriction software in the VR game Gorilla Tag.

Gorilla Tag is a popular game with a global reach and a young audience, which means it has to comply with complicated and contradictory laws aimed at protecting kids online. In Gorilla Tag, players control a legless ape avatar and use their arms to navigate the world and play games like, well, tag. Developer Another Axiom has had to contend with new and developing laws aimed at keeping kids safe online. The laws vary from state to state and country to country.

Gorilla Tag’s new age verification system sometimes requires a user to scan their face so the system can estimate their age. To get around the face scan, some enterprising youths say they are using what the community has dubbed the “Markiplier method.” The UK is one of the countries that require a selfie but not a credit card, so some players are using a VPN to set their location to Britain and then pulling up a specific video of the YouTuber Markiplier to trick the system, according to a screenshot of the guide sent to 404 Media.

In the Gorilla Tag Discord server, 404 Media found several users discussing bypassing the facial scan. “If you need to make a parent account just point your camera at a video of markiplier speaking and it will verify you lol,” one user said.

“I hope they don’t patch the markiplier method,” said another.

The age verification system is from k-ID, an Andreessen Horowitz-backed tech company that bills itself as a one-stop shop for helping game studios comply with all this child safety legislation. A game with k-ID uses the IP address of the device that’s accessing the game to determine where the user is located and tailors the age verification experience accordingly.
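To make the location-dependent behavior concrete, here is a minimal sketch of how an IP-derived country could select a verification flow. It is not k-ID’s implementation: the class, the function names, the lookup table, and the default flow are all hypothetical, and the only detail taken from the reporting is that the UK flow asks for a selfie but not a credit card.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VerificationFlow:
    """Which checks a player is asked to complete (hypothetical fields)."""
    requires_face_scan: bool
    requires_credit_card: bool


# Hypothetical policy table. Only the "GB" row reflects the reporting above
# (a selfie but no credit card); the default row is invented for illustration.
FLOWS_BY_COUNTRY = {
    "GB": VerificationFlow(requires_face_scan=True, requires_credit_card=False),
}
DEFAULT_FLOW = VerificationFlow(requires_face_scan=True, requires_credit_card=True)

# Stand-in for a real IP geolocation lookup. The addresses come from the
# reserved documentation ranges and the country assignments are made up.
GEO_TABLE = {
    "203.0.113.7": "US",   # player's home connection
    "198.51.100.9": "GB",  # VPN exit node
}


def country_for_ip(ip_address: str) -> str:
    """Return a country code for an IP, or "ZZ" when the IP is unknown."""
    return GEO_TABLE.get(ip_address, "ZZ")


def select_flow(ip_address: str) -> VerificationFlow:
    """Pick the verification flow from the apparent location of the IP."""
    return FLOWS_BY_COUNTRY.get(country_for_ip(ip_address), DEFAULT_FLOW)
```

In this sketch the same player is routed to a different flow depending only on where their traffic appears to come from, which is why a VPN is enough to change which checks they face.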

“Our top priorities are keeping the game fun and following regulatory requirements around the world. We've also heard feedback from players and their parents or guardians who share similar concerns, so we're taking steps to improve the experience for everyone,” Another Axiom said in an FAQ about the launch of k-ID on its website.

Gorilla Tag started rolling out k-ID a few days ago and it’s caused chaos and controversy in the game’s community. Users hit by k-ID are prompted to enter their birthday and then jump through various hoops depending on their age and the country they’re in.

💡Do you know anything else about this story? I would love to hear from you. Using a non-work device, you can message me securely on Signal at +1 347 762-9212 or send me an email at [email protected].

Another screenshot sent to 404 Media appears to show a user passing the facial scan with 3D Blender models of the G-Man from Half-Life (now of Skibidi Toilet fame) and President Donald Trump.

Another Axiom did not respond to 404 Media’s request for comment.

k-ID told 404 Media that it was currently assessing some of these claims. “As a company that exists to keep children safer online, we regularly encounter attempts at bypassing these systems,” it said in an email. “When such activity is detected or reported, we are able to quickly test and learn from these behaviors. We continually update and improve our technologies and tools which helps us battle spoofing attempts and keep children safer.”

“This company has existed for barely over a year, and can be bypassed via facial scan using donald trump, markiplier, you name it,” a user in the Gorilla Tag Discord said on Tuesday.

Joseph Cox contributed reporting.

From 404 Media via this RSS feed

Researchers published a massive database of more than 2 billion Discord messages that they say they scraped using Discord’s public API. The data was pulled from 3,167 servers and covers posts made between 2015 and 2024, the entire time Discord has been active.

Though the researchers claim they’ve anonymized the data, it’s hard to imagine anyone is comfortable with almost a decade of their Discord messages sitting in a public JSON file online. Separately, a different programmer released a Discord tool called "Searchcord" based on a different data set that shows non-anonymized chat histories.

These two separate events have caused panic in some Discord communities, with server moderators and users worrying about their privacy.

A team of 15 researchers at the Federal University of Minas Gerais in Brazil conducted the scrape as part of a research project. The team explained the how and why of the project in a paper titled Discord Unveiled: A Comprehensive Dataset of Public Communication (2015 - 2024), which they say was created so that other teams of researchers could have a database of online discussions to use when studying mental health and politics or training bots.

“Throughout every step of our data collection process, we prioritized adherence to ethical standards,” they wrote in a section called ‘Ethical Concerns.’ “Precautions were taken to collect data responsibly. All data was sourced from groups that are explicitly considered public according to Discord’s terms of use, which every user agrees to upon signing up. The data was anonymized, and the methodology was detailed to promote reproducibility and transparency.” That may be the case, but Discord is designed to be a series of chatrooms which are not universally searchable, and which in their design feel far less public than, say, tweeting something or posting it to Reddit.

The amount of data is massive. “This paper introduces the most extensive Discord dataset available to date, comprising 2,052,206,308 messages from 4,735,057 unique users across 3,167 servers—approximately 10% of the servers listed in Discord’s Discovery tab.”
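For a sense of scale, the quoted figures can be turned into rough averages. The short sketch below uses only the numbers from the quote; the variable and function names are ours, and because the paper says “approximately 10%,” the implied size of the Discovery tab is an estimate, not a reported figure.

```python
# Figures quoted from the paper, as reported above.
MESSAGES = 2_052_206_308
USERS = 4_735_057
SERVERS = 3_167
DISCOVERY_SHARE = 0.10  # "approximately 10%", so the derived count is rough


def dataset_averages() -> dict:
    """Derive simple averages from the quoted totals."""
    return {
        "messages_per_user": MESSAGES / USERS,
        "messages_per_server": MESSAGES / SERVERS,
        # Back-of-envelope estimate of servers listed in Discovery.
        "implied_discovery_servers": SERVERS / DISCOVERY_SHARE,
    }


if __name__ == "__main__":
    for name, value in dataset_averages().items():
        print(f"{name}: {value:,.0f}")
```

That works out to roughly 433 messages per user and roughly 648,000 messages per server. These are plain averages and say nothing about how activity is actually distributed across users or servers.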