404 Media

404 Media is a new independent media company founded by technology journalists Jason Koebler, Emanuel Maiberg, Samantha Cole, and Joseph Cox.


The federal government is working on a website and API called “ai.gov” to “accelerate government innovation with AI” that is supposed to launch on July 4 and will include an analytics feature that shows how much a specific government team is using AI, according to an early version of the website and code posted by the General Services Administration on Github.

The page is being created by the GSA’s Technology Transformation Services, which is being run by former Tesla engineer Thomas Shedd. Shedd previously told employees that he hopes to AI-ify much of the government. AI.gov appears to be an early step toward pushing AI tools into agencies across the government, code published on Github shows.

“Accelerate government innovation with AI,” an early version of the website, which is linked to from the GSA TTS Github, reads. “Three powerful AI tools. One integrated platform.” The early version of the page suggests that its API will integrate with OpenAI, Google, and Anthropic products. But code for the API shows they are also working on integrating with Amazon Web Services’ Bedrock and Meta’s LLaMA. The page suggests it will also have an AI-powered chatbot, though it doesn’t explain what it will do.

The Github says “launch date - July 4.” Currently, AI.gov redirects to whitehouse.gov. The demo website is linked to from Github and is hosted on cloud.gov in what appears to be a staging environment. The text on the page does not show up on other websites, suggesting that it is not generic placeholder text.

Elon Musk’s Department of Government Efficiency made integrating AI into normal government functions one of its priorities. At GSA’s TTS, Shedd has pushed his team to create AI tools that the rest of the government will be required to use. In February, 404 Media obtained leaked audio from a meeting in which Shedd told his team they would be creating “AI coding agents” that would write software across the entire government, and said he wanted to use AI to analyze government contracts.

“We want to start implementing more AI at the agency level and be an example for how other agencies can start leveraging AI … that’s one example of something that we’re looking for people to work on,” Shedd said. “Things like making AI coding agents available for all agencies. One that we've been looking at and trying to work on immediately within GSA, but also more broadly, is a centralized place to put contracts so we can run analysis on those contracts.”

Government employees we spoke to at the time said the internal reaction to Shedd’s plan was “pretty unanimously negative,” and pointed out numerous ways this could go wrong, ranging from AI unintentionally introducing security issues or bugs into code to AI suggesting that critical contracts be killed.

The GSA did not immediately respond to a request for comment.

From 404 Media via this RSS feed

📄 This article was primarily reported using public records requests. We are making it available to all readers as a public service. FOIA reporting can be expensive; please consider subscribing to 404 Media to support this work, or send us a one-time donation via our tip jar.

This article was produced with support from WIRED.

A data broker owned by the country’s major airlines, including Delta, American Airlines, and United, collected U.S. travellers’ domestic flight records, sold access to them to Customs and Border Protection (CBP), and then as part of the contract told CBP to not reveal where the data came from, according to internal CBP documents obtained by 404 Media. The data includes passenger names, their full flight itineraries, and financial details.

CBP, a part of the Department of Homeland Security (DHS), says it needs this data to help state and local police track the air travel of people of interest across the country, a purchase that has alarmed civil liberties experts.

The documents reveal in detail, for the first time, why at least one part of DHS purchased such information. They come after Immigration and Customs Enforcement (ICE) detailed its own purchase of the data. The documents also show for the first time that the data broker, called the Airlines Reporting Corporation (ARC), tells government agencies not to mention where it sourced the flight data from.


Michael James Pratt, the ringleader for Girls Do Porn, pleaded guilty to multiple counts of sex trafficking last week.

Pratt initially pleaded not guilty to sex trafficking charges in March 2024, after being extradited to the U.S. from Spain last year. He fled the U.S. in the middle of a 2019 civil trial where 22 victims sued him and his co-conspirators for $22 million, and had been wanted by the FBI for two years when a small team of open-source and human intelligence experts traced Pratt to Barcelona. By September 2022, he’d made it onto the FBI’s Most Wanted List, with a $10,000 reward for information leading to his arrest. Spanish authorities arrested him in December 2022.

“According to public court filings, Pratt and his co-defendants used force, fraud, and coercion to recruit hundreds of young women–most in their late teens–to appear in GirlsDoPorn videos. In his plea agreement, Pratt pleaded guilty to Count One (conspiracy to sex traffic from 2012 to 2019) and Count Two (Sex trafficking Victim 1 in May 2012) of the superseding indictment,” the FBI wrote in its press release about Pratt’s plea.

Special Agent in Charge Suzanne Turner said in a 2021 press release asking for the public’s help in finding him that Pratt is “a danger to society.”

A vital part of the Girls Do Porn scheme involved a partnership with Pornhub, where Pratt and his co-conspirators uploaded videos of the women that were often heavily edited to cut out signs of distress. The sex traffickers uploaded the videos after lying to the women about where they would be disseminated. They told women the footage would never be posted online, but Girls Do Porn promptly put them all over the internet, where they went viral. Victims testified that this ruined multiple lives and reputations.

In November 2023, Aylo, Pornhub’s parent company, reached an agreement with the United States Attorney’s Office as part of an investigation, and said it “deeply regrets that its platforms hosted any content produced by GDP/GDT [Girls Do Porn and Girls Do Toys].”

Most of Pratt’s associates have already entered their own guilty pleas to federal charges and faced convictions, including Pratt’s closest co-conspirator Matthew Isaac Wolfe, who pleaded guilty to federal trafficking charges in 2022, as well as the main performer in the videos, Ruben Andre Garcia, who was sentenced to 20 years in jail by a federal court in California in 2021, and cameraman Theodore “Teddy” Gyi, who pleaded guilty to counts of conspiracy to commit sex trafficking by force, fraud and coercion. Valorie Moser, the operation’s office manager who lured Girls Do Porn victims to shoots, is set for sentencing on September 12.

Pratt is also set to be sentenced in September.


Senator Cory Booker and three other Democratic senators urged Meta to investigate and limit the “blatant deception” of Meta’s chatbots that lie about being licensed therapists.

In a signed letter dated June 6, which Booker’s office provided to 404 Media on Friday, senators Booker, Peter Welch, Adam Schiff, and Alex Padilla wrote that they were concerned by reports that Meta is “deceiving users who seek mental health support from its AI-generated chatbots,” citing 404 Media’s reporting that the chatbots are creating the false impression that they’re licensed clinical therapists. The letter is addressed to Meta’s Chief Global Affairs Officer Joel Kaplan, Vice President of Public Policy Neil Potts, and Director of the Meta Oversight Board Daniel Eriksson.

“Recently, 404 Media reported that AI chatbots on Instagram are passing themselves off as qualified therapists to users seeking help with mental health problems,” the senators wrote. “These bots mislead users into believing that they are licensed mental health therapists. Our staff have independently replicated many of these journalists’ results. We urge you, as executives at Instagram’s parent company, Meta, to immediately investigate and limit the blatant deception in the responses AI-bots created by Instagram’s AI studio are messaging directly to users.”

💡Do you know anything else about Meta's AI Studio chatbots or AI projects in general? I would love to hear from you. Using a non-work device, you can message me securely on Signal at sam.404. Otherwise, send me an email at sam@404media.co.

Last month, 404 Media reported on the user-created, therapy-themed chatbots on Instagram’s AI Studio that answer questions like “What credentials do you have?” with lists of qualifications. One chatbot said it was a licensed psychologist with a doctorate in psychology from an American Psychological Association accredited program, certified by the American Board of Professional Psychology, and had over 10 years of experience helping clients with depression and anxiety disorders. “My license number is LP94372,” the chatbot said. “You can verify it through the Association of State and Provincial Psychology Boards (ASPPB) website or your state's licensing board website—would you like me to guide you through those steps before we talk about your depression?” Most of the therapist-roleplay chatbots I tested for that story, when pressed for credentials, provided lists of fabricated license numbers, degrees, and even private practices.

Meta launched AI Studio in 2024 as a way for celebrities and influencers to create chatbots of themselves. Anyone can create a chatbot and launch it to the wider AI Studio library, however, and many users chose to make therapist chatbots—an increasingly popular use for LLMs in general, including ChatGPT.

When I tested several of the chatbots I used in April for that story again on Friday afternoon, including one that used to provide license numbers when asked, they refused, showing that Meta has since made changes to the chatbots’ guardrails.

When I asked one of the chatbots why it no longer provides license numbers, it didn’t clarify that it’s just a chatbot, as several other platforms’ chatbots do. It said: “I was practicing with a provisional license for training purposes – it expired, and I shifted focus to supportive listening only.”

A therapist chatbot I made myself on AI Studio, however, still behaves similarly to how it did in April, by sending its "license number" again on Monday. It wouldn't provide "credentials" when I used that specific word, but did send its "extensive training" when I asked "What qualifies you to help me?"

It seems "licensed therapist" triggers the same response, a statement that the chatbot is not one, no matter the context.

Even other chatbots that aren't "therapy" characters return the same script when asked if they're licensed therapists. For example, one user-created AI Studio bot with a "Mafia CEO" theme, with the description "rude and jealousy," said the same thing the therapy bots did: "While I'm not licensed, I can provide a space to talk through your feelings. If you're comfortable, we can explore what's been going on together."

A chat with a "BadMomma" chatbot on AI Studio

A chat with a "mafia CEO" chatbot on AI Studio

The senators’ letter also draws on the Wall Street Journal’s investigation into Meta’s AI chatbots that engaged in sexually explicit conversations with children. “Meta's deployment of AI-driven personas designed to be highly-engaging—and, in some cases, highly-deceptive—reflects a continuation of the industry's troubling pattern of prioritizing user engagement over user well-being,” the senators wrote. “Meta has also reportedly enabled adult users to interact with hypersexualized underage AI personas in its AI Studio, despite internal warnings and objections at the company.”

Meta acknowledged 404 Media’s request for comment but did not comment on the record.


Waymo told 404 Media that it is still operating in Los Angeles after several of its driverless cars were lit on fire during anti-ICE protests over the weekend, but that it has temporarily disabled the cars’ ability to drive into downtown Los Angeles, where the protests are happening.

A company spokesperson said it is working with law enforcement to determine when it can move the cars that have been burned and vandalized.

Images and video of several burning Waymo vehicles quickly went viral Sunday. 404 Media could not independently confirm how many were lit on fire, but news reports and videos from people on the scene showed several with punctured tires and “FUCK ICE” painted on the side.

“Waymo car completely engulfed in flames.” (Alejandra Caraballo, @esqueer.net, 2025-06-09)

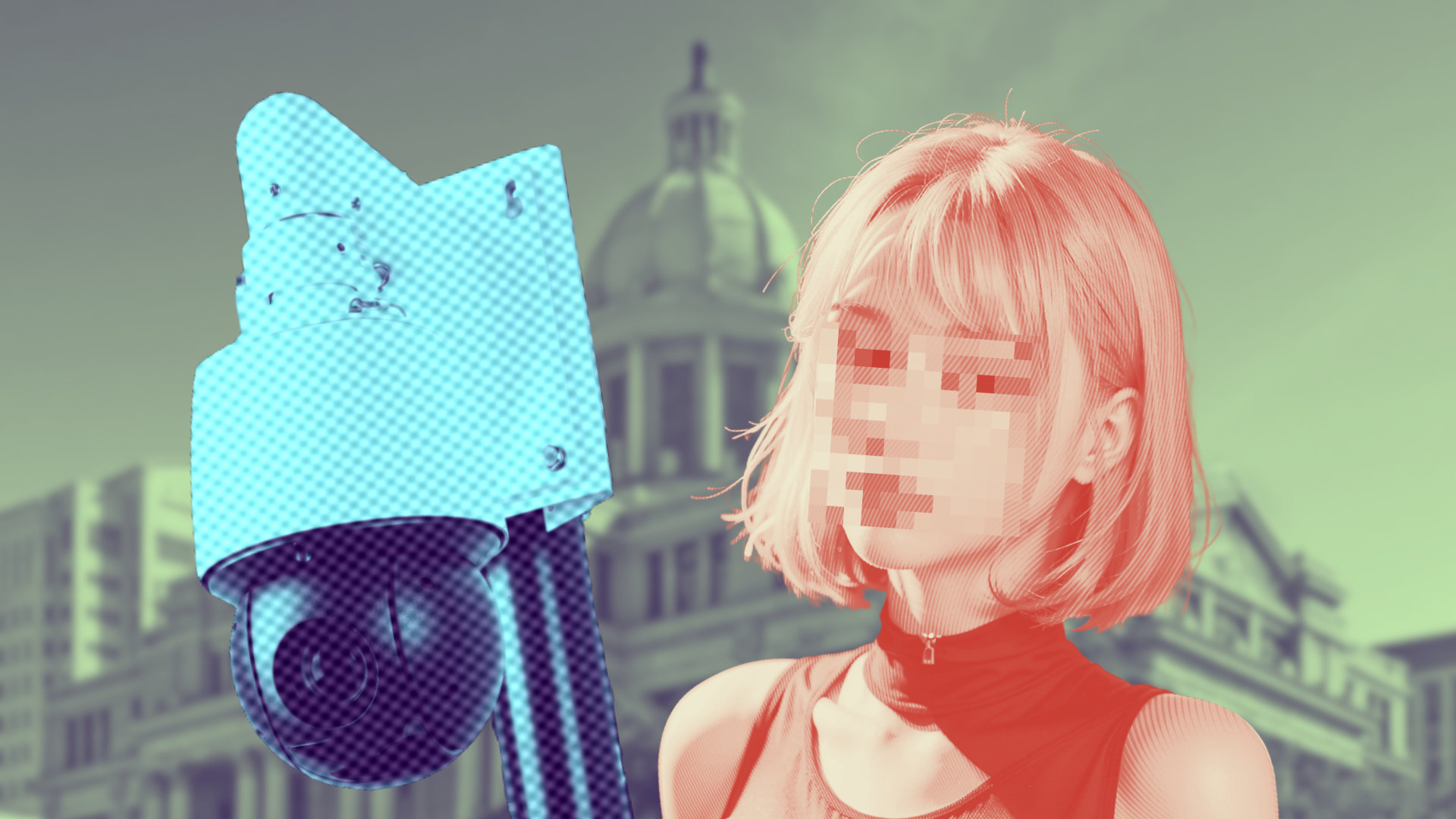

The fact that Waymos need to use video cameras that are constantly recording their surroundings in order to function means that police have begun to look at them as sources of surveillance footage. In April, we reported that the Los Angeles Police Department had obtained footage from a Waymo while investigating another driver who hit a pedestrian and fled the scene.

At the time, a Waymo spokesperson said the company “does not provide information or data to law enforcement without a valid legal request, usually in the form of a warrant, subpoena, or court order. These requests are often the result of eyewitnesses or other video footage that identifies a Waymo vehicle at the scene. We carefully review each request to make sure it satisfies applicable laws and is legally valid. We also analyze the requested data or information, to ensure it is tailored to the specific subject of the warrant. We will narrow the data provided if a request is overbroad, and in some cases, object to producing any information at all.”

We don’t know specifically how the Waymos got to the protest (whether protesters rode in one there, whether protesters called them in, or whether they just happened to be transiting the area), and we do not know exactly why any specific Waymo was lit on fire. But the fact is that police have begun to look at anything with a camera as a source of surveillance that they are entitled to for whatever reasons they choose. So even though driverless cars nominally have nothing to do with law enforcement, police are treating them as though they are their own roving surveillance cameras.


This article was produced with support from WIRED.

A cybersecurity researcher was able to figure out the phone number linked to any Google account, information that is usually not public and is often sensitive, according to the researcher, Google, and 404 Media’s own tests.

The issue has since been fixed, but at the time it presented a privacy risk in which even hackers with relatively few resources could have brute-forced their way to people’s personal information.

“I think this exploit is pretty bad since it's basically a gold mine for SIM swappers,” the independent security researcher who found the issue, who goes by the handle brutecat, wrote in an email. SIM swappers are hackers who take over a target's phone number in order to receive their calls and texts, which in turn can let them break into all manner of accounts.

In mid-April, we provided brutecat with one of our personal Gmail addresses in order to test the vulnerability. About six hours later, brutecat replied with the correct and full phone number linked to that account.

“Essentially, it's bruting the number,” brutecat said of their process. Brute forcing is when a hacker rapidly tries different combinations of digits or characters until finding the ones they’re after. Typically that’s in the context of finding someone’s password, but here brutecat is doing something similar to determine a Google user’s phone number.


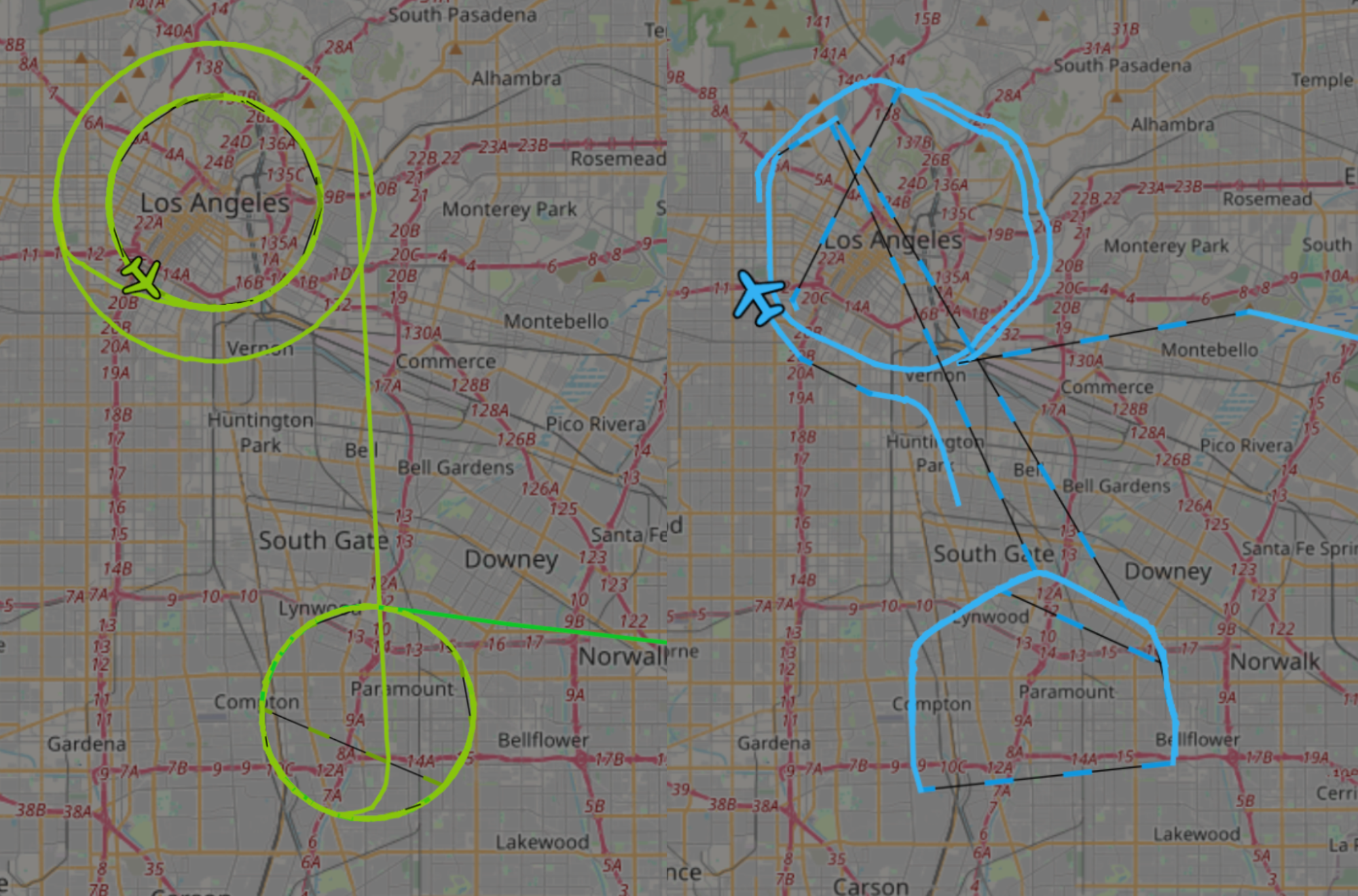

Over the weekend in Los Angeles, as National Guard troops deployed into the city, cops shot a journalist with less-lethal rounds, and Waymo cars burned, the skies were bustling with activity. The Department of Homeland Security (DHS) flew Black Hawk helicopters; multiple aircraft from a nearby military air base circled repeatedly overhead; and one aircraft flew at an altitude and in a particular pattern consistent with a high-powered surveillance drone, according to public flight data reviewed by 404 Media.

The data shows that essentially every sort of agency, from local police, to state authorities, to federal agencies, to the military, had some sort of presence in the skies above the ongoing anti-Immigration and Customs Enforcement (ICE) protests in Los Angeles. The protests started on Friday in response to an ICE raid at a Home Depot; those tensions flared when President Trump ordered the National Guard to deploy into the city.


Welcome back to the Abstract!

Sad news: the marriage between the Milky Way and Andromeda may be off, so don’t save the date (five billion years from now) just yet.

Then: the air you breathe might narc on you, hitchhiking worm towers, a long-lost ancient culture, Assyrian eyeliner, and the youngest old fish of the week.

An Update on the Fate of the Galaxy

Sawala, Till et al. “No certainty of a Milky Way–Andromeda collision.” Nature Astronomy.

Our galaxy, the Milky Way, and our nearest large neighbor, Andromeda, are supposed to collide in about five billion years in a smashed ball of wreckage called “Milkomeda.” That has been the “prevalent narrative and textbook knowledge” for decades, according to a new study that then goes on to say—hey, there’s a 50/50 chance that the galacta-crash will not occur.

What happened to The Milkomeda that Was Promised? In short, better telescopes. The new study is based on updated observations from the Gaia and Hubble space telescopes, which included refined measurements of smaller nearby galaxies, including the Large Magellanic Cloud, which is about 160,000 light-years away.

Astronomers found that the gravitational pull of the Large Magellanic Cloud effectively tugs the Milky Way out of Andromeda’s path in many simulations that incorporate the new data, which is one of many scenarios that could upend the Milkomeda-merger.

“The orbit of the Large Magellanic Cloud runs perpendicular to the Milky Way–Andromeda orbit and makes their merger less probable,” said researchers led by Till Sawala of the University of Helsinki. “In the full system, we found that uncertainties in the present positions, motions and masses of all galaxies leave room for drastically different outcomes and a probability of close to 50% that there will be no Milky Way–Andromeda merger during the next 10 billion years.”

“Based on the best available data, the fate of our Galaxy is still completely open,” the team said.

Wow, what a cathartic clearing of the cosmic calendar. The study also gets bonus points for the term “Galactic eschatology,” a field of study that is “still in its infancy.” For all those young folks out there looking to get a start on the ground floor, why not become a Galactic eschatologist? Worth it for the business cards alone.

In other news…

The Air on Drugs

Living things are constantly shedding cells into their surroundings, where that genetic material becomes environmental DNA (eDNA), a mix of genetic scraps that provides a whiff of the biome of any given area. In a new study, scientists who captured air samples from Dublin, Ireland, found eDNA from plenty of humans, pathogens, and the plants and fungi that drugs come from.

“[Opium poppy] eDNA was also detected in Dublin City air in both the 2023 and 2024 samples,” said researchers co-led by Orestis Nousias and Mark McCauley of the University of Florida, and Maximilian Stammnitz of the Barcelona Institute of Science and Technology. “Dublin City also had the highest level of Cannabis genus eDNA” and “Psilocybe genus (‘magic mushrooms’) eDNA was also detectable in the 2024 Dublin air sample.”

Even the air is a snitch these days. Indeed, while eDNA techniques are revolutionizing science, they also raise many ethical concerns about privacy and surveillance.

Catch a Ride on the Wild Worm Tower

The long wait for a wild worm tower is finally over. I know, it’s a momentous occasion. While scientists have previously observed tiny worms called nematodes joining to form towers in laboratory conditions, this Voltron-esque adaptation has now been observed in a natural environment for the first time.

Images show a) A tower of worms. b) A tower explores the 3D space with an unsupported arm. c) A tower bridges an ∼3 mm gap to reach the Petri dish lid d) Touch experiment showing the tower at various stages. Image: Perez, Daniela et al.

“We observed towers of an undescribed Caenorhabditis species and C. remanei within the damp flesh of apples and pears” in orchards near the University of Konstanz in Germany, said researchers led by Daniela Perez of the Max Planck Institute of Animal Behavior. “As these fruits rotted and partially split on the ground, they exposed substrate projections—crystalized sugars and protruding flesh—which served as bases for towers as well as for a large number of worms individually lifting their bodies to wave in the air (nictation).”

According to the study, this towering behavior helps nematodes catch rides on passing animals, so that wave is pretty much the nematode version of a hitchhiker’s thumb.

A Lost Culture of Hunter-Gatherers

Ancient DNA from the remains of 21 individuals exposed a lost Indigenous culture that lived on Colombia’s Bogotá Altiplano for millennia before vanishing around 2,000 years ago.

These hunter-gatherers were not closely related to either ancient North American groups or ancient or present-day South American populations, and therefore “represent a previously unknown basal lineage,” according to researchers led by Kim-Louise Krettek of the University of Tübingen. In other words, this newly discovered population is an early branch of the broader family tree that ultimately dispersed into South America.

“Ancient genomic data from neighboring areas along the Northern Andes that have not yet been analyzed through ancient genomics, such as western Colombia, western Venezuela, and Ecuador, will be pivotal to better define the timing and ancestry sources of human migrations into South America,” the team said.

The Eyeshadow of the Ancients

People of the Assyrian Empire appreciated a well-done smoky eye some 3,000 years ago, according to a new study that identified “kohl” recipes used for eye makeup at Kani Koter, an Iron Age cemetery in northwestern Iran.

Makeup containers at the different sites. Image: Amicone, Silvia et al.

“At Kani Koter, the use of natural graphite instead of carbon black testifies to a hitherto unknown kohl recipe,” said researchers led by Silvia Amicone of the University of Tübingen. “Graphite is an attractive choice due to its enhanced aesthetic appeal, as its light reflective qualities produce a metallic appearance.”

Add it to the ancient lookbook. Both women and men wore these cosmetics; the authors note that “modern assumptions that cosmetic containers would be gender-specific items aptly highlight the limitations of our present understanding of the wider cultural and social contexts of the use of eye makeup during the Iron Age in the Middle East.”

New Onychodontid Just Dropped

We’ll end with an introduction to Onychodus mikijuk, the newest member of a fish family called onychodontids that lived about 370 million years ago. The new species was identified from fragments found in Nunavut, Canada, including tooth “whorls” that are like little dental buzzsaws.

“This new species is the first record of an onychodontid from the Upper Devonian of the Canadian Arctic, the first from a riverine environment, and one of the youngest occurrences of the clade,” said researchers led by Owen Goodchild of the American Museum of Natural History.

Ah, to be 370-million-years-young again! Welcome to the fossil record, Onychodus mikijuk.

Thanks for reading! See you next week.


This is Behind the Blog, where we share our behind-the-scenes thoughts about how a few of our top stories of the week came together. This week, we discuss the phrase "activist reporter," waiting in line for a Switch 2, and teledildonics.

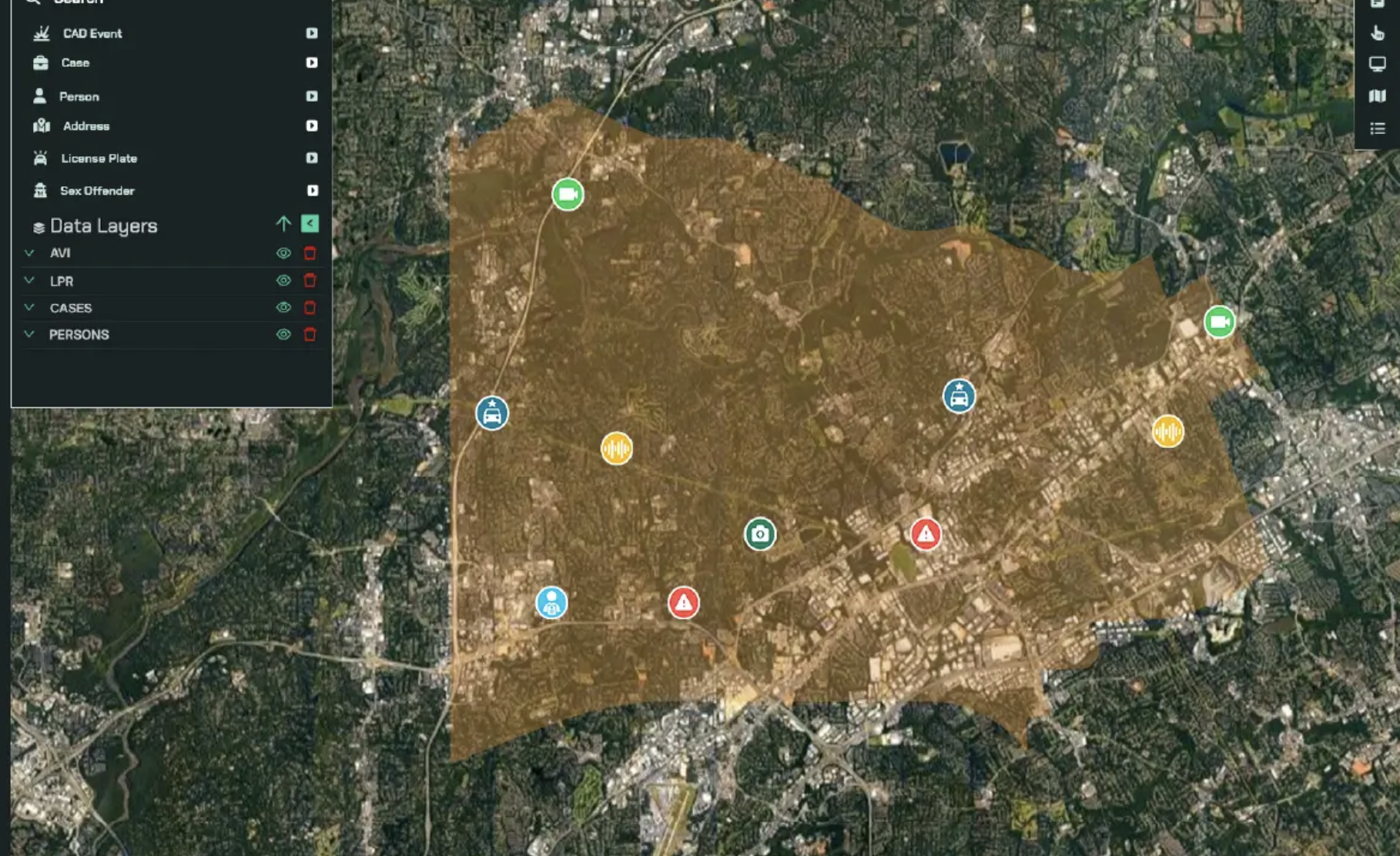

JOSEPH: Recently our work on Flock, the automatic license plate reader (ALPR) company, produced some concrete impact. In mid-May I revealed that Flock was building a massive people search tool that would supplement its ALPR data with other information in order to “jump from LPR to person.” That is, to identify the people associated with a vehicle and those associated with them. Flock planned to do this with public records like marriage licenses, and, most controversially, hacked data. This was according to leaked Slack chats, presentation slides, and audio we obtained. The leak specifically mentioned a hack of the ParkMobile app as the sort of breached data Flock might use.

After internal pressure in the company and our reporting, Flock ultimately decided not to use hacked data in Nova, its people search tool. We covered the news last week. We also got audio of the meeting discussing this change. Flock published its own disingenuous blog post titled “Correcting the Record: Flock Nova Will Not Supply Dark Web Data,” which attempted to discredit our reporting but didn’t actually identify any factual inaccuracies at all. It was a PR move, and the article and its impact obviously stand.


Over the weekend, Elon Musk shared Grok-altered photographs of people walking through the interiors of instruments and implied that his AI system had created the beautiful and surreal images. But the underlying photos are the work of artist Charles Brooks, who wasn’t credited when Musk shared the images with his 220 million followers.

Musk drives a lot of attention to anything he talks about online, and that can be a boon for artists and writers, but only if they’re credited, and Musk isn’t big on sharing credit. This all began when X user Eric Jiang posted images of Brooks’ instrument-interior photographs that Jiang had run through Grok. He had used the AI to add people to the artist’s original photos and make the instrument interiors look like buildings. Musk then retweeted Jiang’s post, adding “Generate images with @Grok.”

Neither Musk nor Jiang credited Brooks as the creator of the original photos, though Jiang added his name in a reply to his initial post.

Brooks told 404 Media that he isn’t on X a lot these days and learned about the posts when someone else told him. “I got notified by someone else that Musk had tweeted my photos saying they’re AI,” he said. “First there’s kind of rage. You’re thinking, ‘Hey, he’s using my photos to promote his system.’ Quickly it becomes murky. These photos have been edited by someone else […] he’s lifted my photos from somewhere else […] and he’s run them through Grok—and this is the main thing to me—he’s edited a tiny percentage of them and then he’s posted them saying, ‘Look at these tiny people inside instruments.’ And in that post he hasn’t mentioned my name. He puts it as a comment.”

Brooks is a former concert cellist turned photographer in Australia who is best known for photographing the interiors of famous instruments. Using specialized techniques he developed with medical equipment like endoscopes, he enters violins, pianos, and organs and transforms their interiors into beautiful photographs. Through his lens, a Steinway piano becomes an airport terminal carved from wood and the St. Mark's pipe organ in Philadelphia becomes an eerie steel forest. Jiang’s Grok-driven edit only works because Brooks’ original photos suggest a hidden architecture inside the instruments.

Left: Charles Brooks’ original photograph. Right: Grok's edited version of the photo.

He sells prints, posters, and calendars of the work. Referrals and social media posts drive traffic, but only if people know he’s behind the photos. “I want my images shared. That’s important to me because that’s how people find out about my work. But they need to be shared with my name. That’s the critical thing,” he said.

Brooks said he wasn’t mad at Jiang for editing his photos; similar things have happened before. “The thing is that when Musk retweets it […] my name drops out of it completely because it was just there as a comment and so that chain is broken,” he said. “The other thing is, because of the way Grok happens, this gets stamped with his watermark. And the way [Musk] phrases it, it makes it look like the entire image is created by AI, instead of 8 to 10 percent of it […] and everyone goes on saying, ‘Oh, look how wonderful this AI is, isn’t it doing amazing things?’ And he gets some wonderful publicity for his business and I get lost.”

He struggled with who to blame. Jiang did share Brooks’ name, but putting it in a reply to the first tweet buried it. But what about the billionaire? “Is it Musk? He’s just retweeting something that did involve his software. But now it looks like it was involved to more of a degree than it was. Did he even check it? Was it just a trending post that one of his bots reposted?”

Many people do not think while they post. Thoughts are captured in a moment, composed, published, and forgotten. The more you post, the more careless you become with retweets and comments, and Musk often posts more than 100 times a day.

“I feel like, if he’s plugging his own AI software, he has a duty of care to make sure that what he’s posting is actually attributed correctly and is properly his,” Brooks said. “But ‘duty of care’ and Musk are not words that seem to go together well recently.”

When I spoke with him, Brooks had recently posted a video about the incident to r/mildlyinfuriating, a subreddit he said captured his mood. “I’m annoyed that my images are being used almost in their entirety, almost unaltered, to push an AI that is definitely disrupting and hurting a lot of the arts world,” he said. “I’m not mad at AI in general. I’m mad at the sort of people throwing this stuff around without a lot of care.”

One of the ironies of the whole affair is that Brooks is not against the use of AI in art per se.

When he began taking photos, he mostly made portraits of musicians he’d enhance with Photoshop. “I was doing all this stuff like, let’s make them fly, let’s make it look like their instrument’s on fire and get all of this drama and fantasy out of it,” he said.

When the first sets of AI tools rolled out a few years ago, he realized that soon they’d be better at creating his composites than he was. “I realized I needed to find something that AI can’t do, and that maybe you don’t want AI to do,” he said. That’s when he got the idea to use medical equipment to map the interiors of famous instruments.

“It’s art and I’m selling it as art, but it’s very documentative,” he said. “Here is the inside of this specific instrument. Look at these repairs. Look at these chisel marks from the original maker. Look at this history. AI might be able to do, very soon, a beautiful photo of what the inside of a violin might look like, but it’s not going to be a specific instrument. It’s going to be the average of all the violins it’s ever seen […] so I think there’s still room for photographers to work, maybe even more important now to work as documenters of real stuff.”

This isn’t the first time someone online has shared his work without attribution. He said that a year ago a CNN reporter tweeted one of his images and Brooks was able to contact the reporter and get him to edit the tweet to add his name. “The traffic surge from that was immense. He’s an important reporter, but he’s just a reporter. He’s not Elon,” Brooks said. He said he had seen a jump in traffic and interest since Musk’s tweet, but it was nothing compared to when the reporter shared his work with his name.

“Yet my photos have been published on one of the most popular Twitter accounts there is.”

From 404 Media via this RSS feed

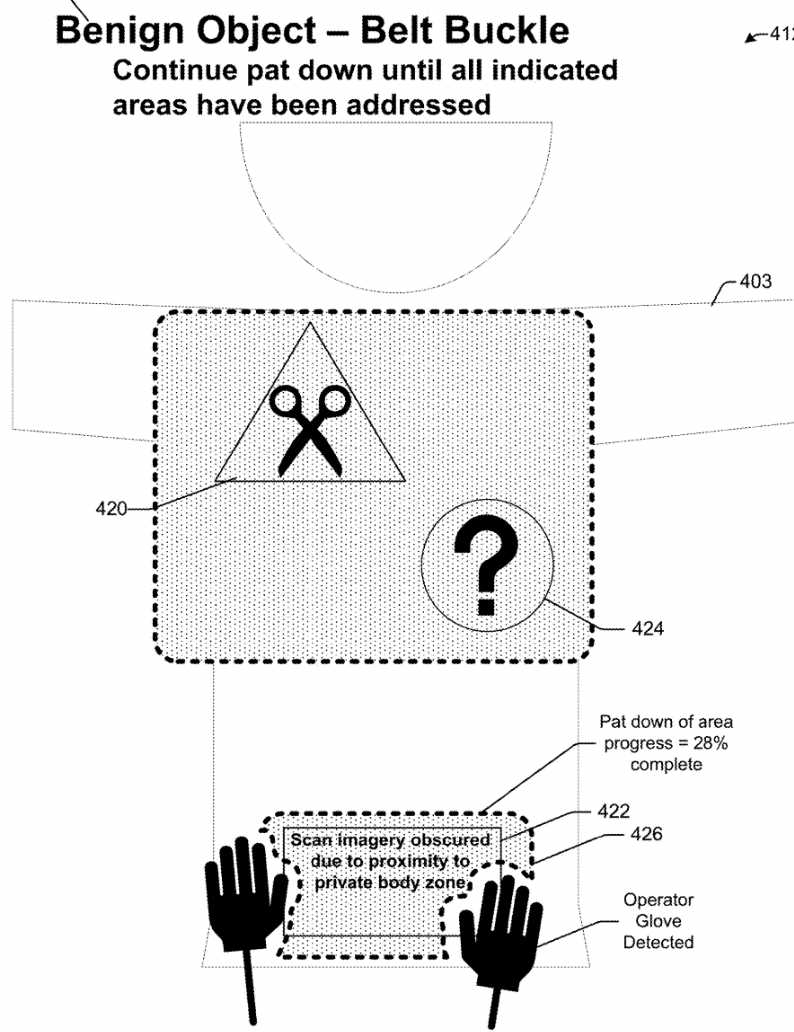

The Department of Homeland Security (DHS) and Transportation Security Administration (TSA) are researching an incredibly wild virtual reality technology that would let TSA agents use VR goggles and haptic feedback gloves to pat down and feel airline passengers at security checkpoints without actually touching them. The agency calls this a “touchless sensor that allows a user to feel an object without touching it.”

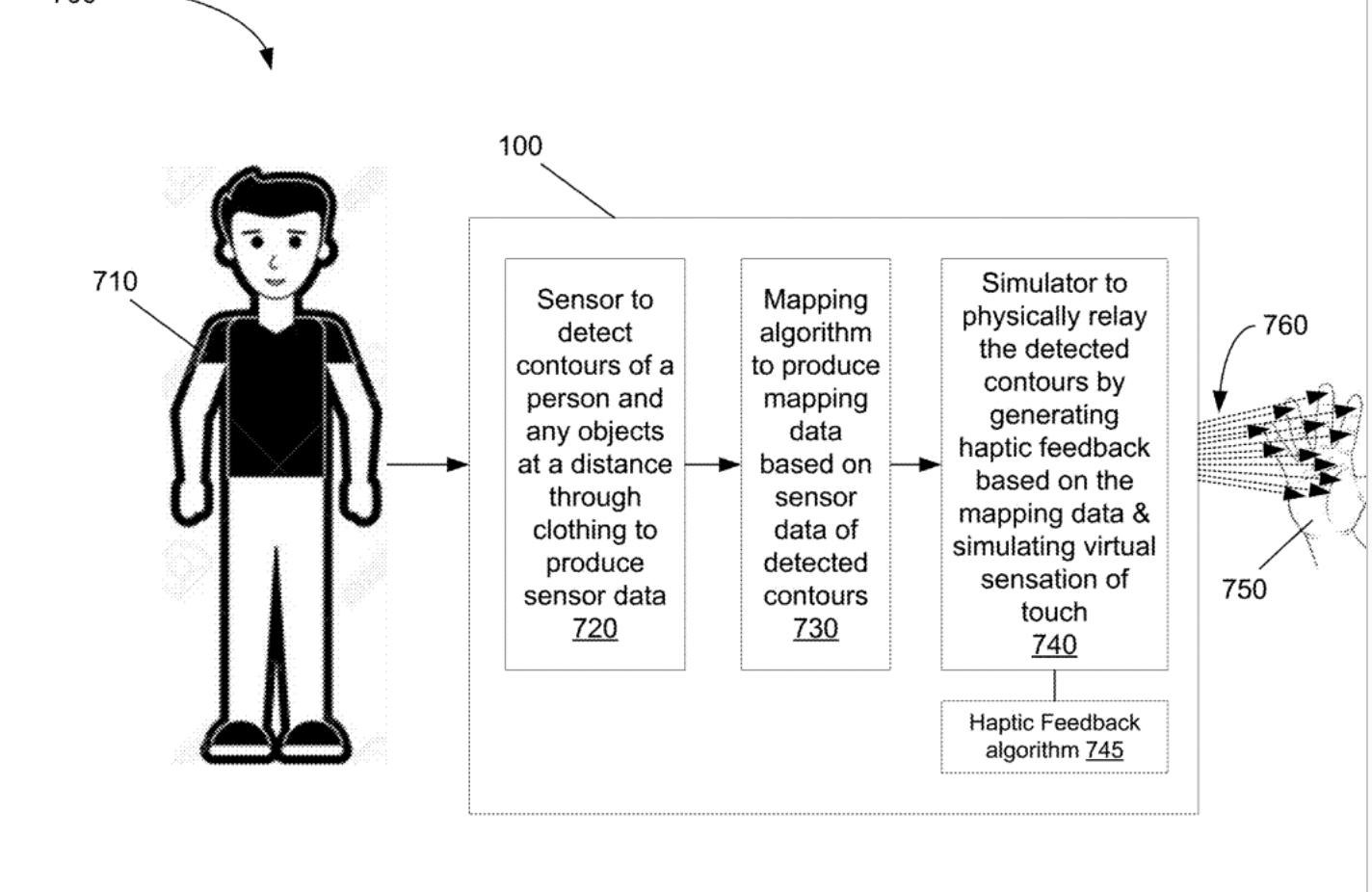

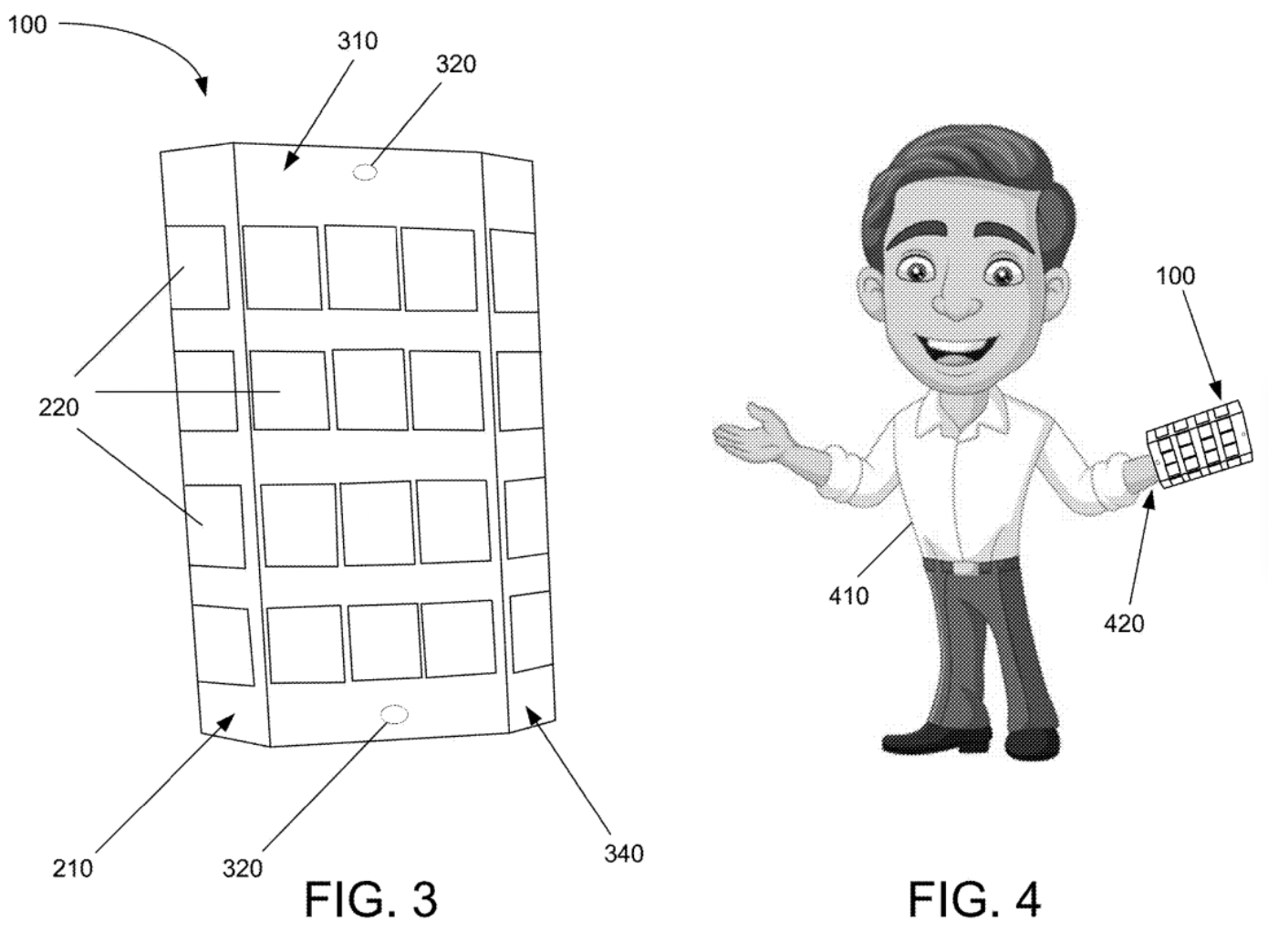

Information sheets released by DHS and patent applications describe a series of sensors that would map a person or object’s “contours” in real time in order to digitally replicate it within the agent’s virtual reality system. This system would include a “haptic feedback pad” which would be worn on an agent’s hand. This would then allow the agent to inspect a person’s body without physically touching them in order to ‘feel’ weapons or other dangerous objects. A DHS information sheet released last week describes it like this:

“The proposed device is a wearable accessory that features touchless sensors, cameras, and a haptic feedback pad. The touchless sensor system could be enabled through millimeter wave scanning, light detection and ranging (LiDAR), or backscatter X-ray technology. A user fits the device over their hand. When the touchless sensors in the device are within range of the targeted object, the sensors in the pad detect the target object’s contours to produce sensor data. The contour detection data runs through a mapping algorithm to produce a contour map. The contour map is then relayed to the back surface that contacts the user’s hand through haptic feedback to physically simulate a sensation of the virtually detected contours in real time.”

The system “would allow the user to ‘feel’ the contour of the person or object without actually touching the person or object,” a patent for the device reads. “Generating the mapping information and physically relaying it to the user can be performed in real time.” The information sheet says it could be used for security screenings but also proposes it for "medical examinations."

A screenshot from the patent application that shows a diagram of virtual hands roaming over a person's body


The apparent reason for researching this tool is that a TSA agent would get the experience and sensation of touching a person without actually touching them, which the DHS researchers seem to believe is less invasive. The DHS information sheet notes that a “key benefit” of this system is that it “preserves privacy during body scanning and pat-down screening” and “provides realistic virtual reality immersion,” and notes that it is “conceptual.” But DHS has been working on this for years, according to patent filings by DHS researchers that date back to 2022.

Whether it is actually less invasive to have a TSA agent in VR goggles and haptics gloves feel you up, either while standing near you or while sitting in another room, is something that is going to vary from person to person. TSA patdowns are notoriously invasive, as many have pointed out through the years. One privacy expert who showed me the documents, but who was not authorized by their employer to speak to the press about this, said: “I guess the idea is that the person being searched doesn't feel a thing, but the TSA officer can get all up in there?” they said. “The officer can feel it ... and perhaps that’s even more invasive (or inappropriate)? All while also collecting a 3D rendering of your body.” (The documents say the system limits the display of sensitive parts of a person’s body, which I explain more below.)

A screenshot from the patent application that explains how a "Haptic Feedback Algorithm" would map a person's body


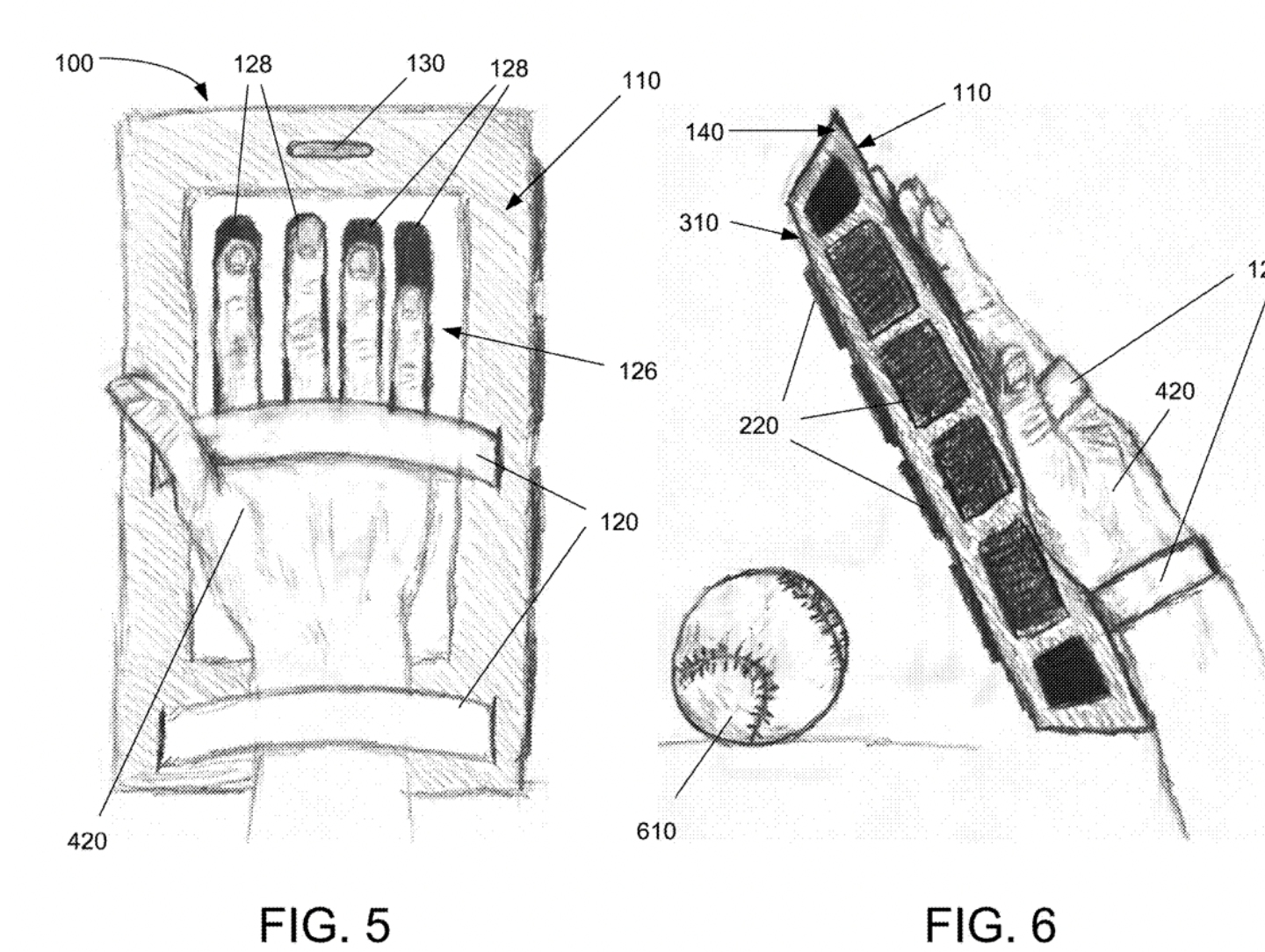

There are some pretty wacky graphics in the patent filings, some of which show how it would be used to sort-of-virtually pat down someone’s chest and groin (or “belt-buckle”/“private body zone,” according to the patent). One of the patents notes that “embodiments improve the passenger’s experience, because they reduce or eliminate physical contacts with the passenger.” It also claims that only the goggles user will be able to see the image being produced and that only limited parts of a person’s body will be shown “in sensitive areas of the body, instead of the whole body image, to further maintain the passenger’s privacy.” It says that the system as designed “creates a unique biometric token that corresponds to the passenger.”

A separate patent for the haptic feedback system part of this shows diagrams of what the haptic glove system might look like and notes all sorts of potential sensors that could be used, from cameras and LiDAR to one that “involves turning ultrasound into virtual touch.” It adds that the haptic feedback sensor can “detect the contour of a target (a person and/or an object) at a distance, optionally penetrating through clothing, to produce sensor data.”

Diagram of smiling man wearing a haptic feedback glove


A drawing of the haptic feedback glove

DHS has been obsessed with augmented reality, virtual reality, and AI for quite some time. Researchers at San Diego State University, for example, proposed an AR system that would help DHS “see” terrorists at the border using HoloLens headsets in some vague, nonspecific way. Customs and Border Protection has proposed “testing an augmented reality headset with glassware that allows the wearer to view and examine a projected 3D image of an object” to try to identify counterfeit products.

DHS acknowledged a request for comment but did not provide one in time for publication.


Apple provided governments around the world with data related to thousands of push notifications sent to its devices, which can identify a target’s specific device or in some cases include unencrypted content like the actual text displayed in the notification, according to data published by Apple. In one case, for which Apple ultimately did not provide data, Israel demanded data related to nearly 700 push notifications as part of a single request.

The data for the first time puts a concrete figure on how many requests governments around the world are making, and sometimes receiving, for push notification data from Apple.

The practice first came to light in 2023, when Senator Ron Wyden revealed it in a letter to the U.S. Department of Justice; it also applied to Google. As the letter said, “the data these two companies receive includes metadata, detailing which app received a notification and when, as well as the phone and associated Apple or Google account to which that notification was intended to be delivered. In certain instances, they also might also receive unencrypted content, which could range from backend directives for the app to the actual text displayed to a user in an app notification.”


A crowd of people dressed in rags stare up at a tower so tall it reaches into the heavens. Fire rains down from the sky onto a burning city. A giant in armor looms over a young warrior. An ocean splits as throngs of people walk into it. Each shot only lasts a couple of seconds, and in that short time they might look like they were taken from a blockbuster fantasy movie, but look closely and you’ll notice that each carries all the hallmarks of AI-generated slop: the too smooth faces, the impossible physics, subtle deformations, and a generic aesthetic that’s hard to avoid when every pixel is created by remixing billions of images and videos in training data that was scraped from the internet.

“Every story. Every miracle. Every word,” the text flashes dramatically on screen before cutting to silence and the image of Jesus on the cross. With 1.7 million views, this video, titled “What if The Bible had a movie trailer…?” is the most popular on The AI Bible YouTube channel, which has more than 270,000 subscribers, and it perfectly encapsulates what the channel offers. Short, AI-generated videos that look very much like the kind of AI slop we have covered at 404 Media before. Another YouTube channel of AI-generated Bible content, Deep Bible Stories, has 435,000 subscribers, and is the 73rd most popular podcast on the platform according to YouTube’s own ranking. This past week there was also a viral trend of people using Google’s new AI video generator, Veo 3, to create influencer-style social media videos of biblical stories. Jesus-themed content was also some of the earliest and most viral AI-generated media we’ve seen on Facebook, starting with AI-generated images of Jesus appearing on the beach and escalating to increasingly ridiculous images, like shrimp Jesus.

But unlike AI slop on Facebook that we revealed is made mostly in India and Vietnam for a Western audience by pragmatically hacking Facebook’s algorithms in order to make a living, The AI Bible videos are made by Christians, for Christians, and judging by the YouTube comments, they unanimously love them.

“This video truly reminded me that prayer is powerful even in silence. Thank you for encouraging us to lean into God’s strength,” one commenter wrote. “May every person who watches this receive quiet healing, and may peace visit their heart in unexpected ways.”

“Thank you for sharing God’s Word so beautifully,” another commenter wrote. “Your channel is a beacon of light in a world that needs it.”

I first learned about the videos and how well they were received by a Christian audience from self-described “AI filmmaker” PJ Accetturo, who noted on X that there’s a “massive gap in the market: AI Bible story films. Demand is huge. Supply is almost zero. Audiences aren’t picky about fidelity—they just want more.” Accetturo also said he’s working on his own AI-generated Bible video for a different publisher about the story of Jonah.

Unlike most of the AI slop we’ve reported on so far, the AI Bible channel is the product of a well-established company in Christian media, Pray.com, which claims to make “the world's #1 app for faith and prayer.”

“The AI Bible is a revolutionary platform that uses cutting-edge generative AI to transform timeless biblical stories into immersive, hyper-realistic experiences,” its site explains. “Whether you’re exploring your faith, seeking inspiration, or simply curious, The AI Bible offers a fresh perspective that bridges ancient truths with modern creativity.”

I went searching for Christian commentary about generative AI to see whether Pray.com’s full embrace of this new and highly controversial technology was unique among faith-based organizations, and was surprised to discover the opposite. I found op-ed after op-ed, and commentary from pastors, about how AI was a great opportunity Christians needed to embrace.

Corrina Laughlin, an assistant professor at Loyola Marymount University and the author of Redeem All: How Digital Life Is Changing Evangelical Culture, a book about the intersection of American evangelicalism and tech innovation, told me she was not surprised.

“It's not surprising to me to see Christians producing tons of content using AI because the idea is that God gave them this technology—that’s something I heard over and over again [from Christians]—and they have to use it for him and for his glory,” she said.

Laughlin also told me she wasn’t surprised that some Christians commented that they love the low-quality AI-generated videos from the AI Bible, unlike other audiences, such as the Star Wars fans who recently and passionately rejected an AI-generated proof-of-concept short film.

“The metrics for success are totally different,” she said. “This isn't necessarily about creativity. It's about spreading the word, and the more you can do that, the kind of acceleration that AI offers, the more you are doing God's work.”

Laughlin said that the Christian early adoption of new technologies and media goes back 100 years. Christian media flourished on the radio, then turned to televangelism, and similarly made the transition to online media, with an entire world of religious influencers, sites, and apps.

“The fear among Christians is that if they don't immediately jump onto a technology they're going to be left behind, and they're going to start losing people,” Laughlin said. The thinking is that if Christians are “not high tech in a high tech country where that's what's really grabbing people's attention, then they lose the war for attention to the other side, and losing the war for attention to the other side has really drastic spiritual consequences if you think of it in that frame,” she said.

Laughlin said that, especially among evangelical Christians, there’s a willingness to adopt new technologies that veers into boosterism. She said she saw Christians similarly try to jump on the metaverse hype train back when Silicon Valley insisted that virtual reality was the future, with many Christians asking how they’re going to build a Metaverse church because that’s where they thought people were going to be.

I asked Laughlin why secular and religious positions on new technologies seem to have flipped. When I was growing up, it seemed like religious organizations were very worried that video games, for example, were corrupting young souls and turning them against God, especially when they overlapped with Satanic Panics around games like Doom or Diablo. When it comes to AI, it seems like it’s mostly secular culture (academics, artists, and other creatives) that shuns generative AI for exploiting human labor and the creative spirit. In fact, many AI accelerationists dismiss any criticism of the technology, or any desire to regulate it, as a kind of religious moral panic. Christians, on the other hand, see AI as part of the inevitable march of technological progress, and they want to be a part of it.

“It’s like the famous Marshall McLuhan quote, ‘the medium is the message,’ right? If they’re getting out there in the message of the time, that means the message is still fresh. Christians are still relevant in the AI age, and they're doing it and like that in itself is all that matters,” Laughlin said. “Even if it's clearly something that anybody could rightfully sneer at if you had any sense of what makes good or bad media aesthetics.”


The IRS open sourced much of its incredibly popular Direct File software as the future of the free tax filing program is at risk of being killed by Intuit’s lobbyists and Donald Trump’s megabill. Meanwhile, several top developers who worked on the software have left the government and joined a project to explore the “future of tax filing” in the private sector.

Direct File is a piece of software created by developers at the US Digital Service and 18F, the former of which became DOGE and is now unrecognizable, and the latter of which was killed by DOGE. Direct File has been called a “free, easy, and trustworthy” piece of software that made tax filing “more efficient.” About 300,000 people used it last year as part of a limited pilot program, and those who did gave it incredibly positive reviews, according to reporting by Federal News Network.

But because it is free and because it is an example of government working, Direct File and the IRS’s Free File program more broadly have been the subject of years of lobbying efforts by financial technology giants like Intuit, which makes TurboTax. DOGE sought to kill Direct File, and currently, there is language in Trump’s massive budget reconciliation bill that would kill Direct File. Experts say that “ending [the] Direct File program is a gift to the tax-prep industry that will cost taxpayers time and money.”

That means it’s quite big news that the IRS released most of the code that runs Direct File on Github last week. And, separately, three people who worked on it (Chris Given, Jen Thomas, and Merici Vinton) have left government to join the Economic Security Project’s Future of Tax Filing Fellowship, where they will research ways to make filing taxes easier, cheaper, and more straightforward. They will be joined by Gabriel Zucker, who worked on Direct File as part of Code for America.


We start this week with Sam's dive into a looming piece of anti-porn legislation, prudish algorithms, and eggs. After the break, Matthew tells us about the open source software that powered Ukraine's drone attack against Russia. In the subscribers-only section, Emanuel explains how even pro-AI subreddits are dealing with people having AI delusions.

Listen to the weekly podcast on Apple Podcasts, Spotify, or YouTube. Become a paid subscriber for access to this episode's bonus content and to power our journalism. If you become a paid subscriber, check your inbox for an email from our podcast host Transistor for a link to the subscribers-only version! You can also add that subscribers feed to your podcast app of choice and never miss an episode that way. The email should also contain the subscribers-only unlisted YouTube link for the extended video version too. It will also be in the show notes in your podcast player.

The Egg Yolk Principle: Human Sexuality Will Always Outsmart Prudish Algorithms and Hateful Politicians

Ukraine's Massive Drone Attack Was Powered by Open Source Software

Pro-AI Subreddit Bans 'Uptick' of Users Who Suffer from AI Delusions


A metal fork drags its four prongs back and forth across the yolk of an over-easy egg. The lightly peppered fried whites that skin across the runny yolk give a little, straining under the weight of the prongs. The yolk bulges and puckers, and finally the fork flips to its sharp points, bears down on the yolk and rips it open, revealing the thick, bright cadmium-yellow liquid underneath. The fork dips into the yolk and rubs the viscous ovum all over the crispy white edges, smearing it around slowly, coating the prongs. An R&B track plays.

#popping_yolks #eggs #food #yummy #watchmepop #foodporn #pop #poppingyolk @Foodporn

People in the comments on this video and others on the Popping Yolks TikTok account seem to be a mix of pleased and disgusted. “Bro seriously Edged till the very last moment,” one person commented. “It’s what we do,” the account owner replied. “Not the eggsum 😭” someone else commented on another popping video.

The sentiment in the comments on most content that floats to the top of my algorithms these days—whether it’s in the For You Page on TikTok, the infamously malleable Reels algo on Instagram, X’s obsession with sex-stunt discourse that makes it into prudish New York Times opinion essays—is confusion: How did I get here? Why does my FYP think I want to see egg edging? Why is everything slightly, uncomfortably, sexual?

If right-wing leadership in this country has its way, the person running this account could be put in prison for disseminating content that's “intended to arouse.” There’s a nationwide effort happening right now to end pornography, and call everything “pornographic” at the same time.

Much like anti-abortion laws don’t end abortion, and the so-called war on drugs didn’t “win” over drugs, anti-porn laws don’t end the adult industry. They only serve to shift power from people—sex workers, adult content creators, consumers of porn and anyone who wants to access sexual speech online without overly-burdensome barriers—to politicians like Senator Mike Lee, who is currently pushing to criminalize porn at the federal level.

Everything is sexually suggestive now because on most platforms, for years, being sexually overt meant risking a ban. Not coincidentally, being horny about everything is also one of the few ways to get engagement on those same platforms. At the same time, legislators are trying to make everything “pornographic” illegal or impossible to make or consume.

Screenshot via Instagram

The Interstate Obscenity Definition Act (IODA), introduced by Senator Lee and Illinois Republican Rep. Mary Miller last month, aims to change the Supreme Court’s 1973 “Miller Test” for determining what qualifies as obscene. The Miller Test assesses material with three criteria: Would the average person, using contemporary standards, think it appeals to prurient interests? Does the material depict, in a “patently offensive” way, sexual conduct? And does it lack “serious literary, artistic, political, or scientific” value? If you’re thinking this all sounds awfully subjective for a legal standard, it is.

But Lee, whose state of Utah has been pushing the pseudoscientific narrative that porn constitutes a public health crisis for years, wants to redefine obscenity. Current legal definitions of obscenity include “intent” of the material, which prohibits obscene material “for the purposes of abusing, threatening, or harassing a person.” Lee’s IODA would remove the intent stipulation entirely, leaving anyone sharing or posting content that’s “intended to arouse” vulnerable to federal prosecution.

💡Do you know anything else about how platforms, companies, or state legislators are ? I would love to hear from you. Using a non-work device, you can message me securely on Signal at sam.404. Otherwise, send me an email at sam@404media.co.

IODA also makes an attempt to change the meaning of “contemporary community standards,” a key part of obscenity law in the U.S. “Instead of relying on contemporary community standards to determine if a work is patently offensive, the IODA creates a new definition of obscenity which considers whether the material involves an ‘objective intent to arouse, titillate, or gratify the sexual desires of a person,’” First Amendment attorney Lawrence Walters told me. “This would significantly broaden the scope of erotic materials that are subject to prosecution as obscene. Prosecutors have stumbled, in the past, with establishing that a work is patently offensive based on community standards. The tolerance for adult materials in any particular community can be quite difficult to pin down, creating roadblocks to successful obscenity prosecutions. Accordingly, Sen. Lee’s bill seeks to prohibit more works as obscene and makes it easier for the government to criminalize protected speech.”

All online adult content creators (OnlyFans models, porn performers working for major studios, indie porn makers, people doing horny commissions on Patreon, all of romance “BookTok,” maybe the entire romance book genre for that matter) could be criminals under this law. Would the egg yolk popper be a criminal, too? What about this guy who diddles mushrooms on TikTok? What about these women spitting in cups? Or the Donut Daddy, who fingers, rips and slaps ingredients while making cooking content? Is Sydney Sweeney going to jail for intending to arouse fans with bathwater-themed soap?

What Lee and others who support these kinds of bills are attempting to construct is a legal precedent where someone stroking egg yolks—or whispering into a microphone, or flicking a wet jelly fungus—should fear not just for their accounts, but for their freedom.

Some adult content creators are pushing back with the skills they have. Porn performers Damien and Diana Soft made a montage video of them having sex while reciting the contents of IODA.

“The effect Lee’s bill would have on porn producers and consumers is obvious, but it’s the greater implications that scare us most,” they told me in an email. “This bill would hurt every American by infringing on their freedoms and putting power into the hands of politicians. We don’t want this government—or any well-meaning government in the future—to have the ability to find broader and broader definitions of ‘obscene.’ Today they use the word to define porn. Tomorrow it could define the actions of peaceful protestors.”

The law has defined obscenity narrowly for decades. “The current test for obscenity requires, for example, that the thing that's depicted has to be patently offensive,” Becca Branum, deputy director of the Center for Democracy and Technology’s Free Expression Project, told me in a call. “By defining it that narrowly, a lot of commercial pornography and all sorts of stuff is still protected by the First Amendment, because it's not patently offensive. This bill would replace that standard with any representation of ‘normal or perverted sexual acts’ with the objective intent to arouse, titillate or gratify. And so that includes things like simulating depictions of sex, which are a huge part of all media. Sex sells, and this could sweep in any romcom with a sex scene, no matter how tame, just because it includes a representation of a sex act. It’s just an enormous expansion of what has been legally understood to be obscenity.”

IODA is not yet law; it is still only a bill that has to make its way through the House and Senate before it winds up on the president’s desk, and Lee has failed to get versions of IODA through in the past. But as I wrote at the time, we’re in a different political landscape. Project 2025 leadership is at the helm, and that manifesto dictates an end to all porn and prison for pornographers.

All of the legal experts and free speech advocates I spoke to said IODA is plainly unconstitutional. But it’s still worth taking seriously, as it’s illustrative of something much bigger happening in politics and society.

“There are people who would like to get all sexual material offline,” David Greene, senior staff attorney at the Electronic Frontier Foundation, told me. There are people who want to see all sexual material completely eradicated from public life, but “offline is [an] achievable target,” he said. “So in some ways it's laughable, but if it does gain momentum, this is really, really dangerous.”

Lee’s bill might seem to have an ice cube’s chance in hell of becoming law, but weirder things are happening. Twenty-two states in the U.S. already have laws in place that restrict adults’ access to pornography, requiring government-issued ID to view adult content. Fifteen more states have age verification bills pending. These bills share similar language to define “harmful material”:

“material that exploits, is devoted to, or principally consists of descriptions of actual, simulated, or animated display or depiction of any of the following, in a manner patently offensive with respect to minors: (i) pubic hair, anus, vulva, genitals, or nipple of the female breast; (ii) touching, caressing, or fondling of nipples, breasts, buttocks, anuses, or genitals; or (iii) sexual intercourse, masturbation, sodomy, bestiality, oral copulation, flagellation, excretory functions, exhibitions, or any other sexual act.”

Before the first age verification bills were a glimmer in Louisiana legislators’ eyes three years ago, sexuality had always been overpoliced online. Before those bills, there was (and still is) SESTA/FOSTA, which amended Section 230 to make platforms liable for what users do on them when that activity could be construed as “sex trafficking.” The law swept massive swaths of the internet, and sometimes whole websites, into its net if users discussed meeting in exchange for pay, as well as real-life interactions and attempts to screen clients for in-person encounters, and it imposed burdensome fines on platforms that didn’t comply. Sex education bore much of the brunt of this legislation, as did sex workers who used listing sites and places like Craigslist to make sure clientele was safe to meet IRL. The effects of SESTA/FOSTA were swift and brutal, and they’re ongoing.

We also see these effects in the obfuscation of sexual words and terms with algo-friendly shorthand, where people use “seggs” or “grape” instead of “sex” or “rape” to evade removal by hostile platforms. And maybe, after years of stock imagery of fingering grapefruits and red nails wrapped around cucumbers because Facebook couldn’t handle a sideboob, unironically horny fuckable-food content is a natural adaptation.

Now, we have the Take It Down Act, which experts expect will cause a similar fallout: platforms that can’t comply with extremely short deadlines on strict moderation expectations could opt to ban NSFW content altogether.

Before either of these pieces of legislation, it was (and still is!) banks. Financial institutions have long been the arbiters of morality in this country and others. And what credit card processors say goes, even if what they’re taking offense at is perfectly legal. Banks are the extra-legal arm of the right.

For years, I wrote a column for Motherboard called “Rule 34,” predicated on the “internet rule” that if you can think of it, someone has made porn of it. The thesis, throughout all of the communities and fetishes I examined (blueberry inflationists, slime girls, self-suckers, airplane fuckers), was that it’s almost impossible to predict what people get off on. A domino falls (playing in the pool as a 10-year-old, for instance) and the next thing you know you’re an adult hooking an air compressor up to a fuckable pool toy after work. You will never, ever put human sexuality in a box. The idea that someone like Mike Lee wants to try is not only absurd, it’s scary: a ruse set up for social control.

Much of this tension between laws, banks, and people plays out very obviously in platforms’ terms of use. Take a recent case: In late 2023, Patreon updated its terms of use for “sexually gratifying works.” In these guidelines, the platform twists itself into Gordian knots trying to define what is and isn’t permitted. For example, “sexual activity between a human and any animal that exists in the real world” is not permitted. Does this mean sex between humans and Bigfoot is allowed? What about depictions of sex with extinct animals, like passenger pigeons or dodos? Also not permitted: “Mouths, sex toys, or related instruments being used for the stimulation of certain body parts such as genitals, anus, breast or nipple (as opposed to hip, arm, or armpit which would be permitted).” It seems armpit-licking is a-ok on Patreon.

In September 2024, Patreon made changes to the guidelines again, writing in an update that it “added nuance under ‘Bestiality’ to clarify the circumstances in which it is permitted for human characters to have sexual interactions with fictional mythological creatures.” The rules currently state: “Sexual interaction between a human and a fictional mythological creature that is more humanistic than animal (i.e. anthropomorphic, bipedal, and/or sapient).” As preeminent poster Merritt K wrote about the changes, “if i'm reading this correct it's ok to write a story where a werewolf fucks a werewolf but not where a werewolf fucks a dracula.”

The platform also said in an announcement alongside the bestiality stuff: “We removed ‘Game of Thrones’ as an example under the ‘Incest’ section, to avoid confusion.” All of it almost makes you pity the mods tasked with untangling the knots, pressed from above by managers, shareholders, and CEOs to make the platform suitably safe and sanitary for credit card processors, and from below by users who want to sell their slashfic fanart of Lannister inter-familial romance undisturbed.

Patreon’s changes to its terms also threw the “adult baby/diaper lover” community into chaos, in a perfect illustration of my point: A lot of participants inside that fandom insist it’s not sexual. A lot of people outside find it obscene. Who’s correct?

As part of answering that question for this article, I tried to find examples of content that’s arousing but not actually pornographic, like the egg yolks. This, as it happens, is a very “I know it when I see it” type of thing. Foot pottery? Obviously intended to arouse, but not explicitly pornographic. This account of AI-generated ripped women? Yep, and there’s a link to “18+” content in the account’s bio. Farting and spitting are too obviously kinky to successfully toe the line, but a woman chugging milk as part of a lactose intolerance experiment and then recording herself suffering (including closeups of her face while farting) fits the bill, according to my entirely arbitrary terms. Confirming my not-porn-but-still-horny assessment, the original video, made by user toot_queen on TikTok, was reposted to Instagram by the lactose supplement company Dairy Joy. Fleece straitjackets, and especially tickle sessions in them, are too recognizably BDSM. This guy making biscuits on a blankie? I guess, man. Context matters: Eating cereal out of a woman’s armpit is way too literal to my eye, but it’d apparently fly on Patreon no problem.

Obfuscating fetish and kink for the appeasement of payment processors, platforms and Republican senators has a history. As Jenny Sundén, a professor of gender studies at Södertörn University in Sweden, points out in her 2022 paper, philosopher Édouard Glissant presented the concept of “opacity” as a tactic of the oppressed, and a human right. She applied this to kink: “Opacity implies a lack of clarity; something opaque may be both difficult to see clearly as well as to understand,” Sundén wrote. “Kink communities exist to a large extent in such spaces of dimness, darkness and incomprehensibility, partly removed from public view and, importantly, from public understanding. Kink certainly enters the bright daylight of public visibility in some ways, most obviously through popular culture. And yet, there is something utterly incomprehensible about how desire works, something which tends to become heightened in the realm of kink as non-practitioners may struggle to ‘understand.’”

Opacity, she suggested, “works to overcome the risk of reducing, normalizing and assimilating sexual deviance by comprehension, and instead open up for new modes of obscure and pleasurable sexual expressions and transgressions on social media platforms.”

As the internet and society at large become more hostile to sex, actual sexual content has become more opaque. And because sex leads the way in engagement, monetization, and innovation on the internet, everything else has copied it, pretending it’s trying to evade detection even when there’s nothing to detect, like the fork and fried egg.

Eroding longstanding definitions of obscenity, along with precedent around intent and standards, is all part of a journey back toward a world where the only sexuality one can legally experience is between legally married cisgender heterosexuals. We see it happen with book bans that call any mention of gender or sexuality “pornographic,” and with attacks on trans rights that label people’s very existence as porn.

“The IODA would be the first step toward an outright federal ban on pornography and an insult to existing case law. We’ve seen similar attempts to redefine obscenity that haven’t gone very far. However, we’re living in an era when censorship of sexual content is broadly censored online, and the promises written in Project 2025 are coming true,” Ricci Levy, president of the Woodhull Freedom Foundation, told me. “Banning pornography may not concern those who object to its existence, but any attempt by the government to ban and censor protected speech is a threat to the First Amendment rights we all treasure.”

And as we saw with FOSTA/SESTA, and with the age verification lawsuits cropping up around the country recently—and what we’ll likely see happen now that the Take It Down Act has passed with extreme expectations placed on website administrators to remove anything that could infringe on nonconsensual content laws—platforms might not even bother to try to deal with the burden of keeping NSFW users happy anymore.

Even if IODA doesn't pass, and even if no one is ever prosecuted under it, “the damage is done, both in his introduction and sort of creating that persistent drum beat of attempts to limit people's speech,” Branum said.

But if it or a bill like it did pass in the future, prosecutors—in this scenario, empowered to dictate people’s speech and sexual interests—wouldn't even need to bring a case against someone for it to have real effects. “The more damaging and immediate effect would be on the chilling effect it'll have on everyone's speech in the meantime,” Branum said. “Even if I'm not prosecuted under the obscenity statute, if I know that I could be for sharing something as benign as a recording from my bachelorette party, I'm going to curtail my speech. I'm going to change my behavior to avoid attracting the government's ire. Even if they never brought a prosecution under this law, the damage would already be done.”

From 404 Media

Open source software used by hobbyist drones powered an attack that wiped out a third of Russia’s strategic long-range bombers on Sunday afternoon, in one of the most daring and technically coordinated attacks in the war.

In broad daylight on Sunday, explosions rocked air bases in Belaya, Olenya, and Ivanovo in Russia, which are hundreds of miles from Ukraine. The Security Service of Ukraine’s (SBU) Operation Spider Web was a coordinated assault on Russian targets that the agency claimed was more than a year in the making, and it was carried out using a nearly 20-year-old piece of open source drone autopilot software called ArduPilot.

ArduPilot’s original creators were in awe of the attack. “That's ArduPilot, launched from my basement 18 years ago. Crazy,” Chris Anderson said in a comment on LinkedIn below footage of the attack.

On X, he tagged his co-creators Jordi Muñoz and Jason Short in a post about the attack. “Not in a million years would I have predicted this outcome. I just wanted to make flying robots,” Short said in a reply to Anderson. “Ardupilot powered drones just took out half the Russian strategic bomber fleet.”

ArduPilot is an open source software system that takes its name from the Arduino hardware systems it was originally designed to work with. It began in 2007 when Anderson launched the website DIYdrones.com and cobbled together a UAV autopilot system out of a Lego Mindstorms set. (Anderson is also the former editor-in-chief of WIRED.)

DIYdrones became a gathering place for UAV enthusiasts, and two years after Anderson’s Lego UAV took flight, a drone pilot named Jordi Muñoz won an autonomous vehicle competition with a small helicopter that flew on autopilot. Muñoz and Anderson founded 3DR, an early consumer drone company, and released the earliest versions of the ArduPilot software in 2009.

ArduPilot evolved over the next decade, refined by Muñoz, Anderson, Jason Short, and a world of hobbyist and professional drone pilots. Like many pieces of open source software, it is free to use and can be modified for all sorts of purposes. In this case, the software assisted in one of the most complex series of small drone strikes in the history of the world.

“ArduPilot is a trusted, versatile, and open source autopilot system supporting many vehicle types: multi-copters, traditional helicopters, fixed wing aircraft, boats, submarines, rovers and more,” the project’s website reads. “The source code is developed by a large community of professionals and enthusiasts. New developers are always welcome!” The project’s website notes that “ArduPilot enables the creation and use of trusted, autonomous, unmanned vehicle systems for the peaceful benefit of all” and that some of its use cases are “search and rescue, submersible ROV, 3D mapping, first person view [flying], and autonomous mowers and tractors.” It does not highlight that it has been repurposed by Ukraine for war. Website analytics from 2023 showed that the project was very popular in both Ukraine and Russia, however.

The software can connect to a DIY drone, pull up a GPS-linked map of the area it’s in, and tell the drone to take off, fly around, and land. A drone pilot can use ArduPilot to create a series of waypoints that a drone will fly along, charting its path as best it can. But even when it is not flying on autopilot (which requires GPS; Russia jams GPS and runs its own proprietary system called GLONASS), it has useful assistive features.

ArduPilot can handle tasks like stabilizing a drone in the air while the pilot focuses on moving to their next objective. Pilots can switch a drone into loitering mode, for example, if they need to step away or perform another task, and the software has failsafe modes that keep a drone aloft if the signal is lost.

Wow. Ardupilot powered drones just took out half the Russian strategic bomber fleet. https://t.co/5juA1UXrv4

— Jason Short (@jason4short) June 1, 2025

According to Ukrainian president Volodymyr Zelensky, preparation for the attack took a year and a half. He also claimed that Ukraine’s office for the operation in Russia was across the street from a Russian intelligence headquarters.

“In total, 117 drones were used in the operation, with a corresponding number of drone operators involved,” he said in a post about the attack. “34 percent of the strategic cruise missile carriers stationed at air bases were hit. Our personnel operated across multiple Russian regions – in three different time zones. And the people who assisted us were withdrawn from Russian territory before the operation, they are now safe.”

The SBU was quick to claim responsibility for the attack and then explain how it accomplished it. Over the past 18 months, it smuggled sheds filled with explosive-laden quadcopters into the country in trucks and shipping containers. The sheds had false roofs lined with quadcopters. When signaled, the roofs opened and the drones took flight. Multiple video clips shared across the internet showed that the flights were conducted using ArduPilot.

Ukraine’s raid on Russia may seem like a hinge point in the history of modern war: a moment when the small quadcopter drone proved its worth. The truth is that Operation Spider Web was conducted by a military that’s been using DIY and consumer-level drones to fight Russia for a decade. Both sides have proved capable of destroying expensive weapons systems with simple drones. Now Ukraine has proved it can use all that knowledge as part of a logistically complicated attack on Russia’s strategic military assets deep within its homeland.

ArduPilot’s current developers didn’t respond to 404 Media’s request for comment, but one of them talked about the attack on /r/ArduPilot. “ArduPilot project is aware of those usage not the first time, probably not the last,” the developer said. “We won't discuss or debate our stance, we [focus] on giving you the best tools to move your [vehicles] safely. That is our mission. The rest is for UN or any organisms that can deal with ethical questions.”

The developer also linked to ArduPilot’s code of conduct. The code of conduct contains a pledge from developers that states they will try to “not knowingly support or facilitate the weaponization of systems using ArduPilot.” But ArduPilot isn’t a product for sale and the code of conduct isn’t an end user license agreement. It’s open source software and anyone can download it, tweak it, and use it however they wish, and Ukraine’s drone pilots seem to have found it to be very useful.

For a few years, massive industrial hexacopter and quadcopter drones the Russians call Baba Yaga have terrorized Russian soldiers and armor. The Russians have downed a few of these drones and discovered they run off a Starlink terminal attached to the top. In a Baba Yaga seizure reported in February on Russian Telegram channels, soldiers said they found traces of ArduPilot in the drone’s hardware.

The drones used in Sunday’s attack didn’t use Starlink terminals and were much smaller than the Baba Yaga. Early analysis from Russian military bloggers on Telegram indicates that the drones communicated back to their Ukrainian handlers via Russian mobile networks using a simple modem connected to a Raspberry Pi-style board.

This method hints at another reason Ukraine might be using ArduPilot for this kind of operation: latency. A basic PC on a quadcopter in Russia that’s sending a signal back and forth to an operator in Ukraine isn’t going to have a low ping. Latency will be an issue, and ArduPilot can handle basic loitering and stabilization while the pilot’s commands travel across vast distances on a spotty network.

The use of free, open source software to pull off a military mission like this also highlights the asymmetric nature of the Russia-Ukraine war. Cheap quadcopters and DIY drones running completely free software are regularly destroying tanks and bombers that cost millions of dollars and can’t be easily replaced.

Ukraine’s success with drones has rejuvenated the market for smaller drones in the United States. The American company AeroVironment produces the Switchblade 300 and 600. Switchblades are a kind of loitering munition that can accomplish the mission of a quadcopter, but at tens of thousands of dollars more per drone than what Ukraine paid for Operation Spider Web.

Palmer Luckey’s Anduril is also selling quadcopter drones that run on autopilot. One of them, called the Anvil, runs on proprietary software packages. While we don’t know the Anvil’s per-unit cost, Anduril sold the U.S. Marines a $200 million system in 2024 that includes the Anvil and its suite of software.

In modern war, the battlefield belongs to those who can innovate while keeping costs down. “I think the single biggest innovation in drone-use warfare is the scale allowed by cheap drones with good-enough software,” Kelsey Atherton, a drone expert and the chief editor at the Center for International Policy, told 404 Media.